114 results for "Robot vision systems" patented technology

Simulation method and device of digital twin system of industrial robot

Status: Inactive | Publication: CN108724190A | Tags: Avoid exception; Improve adaptability; Programme-controlled manipulator; Visual technology; Work task

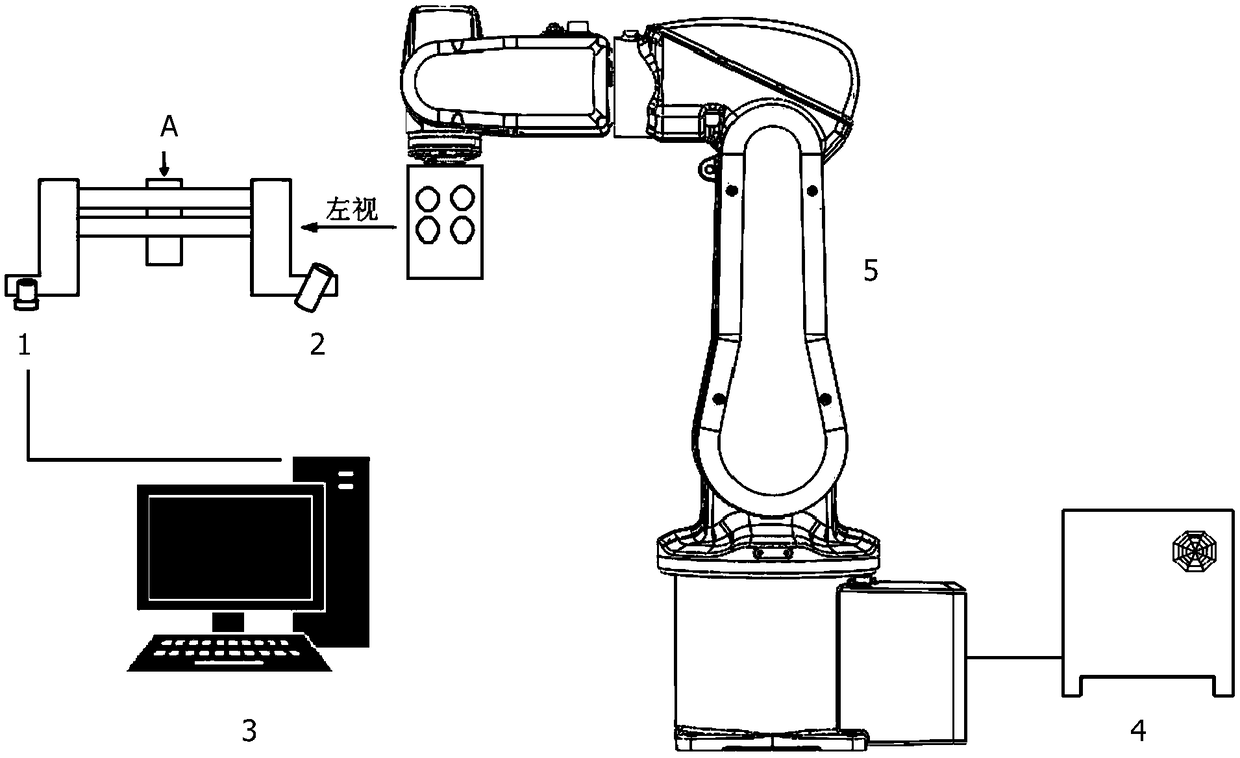

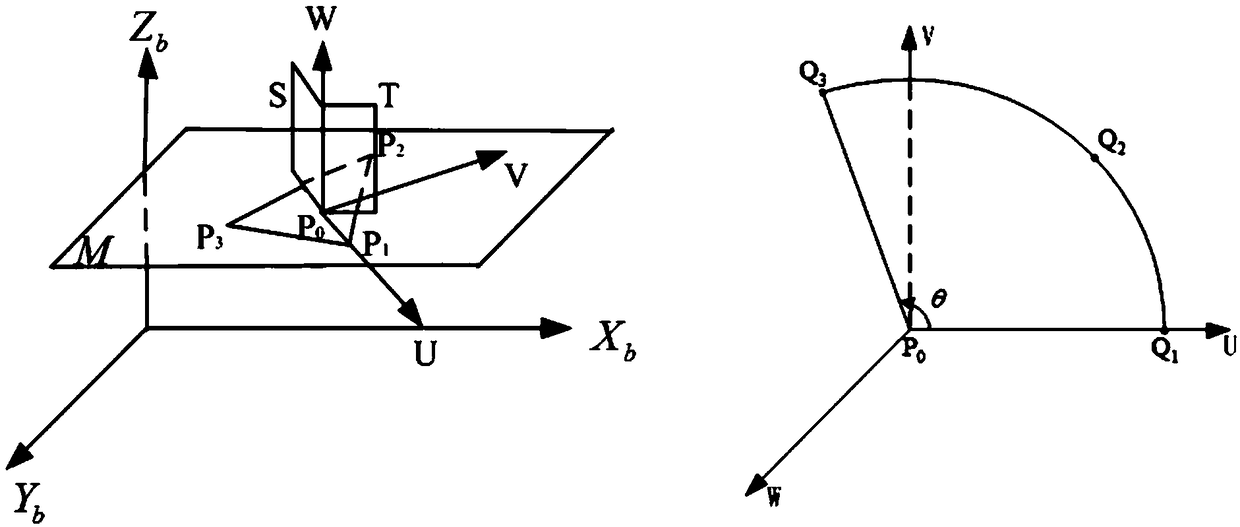

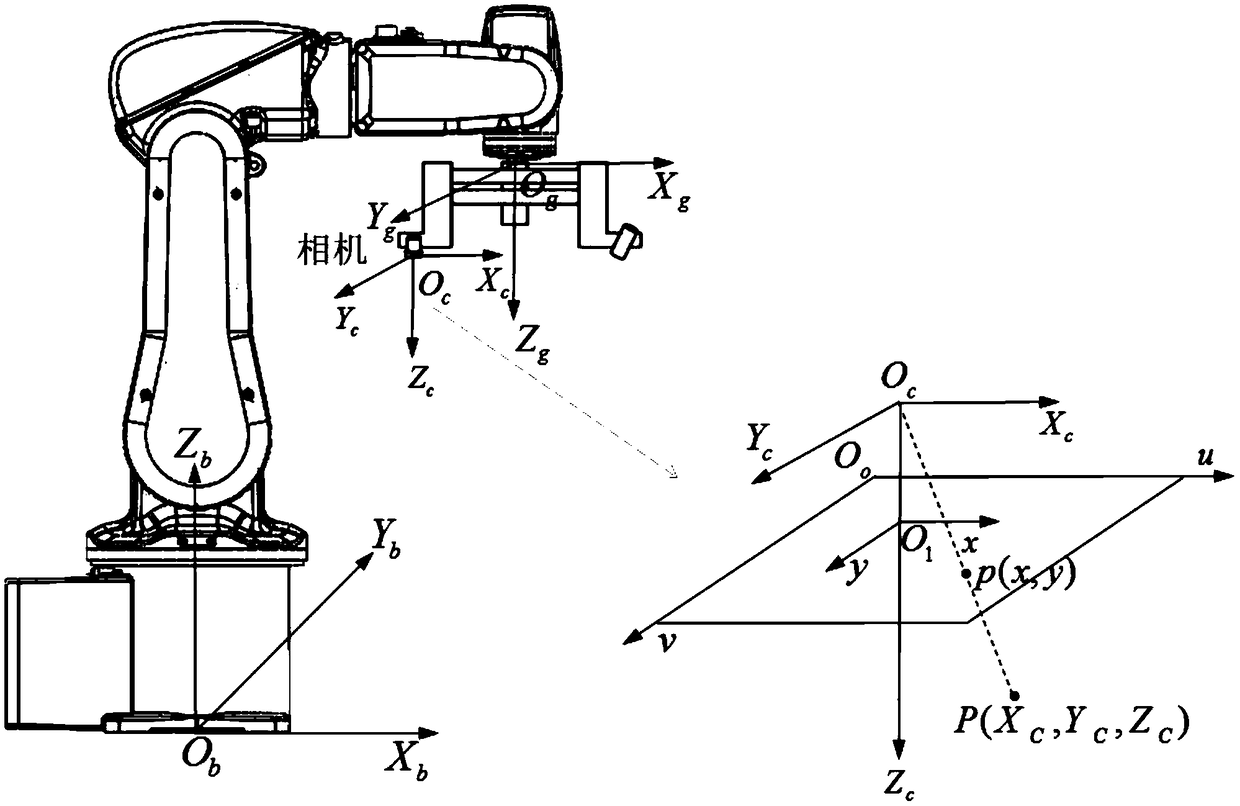

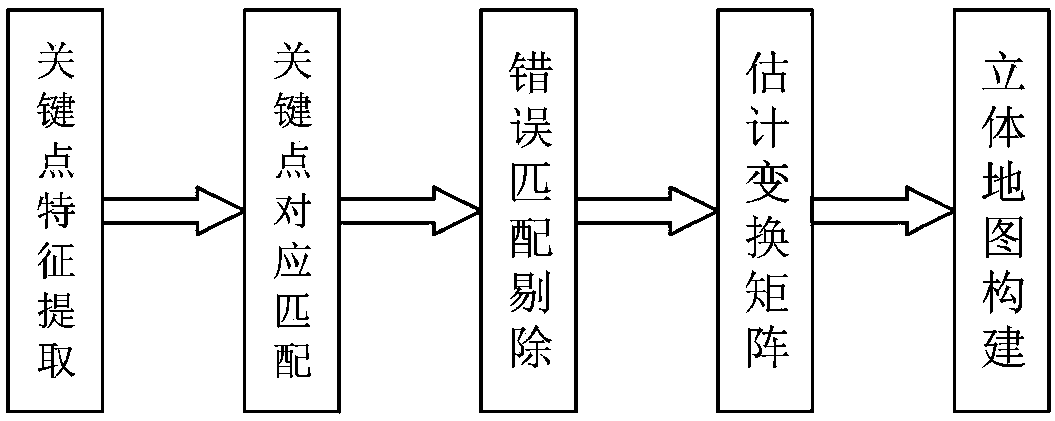

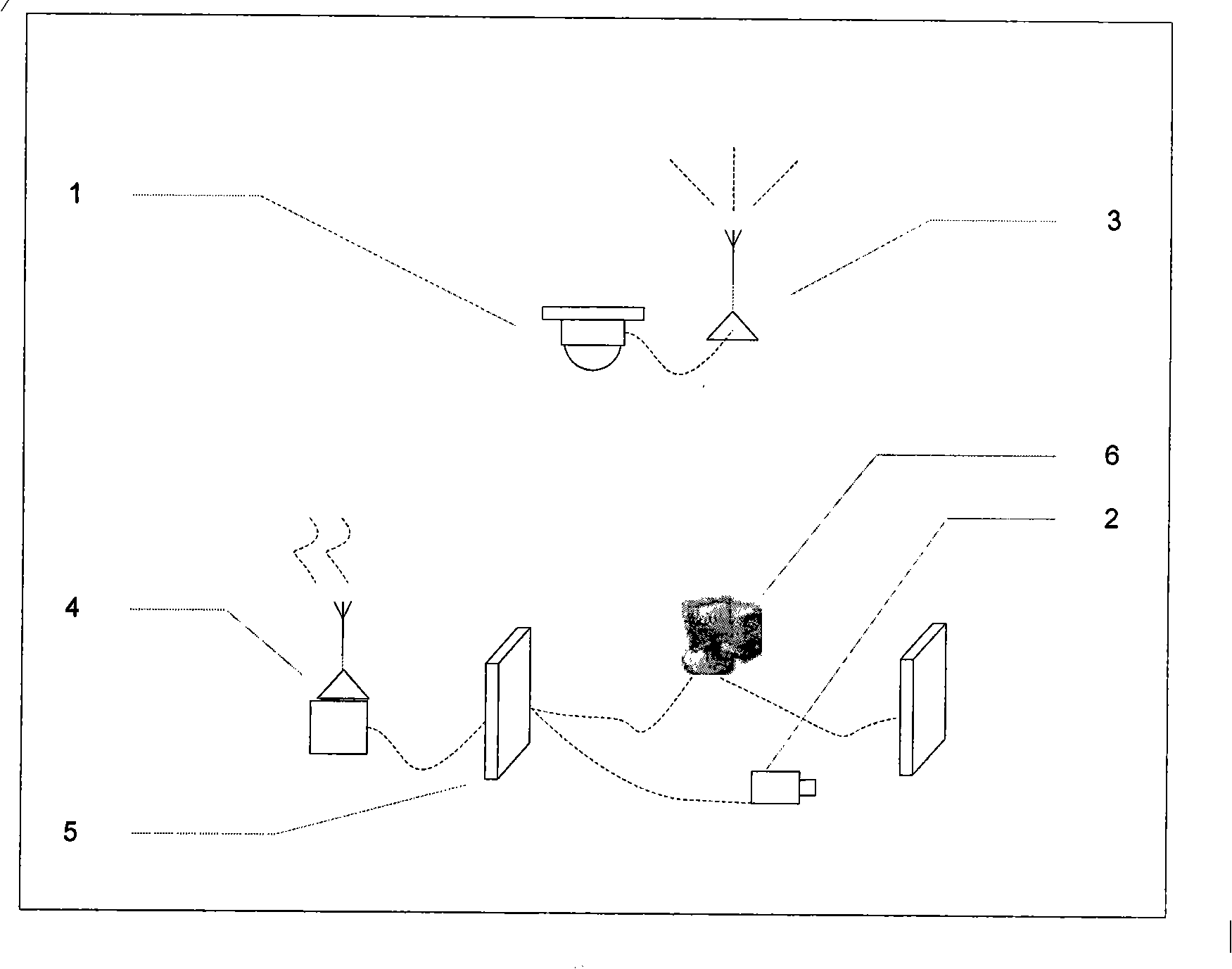

The invention provides a simulation method and device for a digital twin system of an industrial robot. The simulation device comprises the industrial robot, a visual perception unit and a computer. The visual perception unit is mounted at the end of the robot and consists of a camera and a line-structured light emitter. An industrial robot simulation system is established, the robot is modeled, and three instructions are parsed and executed; a three-dimensional target measurement model of the robot vision system is obtained using line-structured light triangulation; motion instructions are determined for the robot's work task, virtual simulation is carried out on the computer, and reachability and collision checks are performed; finally, the robot identifies the target object through the visual perception unit, and the actual robot motion is driven. Virtual simulation makes the device safe and reliable and avoids abnormal operations that could occur in actual operation; identifying the target object with three-dimensional vision improves the robot's adaptability to the field environment and enhances its flexibility and intelligence.

Owner:XI AN JIAOTONG UNIV
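
The abstract names line-structured light triangulation but does not give its measurement model. A common formulation intersects the camera ray through a laser-stripe pixel with the calibrated laser plane. The minimal sketch below assumes an undistorted pinhole camera with intrinsics `fx, fy, cx, cy` and a laser plane `n . X = d` already expressed in the camera frame; `Intrinsics` and `triangulate_laser_pixel` are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (assumed: image already undistorted)."""
    fx: float
    fy: float
    cx: float
    cy: float


def triangulate_laser_pixel(u, v, K, plane_normal, plane_d):
    """Intersect the camera ray through pixel (u, v) with the laser plane n . X = d.

    The plane is expressed in the camera frame; returns (X, Y, Z) in that frame.
    """
    # Back-project the pixel to a ray direction with unit depth (z = 1).
    ray = ((u - K.cx) / K.fx, (v - K.cy) / K.fy, 1.0)
    denom = sum(n * r for n, r in zip(plane_normal, ray))
    if abs(denom) < 1e-12:
        raise ValueError("camera ray is parallel to the laser plane")
    # Scale factor along the ray; equals the depth Z because ray[2] == 1.
    t = plane_d / denom
    return tuple(t * r for r in ray)
```

Applying the function to every pixel of the detected stripe yields one 3D profile per image; sweeping the stripe across the target builds up the full measurement.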

Three-dimensional point cloud-based patrol robot vision system and control method

Status: Inactive | Publication: CN108171796A | Tags: Improve matching accuracy; Visual inspection; Image enhancement; Image analysis; Real-time data; Point cloud

The invention relates to a three-dimensional point cloud-based vision system for a patrol robot and a control method. The system collects point cloud data of the patrol environment with an RGB-D camera and builds a three-dimensional map of the environment using point cloud fusion. Obstacle avoidance and optimal path planning are performed with an artificial potential field method. A convolutional neural network recognition algorithm, fused with the three-dimensional features of objects, identifies target objects in the patrol environment, and the three-dimensional coordinates of each target are accurately located from the mapping relationship between the target and the camera. Real-time data obtained by the patrol robot are transmitted quickly to a control terminal over a wireless network, where an operator can monitor or play back the patrol in real time and control the robot to execute patrol tasks. With this control method, the patrol robot's working environment is not affected by changes in ambient light, and the patrol task can be completed in low light.

Owner:YANSHAN UNIV
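
The obstacle-avoidance step relies on an artificial potential field. As a rough illustration only (the patent's force definitions and gains are not given), the sketch below uses the classic attractive/repulsive formulation for a 2D point robot, with hypothetical gains `k_att`, `k_rep` and influence radius `rho0`, and takes fixed-length steps along the net force.

```python
import math


def apf_step(pos, goal, obstacles, k_att=1.0, k_rep=100.0, rho0=2.0, step=0.1):
    """Move one fixed-length step along the net artificial-potential-field force."""
    # Attractive force pulls linearly toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        rho = math.hypot(dx, dy)
        # Repulsion acts only inside the influence radius rho0.
        if 0.0 < rho <= rho0:
            mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho ** 2
            fx += mag * dx / rho
            fy += mag * dy / rho
    norm = math.hypot(fx, fy)
    if norm < 1e-9:
        return pos  # at the goal or stuck in a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)


def plan(start, goal, obstacles, tol=0.15, max_iter=1000):
    """Follow the field until within tol of the goal (or give up)."""
    path = [start]
    pos = start
    for _ in range(max_iter):
        if math.dist(pos, goal) < tol:
            break
        nxt = apf_step(pos, goal, obstacles)
        if nxt == pos:
            break
        pos = nxt
        path.append(pos)
    return path
```

Plain potential fields can stall in local minima (for example, an obstacle directly between robot and goal), which is why `plan` stops when no step is possible; in practice the field is often paired with a global search over the map.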

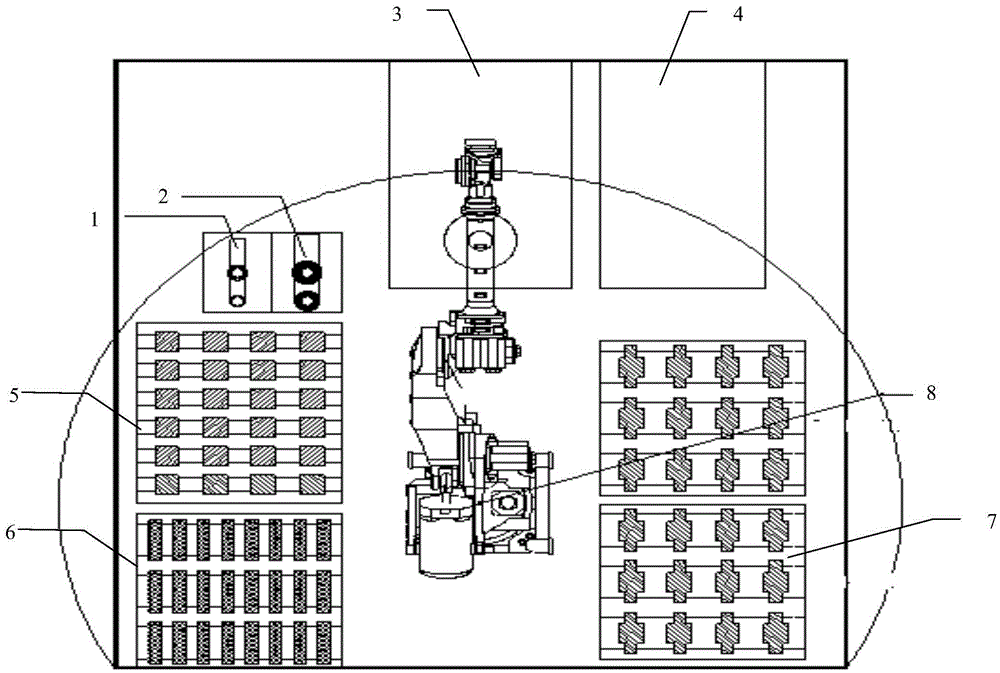

Visual system for ball picking robot in stadium

Status: Inactive | Publication: CN101537618A | Tags: Processing speed; Programme-controlled manipulator; Character and pattern recognition; Wireless video; Computer graphics (images)

The invention relates to a vision system for a ball-picking robot in a stadium, comprising a global camera, a robot-mounted camera, a wireless video transmission module, an image acquisition card and an image processor. The image acquisition card transmits the video signals acquired by the global camera and the robot-mounted camera to the image processor, which processes them and sends corresponding commands to the robot. The image processor identifies balls and the robot by color and shape, determines their relative positions in real time, and plans the robot's route from the position information provided by the identification algorithm, so that the robot completes the ball-picking task along an optimal route. The invention can control the robot to complete ball picking in a multi-ball environment, processes quickly, and is suitable for various stadium environments.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

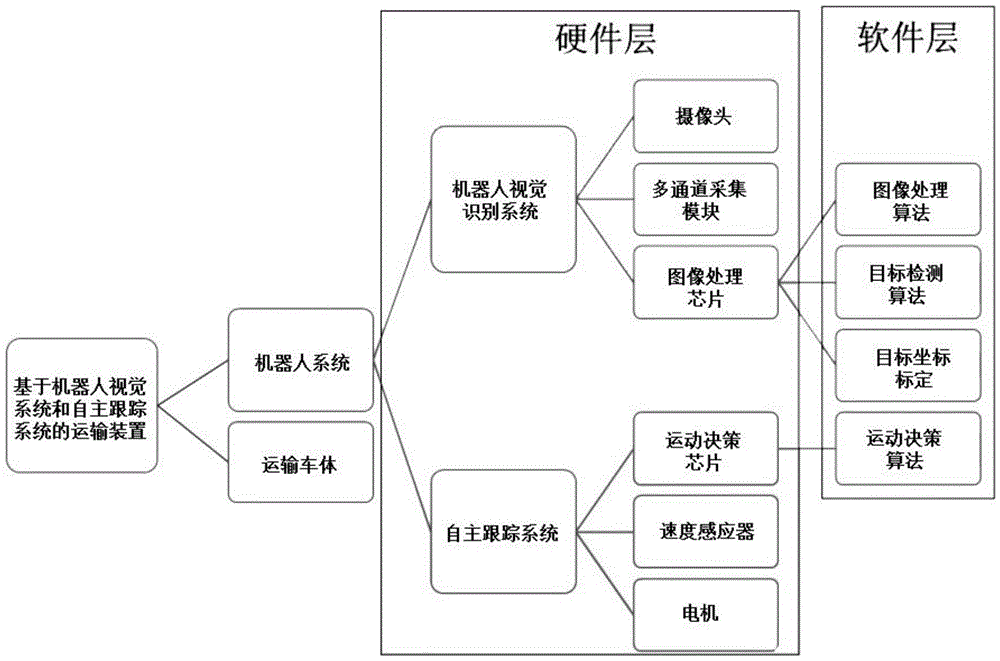

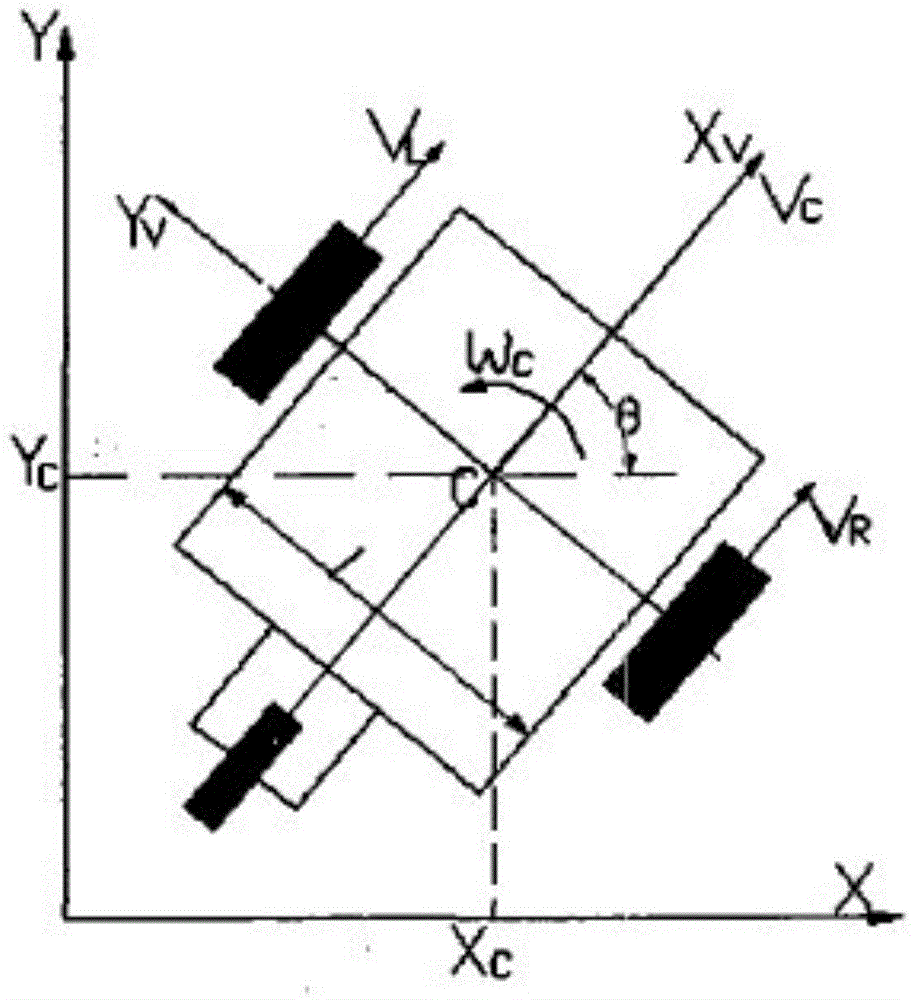

Transportation device based on robot vision system and independent tracking system

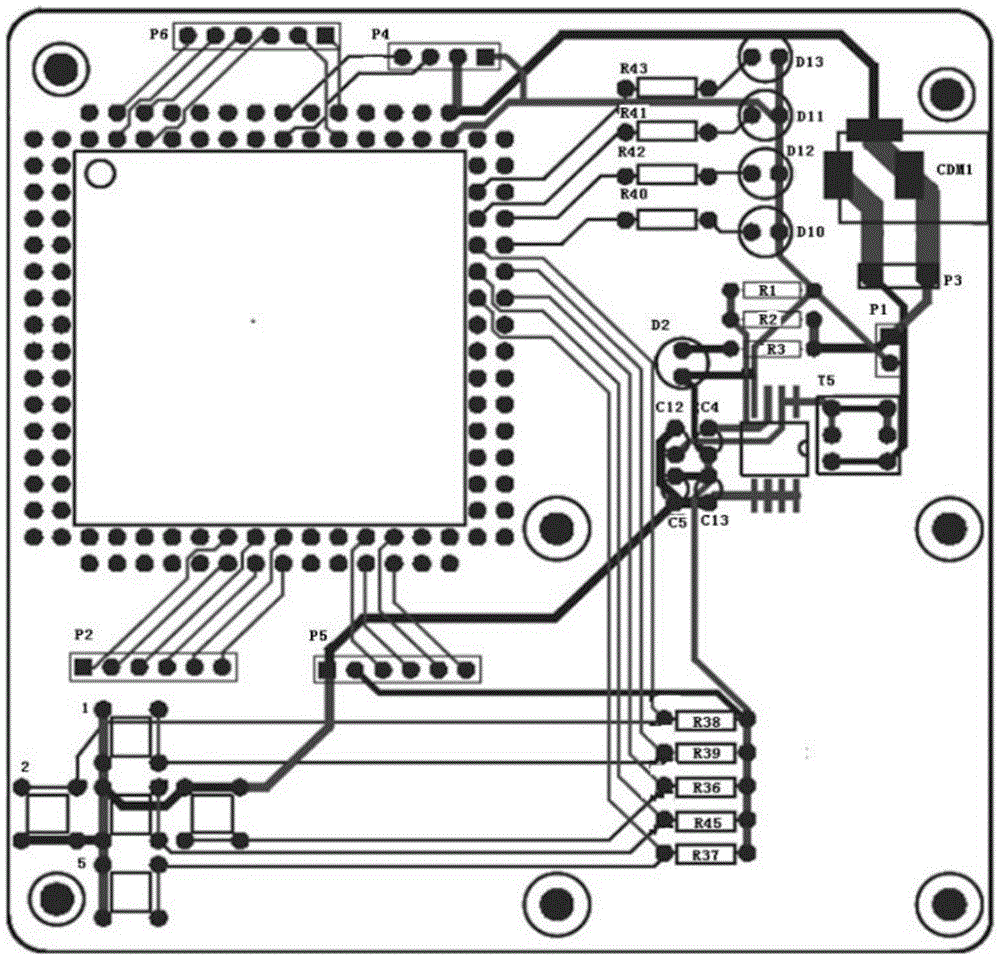

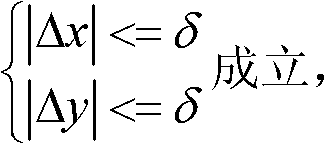

Status: Inactive | Publication: CN104950887A | Tags: Guarantee the effect of transportation; Guaranteed transportation efficiency; Position/course control in two dimensions; Proportion integration differentiation; Decision control

The invention discloses a transportation device based on a robot vision system and an independent tracking system. The device comprises a transport vehicle body carrying a control system, an initial-target information storage system, a vision recognition system, a decision control system and an independent tracking system, the last four each connected to the control system. The initial-target information storage system stores the body-feature information of the initial target and sets a luggage pickup code or sensing signal; the vision recognition system processes the extracted image information; the decision control system tracks and evaluates the initial target, optimizes the path, and generates and sends control instructions to the independent tracking system; and the independent tracking system applies differential speed regulation to the wheels of the vehicle body, driving it to follow the target. Vision recognition locks the positions of the target object and of the vehicle itself, decision control uses a genetic PID (proportional-integral-derivative) algorithm, and the moving mechanism carries out the tracking, so the device closely follows passengers.

Owner:CHONGQING UNIV
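
The abstract tunes a PID controller with a genetic algorithm and drives the wheels by differential speed regulation. The sketch below shows only the controller and the differential split, assuming the vision system supplies a heading error to the target in radians. The gains are placeholders where a genetic algorithm would search for values (for example, by minimizing accumulated tracking error), and `wheel_speeds` is a hypothetical helper.

```python
class PID:
    """Discrete PID controller; gains here are placeholders, not tuned values."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative kick on the very first sample.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wheel_speeds(base_speed, heading_error, pid, dt):
    """Differential speed regulation: a positive heading error (target to the
    left) speeds up the right wheel and slows the left one."""
    turn = pid.update(heading_error, dt)
    return base_speed - turn, base_speed + turn
```

Each control cycle, the tracking system would feed the latest heading error into `wheel_speeds` and send the two results to the wheel drives.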

Target tracking method of independent forestry robot

The invention discloses a target tracking method for an independent forestry robot consisting of a computer vision system, a digitally controlled pan-tilt unit and a central control computer. The method uses a moving-target detection part and a real-time pan-tilt motion control part. The moving-target detection part comprises an image preprocessing module, a motion information acquisition module using a multi-frame difference method, a moving-target judgment module and a target center coordinate calculation module; it detects the moving target and records the target's bounding rectangle and center coordinates. The real-time pan-tilt motion control part keeps the center of the target within an area near the image center. The method thus ensures that, throughout operation, the independent forestry robot keeps its operation target at or near the center of its vision system's image.

Owner:BEIJING FORESTRY UNIVERSITY
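
A minimal sketch of the detection chain, assuming the multi-frame difference is the common three-frame variant (the abstract does not say how many frames are used) and a simple sign-only pan-tilt command with a dead zone around the image center. Frames are plain 2-D lists of grey levels; all function names are hypothetical.

```python
def three_frame_difference(f1, f2, f3, threshold):
    """Mark pixels that changed both from f1 to f2 and from f2 to f3.

    The result locates the moving target as it appears in the middle frame f2.
    """
    return [
        [abs(b - a) > threshold and abs(c - b) > threshold for a, b, c in zip(r1, r2, r3)]
        for r1, r2, r3 in zip(f1, f2, f3)
    ]


def bounding_box_and_center(mask):
    """Return ((x_min, y_min, x_max, y_max), (cx, cy)), or None if nothing moved."""
    pts = [(x, y) for y, row in enumerate(mask) for x, moving in enumerate(row) if moving]
    if not pts:
        return None
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    box = (min(xs), min(ys), max(xs), max(ys))
    return box, ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)


def pan_tilt_command(center, width, height, dead_zone=0.1):
    """Return (pan, tilt) in {-1, 0, +1}: which way to move so the target centre
    returns to a dead zone around the image centre (+1 = right / down)."""
    errors = (center[0] - (width - 1) / 2, center[1] - (height - 1) / 2)
    limits = (dead_zone * width, dead_zone * height)
    return tuple(0 if abs(e) <= lim else (1 if e > 0 else -1) for e, lim in zip(errors, limits))
```

A real pan-tilt loop would scale the command by the error rather than use signs only; the dead zone keeps the unit from jittering when the target is already near the center.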

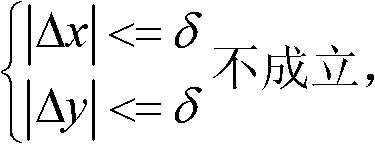

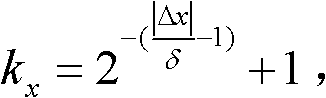

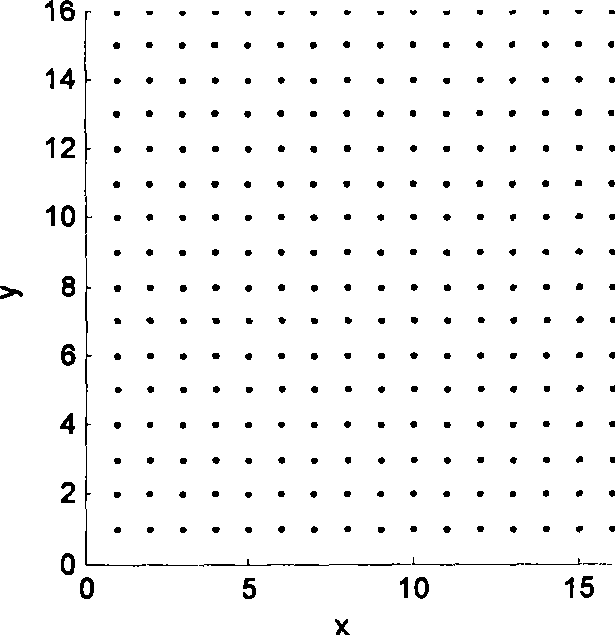

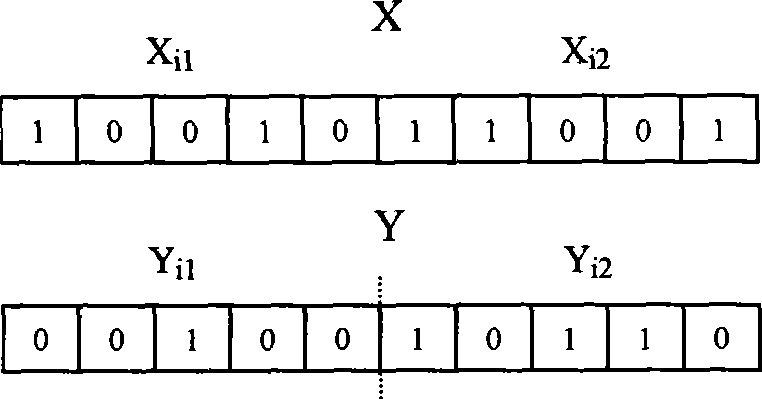

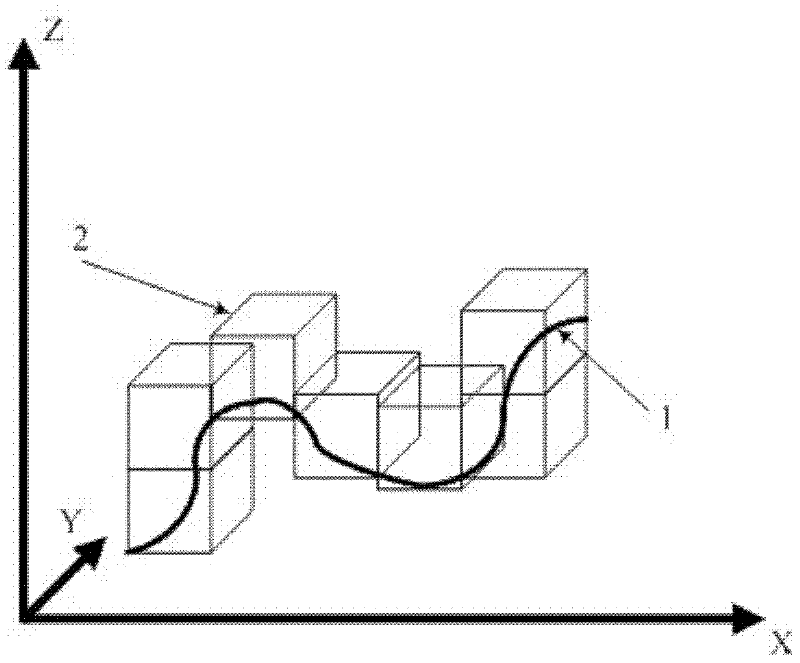

Mobile robot path planning method based on binary quantum particle swarm optimization

Status: Inactive | Publication: CN101387888A | Tags: Real-time; Easy to control; Computing models; Target-seeking control; Simulation; Global optimization

The invention discloses a mobile robot path planning method based on binary quantum particle swarm optimization, comprising the following steps: (1) simplifying the robot to a point moving in a two-dimensional space, and sensing the current positions of the robot and of the obstacles with the vision system; (2) processing all obstacles sensed by the robot's vision system into convex polygons; (3) discretizing the two-dimensional space into a series of grid cells and binary-encoding the eight possible motion directions of the robot at each cell; (4) defining the length of the path between the start point and the destination as the objective function to be solved; and (5) globally optimizing the objective function of step (4) with binary quantum particle swarm optimization, which suits the discrete nature of robot path planning, to obtain the optimal path. The method is simple, easy to implement, robust and efficient.

Owner:JIANGNAN UNIV
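
Steps (3) and (4) define the search space and the objective. The sketch below encodes each of the eight moves in three bits and scores a bit string by path length. The move ordering, the fixed-length bit string and the penalty terms for blocked cells or missing the goal are assumptions, and the binary quantum PSO update itself is not shown; any binary optimizer could minimize `path_cost`.

```python
import math

# Eight grid moves, indexed 0-7 so that each fits in three bits (assumed order).
MOVES = [(1, 0), (1, 1), (0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1)]


def decode(bits):
    """Split a binary string into 3-bit genes, each selecting one move."""
    usable = len(bits) - len(bits) % 3
    return [MOVES[int(bits[i:i + 3], 2)] for i in range(0, usable, 3)]


def path_cost(bits, start, goal, blocked, size, penalty=1000.0):
    """Objective to minimise: path length plus penalties for invalid moves
    and for failing to reach the goal on a size x size grid."""
    x, y = start
    length = 0.0
    for dx, dy in decode(bits):
        if (x, y) == goal:
            break  # remaining genes are ignored once the goal is reached
        nx, ny = x + dx, y + dy
        if not (0 <= nx < size and 0 <= ny < size) or (nx, ny) in blocked:
            length += penalty  # move leaves the map or enters an obstacle cell
            continue
        x, y = nx, ny
        length += math.hypot(dx, dy)
    if (x, y) != goal:
        length += penalty + math.dist((x, y), goal)
    return length
```

Diagonal moves cost `sqrt(2)` and straight moves cost 1, so minimizing `path_cost` favors the shortest valid grid path.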

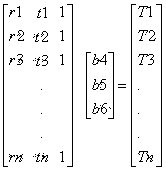

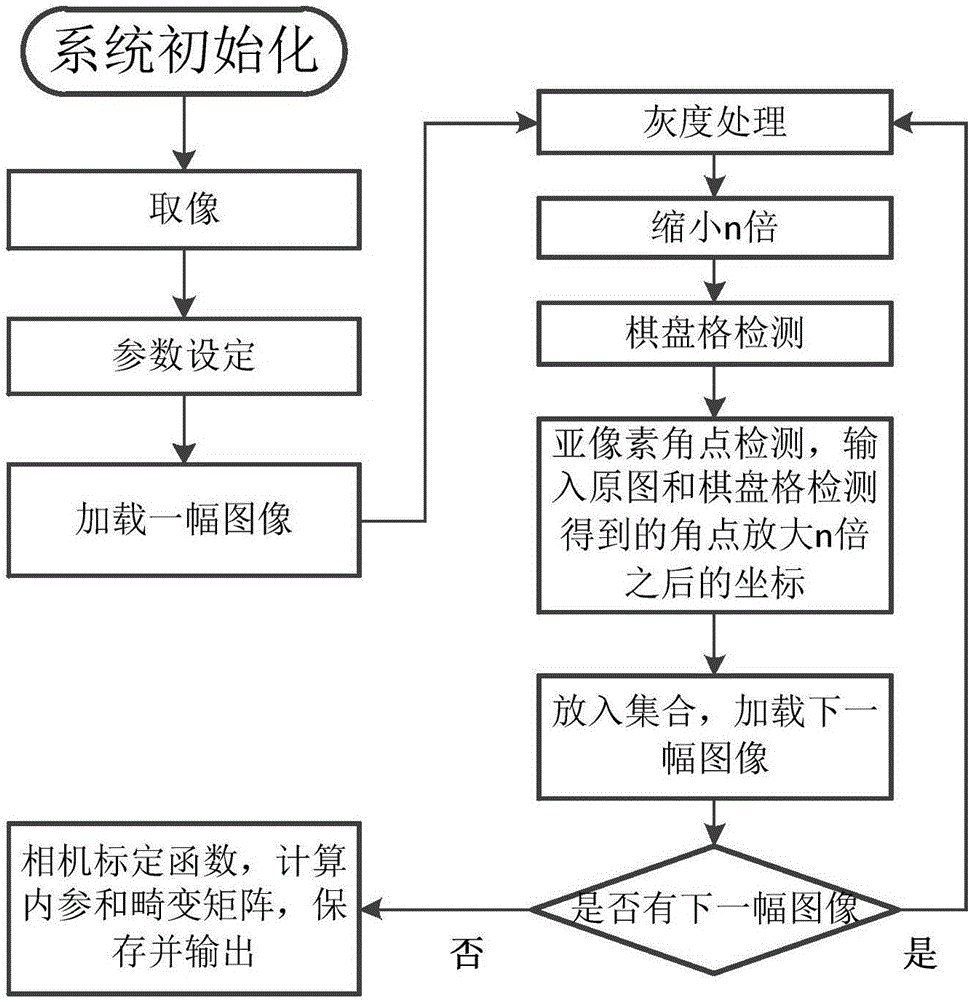

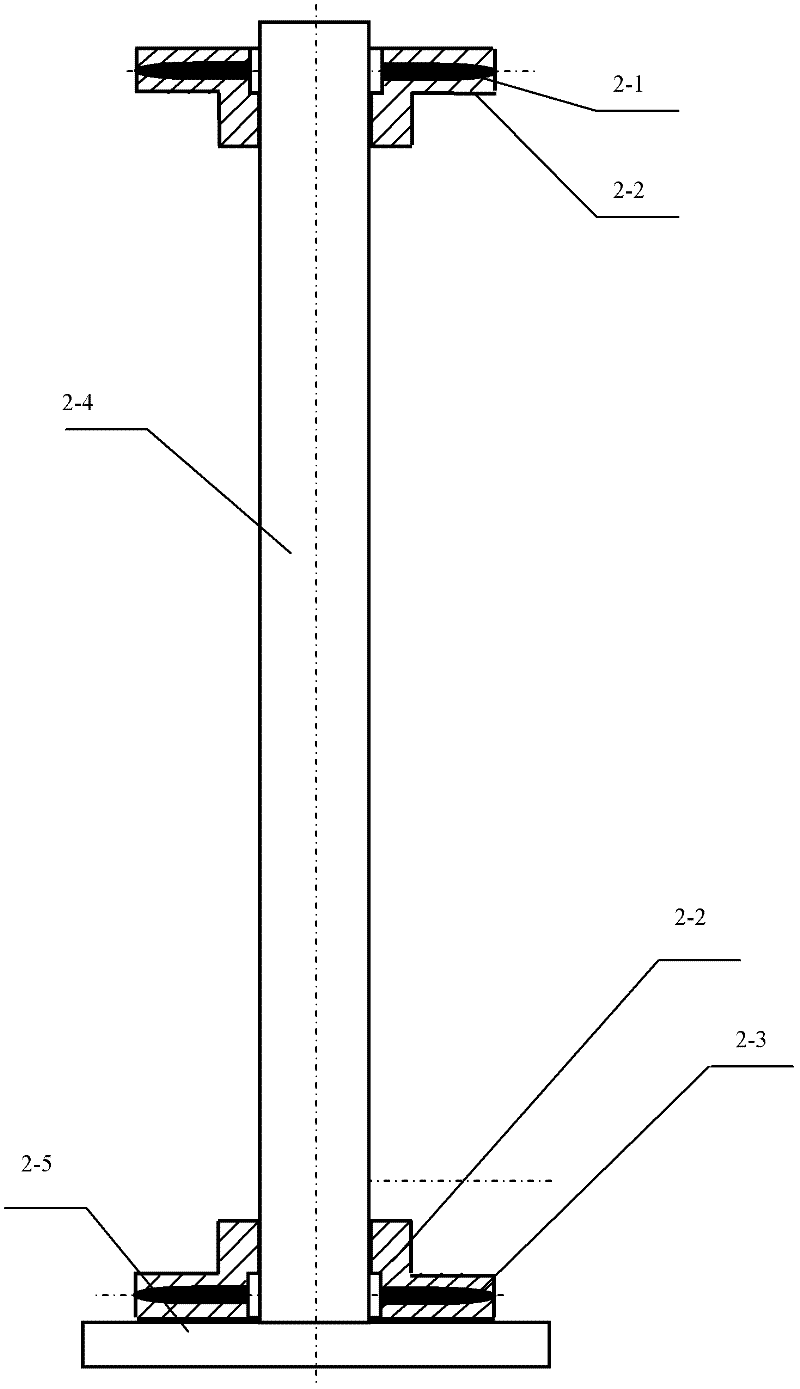

Rapid calibration method of robot visual system

Status: Inactive | Publication: CN104180753A | Tags: Realize automatic calibration; Achieve visual effects; Using optical means; Computer vision; Manipulator

The invention relates to a rapid calibration method for a robot vision system, in particular for calibration between a camera and a manipulator and between cameras. The method comprises arranging a calibration plate, setting movement rules for the manipulator, obtaining the coordinates of the calibration plate's sensing points and the corresponding vision-system coordinates, establishing a matrix mapping relationship from these coordinates, and solving for the transformation matrix.

Owner:GUANGDONG AOPUTE TECH CO LTD
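
The abstract stops at solving a transformation matrix from coordinate pairs. For a camera looking at a planar work surface, one simple instance is a least-squares 2D affine map from image pixels to robot coordinates, fitted from the calibration-plate correspondences. The sketch below assumes that planar case and uses hypothetical names; a full 3D camera-to-manipulator calibration would need a rigid or projective model instead.

```python
def solve_linear(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: add more or better-spread points")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_affine(image_pts, robot_pts):
    """Least-squares 2x3 affine matrix T with robot ~ T @ [u, v, 1]^T."""
    rows = [(u, v, 1.0) for u, v in image_pts]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    T = []
    for k in range(2):  # one row of T per robot coordinate (X, then Y)
        atb = [sum(r[i] * p[k] for r, p in zip(rows, robot_pts)) for i in range(3)]
        T.append(solve_linear(ata, atb))
    return T


def apply_affine(T, u, v):
    """Map an image point (u, v) to robot coordinates."""
    return tuple(t[0] * u + t[1] * v + t[2] for t in T)
```

At least three non-collinear point pairs are needed; using more pairs lets the least-squares fit average out detection noise.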

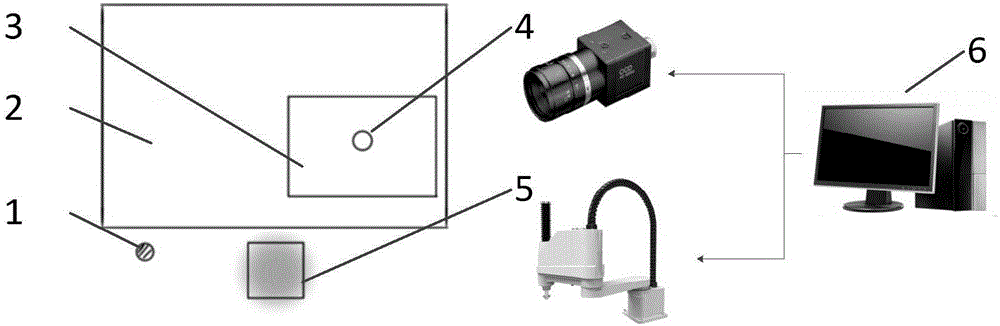

Quick calibration method for robot vision system

Status: Active | Publication: CN106780623A | Tags: Overcome the disadvantage of slow acquisition speed; Calculation speed; Image analysis; CCD camera; Visual perception

The invention discloses a quick calibration method for a robot vision system. The robot vision system comprises a robot, a working platform, a CCD camera and a computer; the computer controls the CCD camera to collect image data of the working platform and can also control the robot's movement. The method comprises the following steps: (1) mounting the CCD camera on the working platform so that, when a workpiece is placed on the platform, part of the workpiece lies within the camera's field of view, and collecting feature points of the workpiece in the camera coordinate system; (2) writing a distortion correction algorithm with OpenCV and rapidly correcting the CCD camera's distortion; (3) calibrating the scale relationships between the camera coordinate system and the robot's world coordinate system, and between the camera coordinate system and actual dimensions; and (4) calibrating the origin of the user coordinate system of the whole working platform. The calibration method is low-cost, easy to install, fast and easy to implement.

Owner:XIAMEN UNIV OF TECH

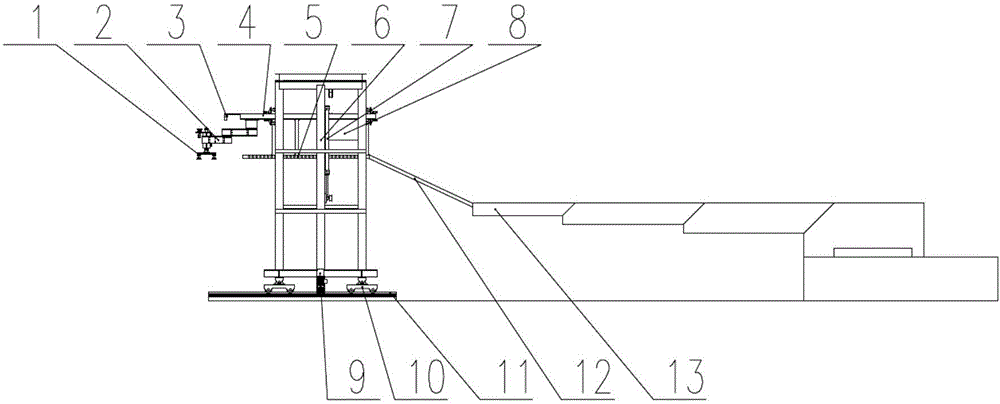

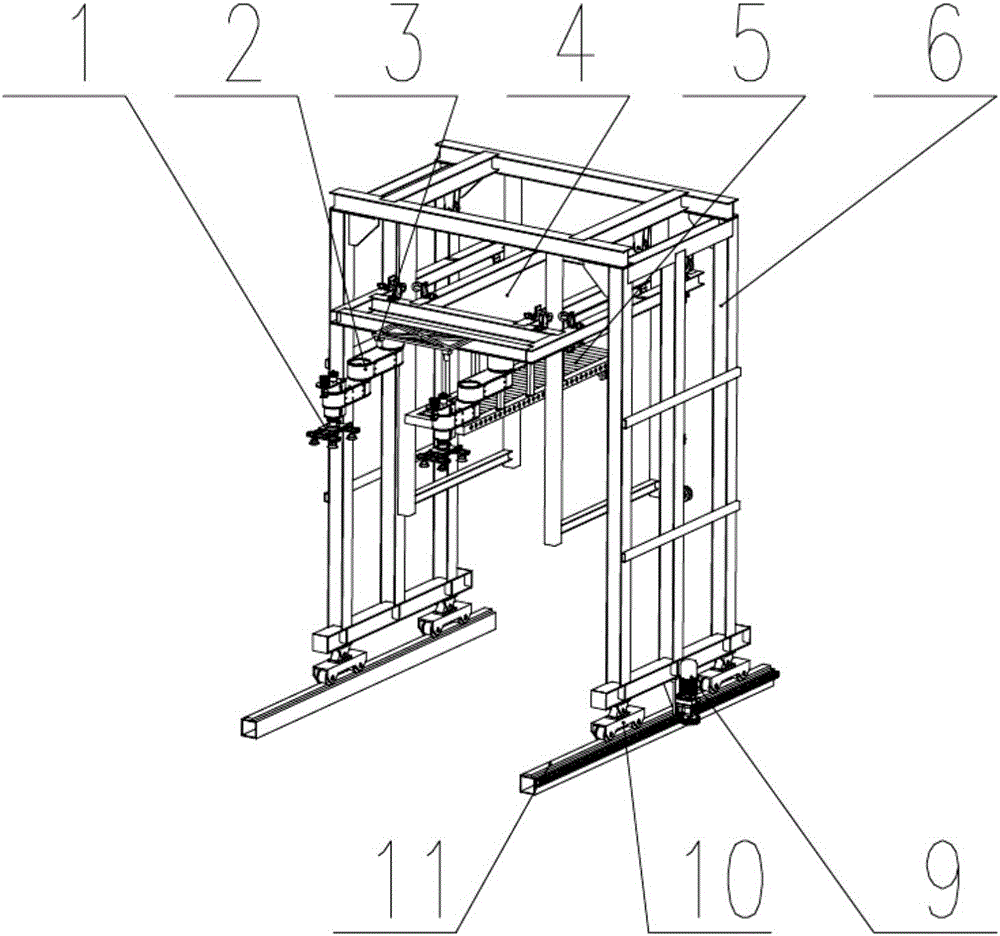

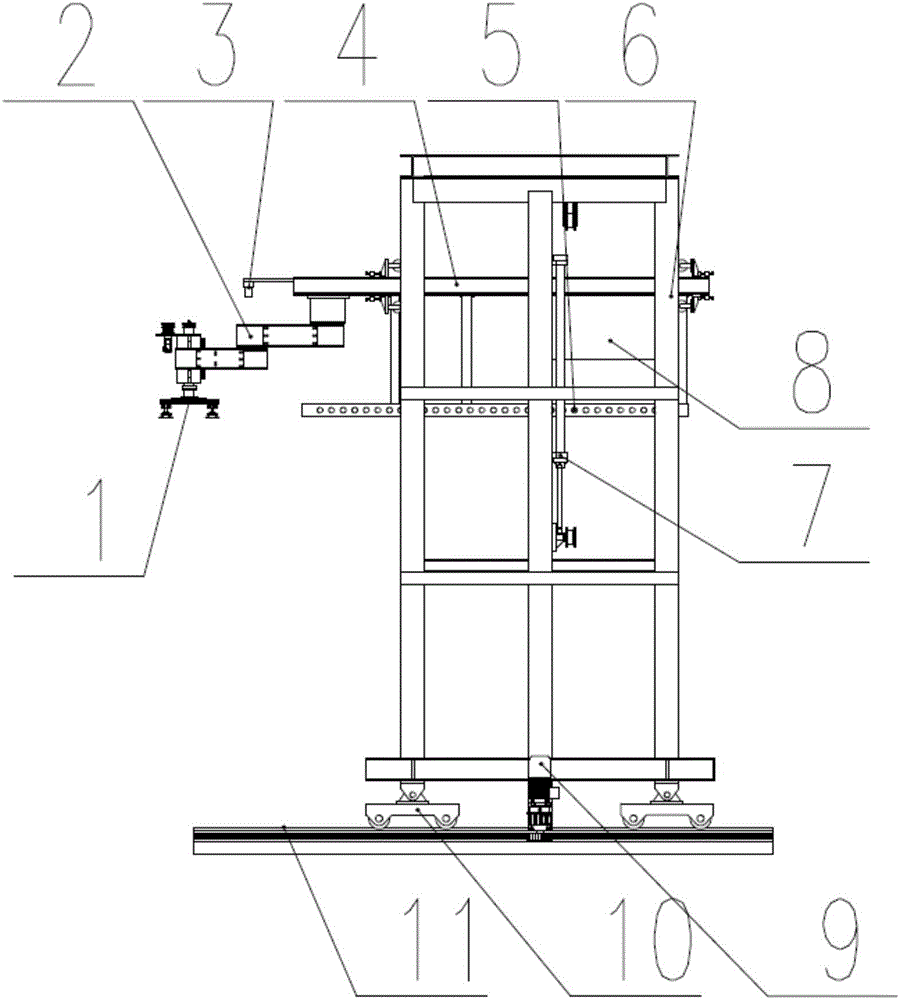

Automatic unloading robot system

The invention provides an automatic unloading robot system comprising a gantry-type traveling vehicle, a lifting platform, a robot, a roller conveyor, a transition conveyor belt, a telescopic belt conveyor, a robot vision system and a control system. The gantry vehicle straddles the boxcar and moves longitudinally along the freight car, which facilitates unloading; the lifting platform moves and locks in the vertical direction; the robot is mounted on the lifting platform, so its height follows the platform's position; the roller conveyor, transition conveyor belt and telescopic belt conveyor work together to convey the unloaded freight to a specified position; the robot vision system determines the gripping position of the freight and works with the robot; and the control system controls the automated operation of the whole system. The system can replace manual unloading and unstacking, saving time and labor and improving working efficiency.

Owner:SHANGHAI JIAO TONG UNIV

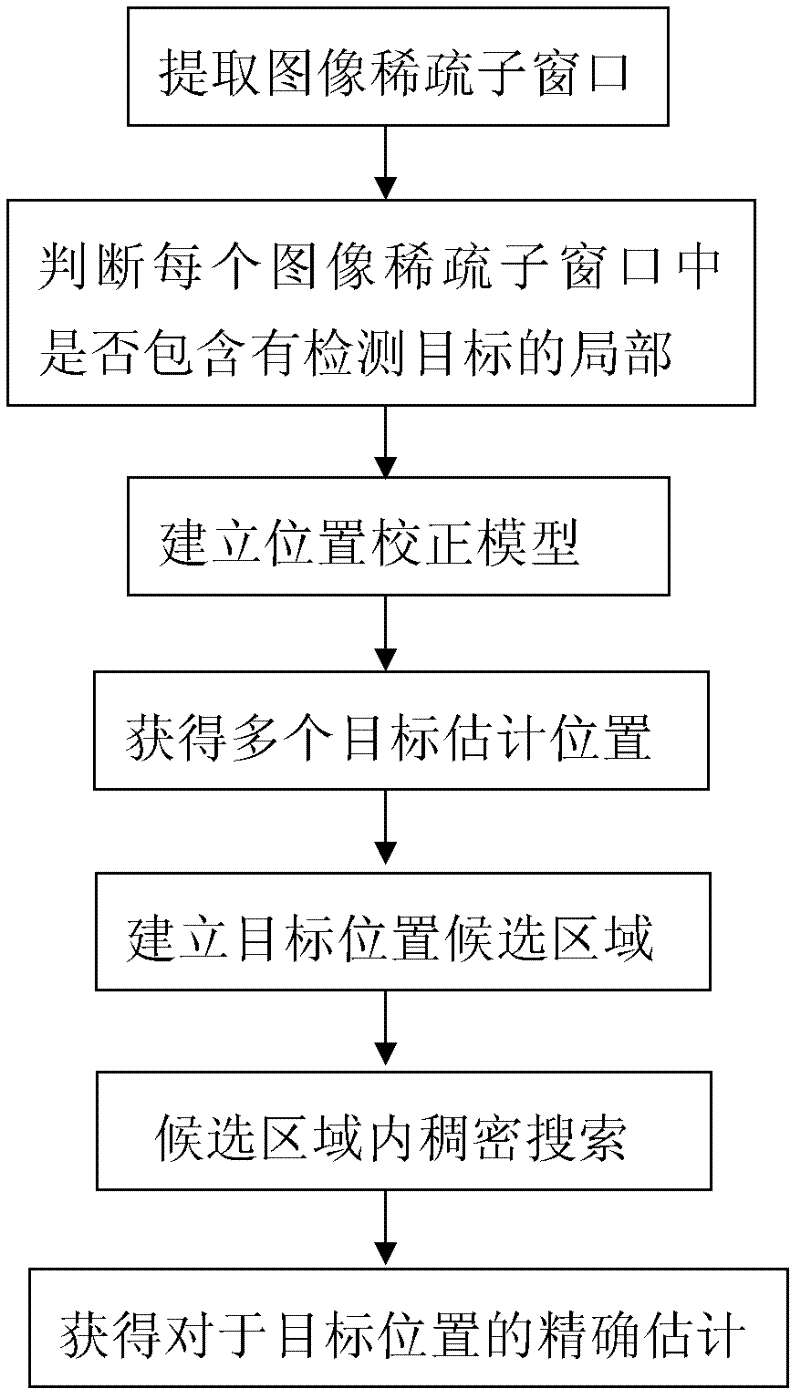

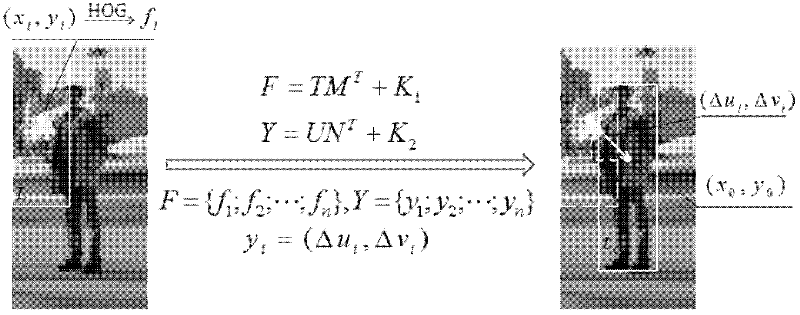

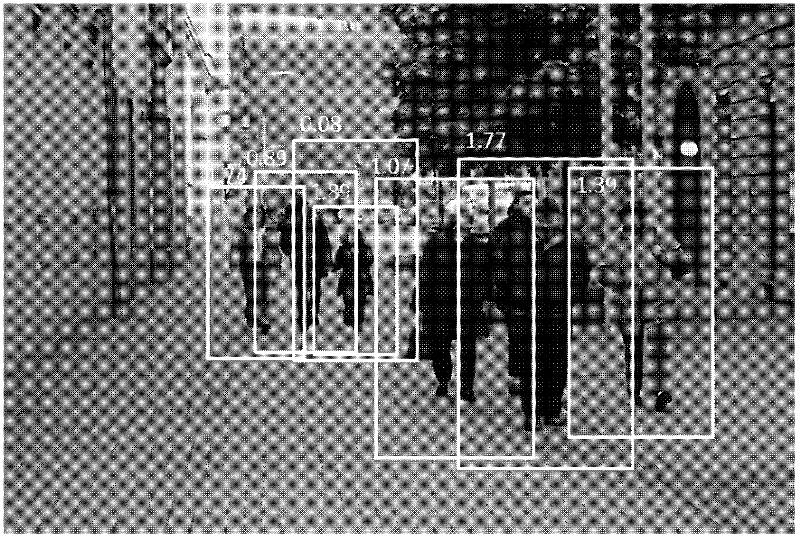

Pedestrian detection method based on position correction model

Status: Active | Publication: CN102609720A | Tags: Rapid positioning; Quickly discard; Character and pattern recognition; Video monitoring; Relative displacement

The invention discloses a rapid pedestrian detection method with three stages: sparse scanning, a position correction model, and dense search. Specifically, the image to be detected is scanned sparsely; HOG (histogram of oriented gradients) features are extracted from all sparse sub-windows and roughly classified to find every sub-window that may contain part of an object; a pre-learned position correction model estimates the relative displacement between each such sub-window and the object's true position; and candidate object regions are built from each sub-window's estimated object position and searched densely to obtain the object's accurate position. Because the method does not exhaustively examine every sub-window of the image, the number of search windows drops markedly, which is important for detection speed in video surveillance scenarios. The method can be widely applied to intelligent video surveillance systems, robot vision systems, driver-assistance systems and the like.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
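
The three-stage search can be sketched generically. In the version below, `coarse_score` stands in for the rough HOG classifier, `offset_model` for the pre-learned position correction model, and `fine_score` for the accurate classifier; all three are caller-supplied callables, and the strides, radius and threshold are illustrative values rather than the patent's.

```python
def sparse_positions(width, height, win, stride):
    """Top-left corners of a coarse grid of square windows."""
    return [(x, y) for y in range(0, height - win + 1, stride)
            for x in range(0, width - win + 1, stride)]


def detect(width, height, win, coarse_score, offset_model, fine_score,
           sparse_stride=16, radius=4, dense_stride=2, coarse_thresh=0.0):
    """Coarse-to-fine search: sparse scan, position correction, dense search.

    Returns (score, x, y) of the best window found, or None.
    """
    best = None
    for x, y in sparse_positions(width, height, win, sparse_stride):
        if coarse_score(x, y) <= coarse_thresh:
            continue  # quickly discard windows unlikely to contain a pedestrian
        dx, dy = offset_model(x, y)  # predicted integer shift towards the true position
        cx, cy = x + dx, y + dy
        # Dense search only inside a small candidate region around (cx, cy).
        for yy in range(cy - radius, cy + radius + 1, dense_stride):
            for xx in range(cx - radius, cx + radius + 1, dense_stride):
                if 0 <= xx <= width - win and 0 <= yy <= height - win:
                    s = fine_score(xx, yy)
                    if best is None or s > best[0]:
                        best = (s, xx, yy)
    return best
```

With an accurate correction model, the dense search evaluates only a handful of windows around each surviving sparse window instead of scanning the whole image at a fine stride.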

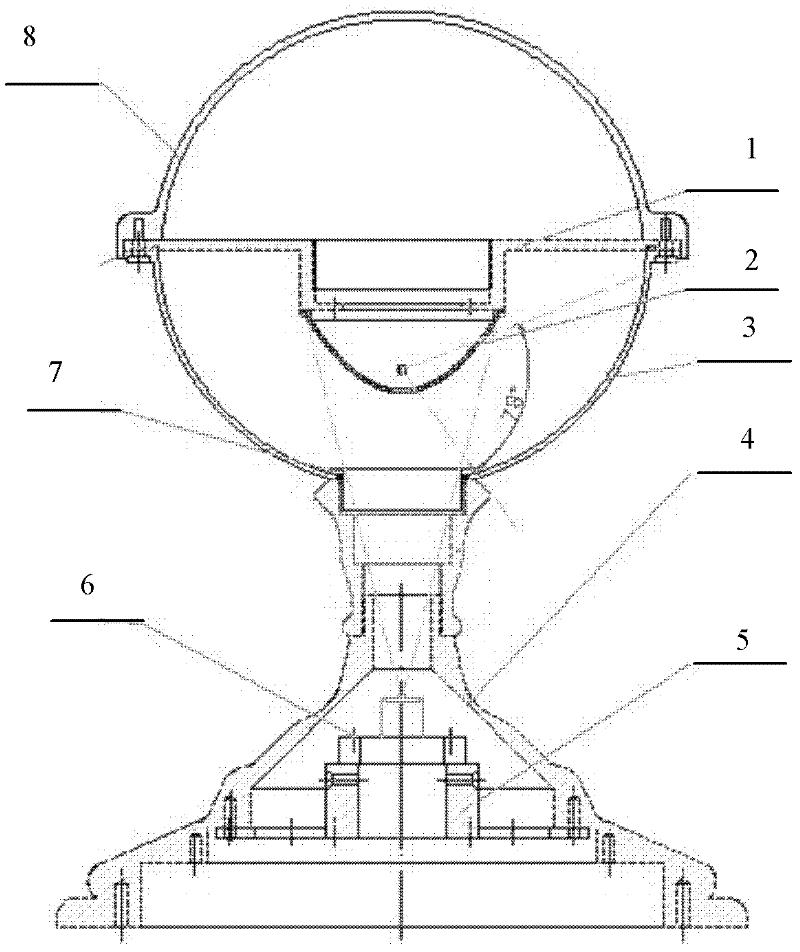

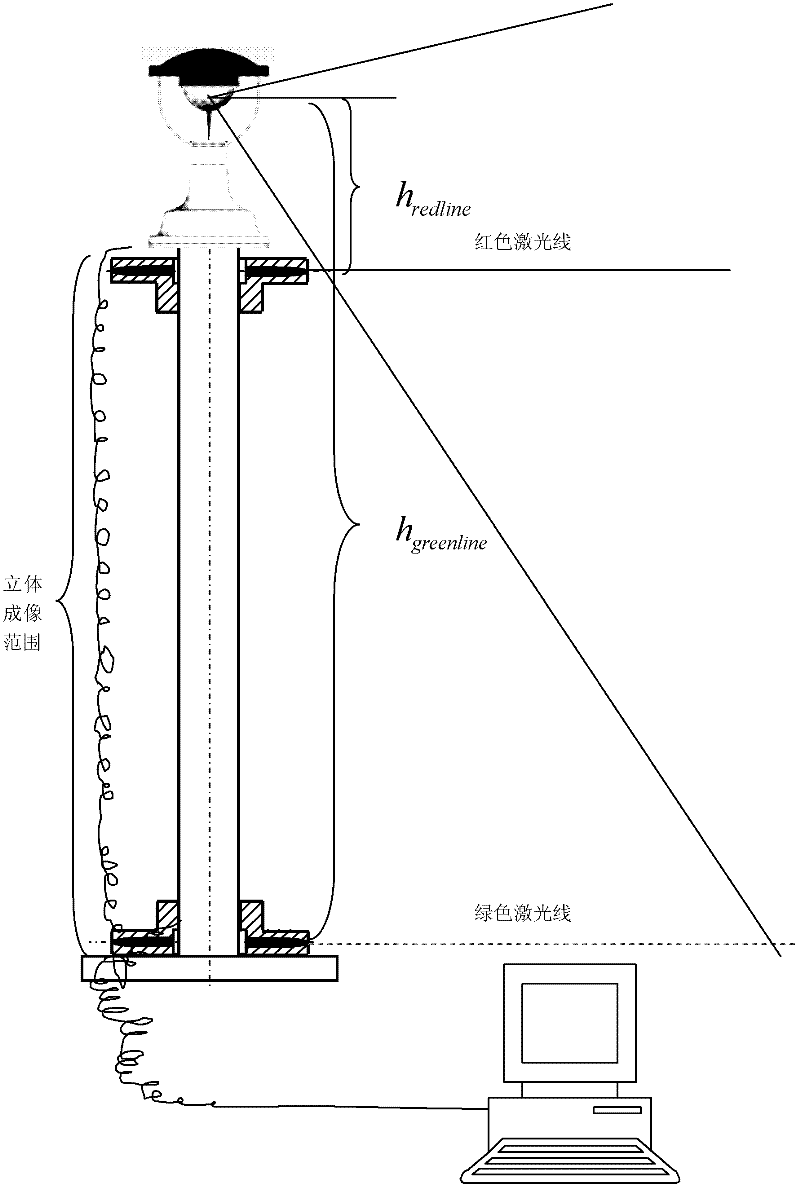

Vision system based on active panoramic vision sensor for robot

Status: Active | Publication: CN102650886A | Tags: Realistic; Reduce resource consumption; Position/course control in two dimensions; Laser scanning; Laser light

The invention discloses a vision system for a robot based on an active panoramic vision sensor. The vision system comprises an omnidirectional vision sensor, a key-surface laser light source, and a microprocessor that performs three-dimensional stereo imaging measurement, obstacle detection, obstacle avoidance and navigation on the omnidirectional image. The omnidirectional vision sensor and the key-surface laser light source are mounted on the same axis. The microprocessor contains a video image reading module, an omnidirectional vision sensor calibration module, a bird's-eye-view conversion module, an omnidirectional surface laser information reading module, an obstacle feature point calculation module, a module for estimating the spatial distribution of obstacles between key surfaces, an obstacle contour generation module, and a storage unit. Spatial data points on the key surface scanned by the laser are fused with the corresponding pixels of the omnidirectional image, so that each spatial point carries both geometric and color information, and a quasi-three-dimensional omnidirectional map of an unknown environment is finally built. The system reduces computational resource consumption, completes measurement quickly, and facilitates robot obstacle avoidance and navigation.

Owner:ZHEJIANG UNIV OF TECH

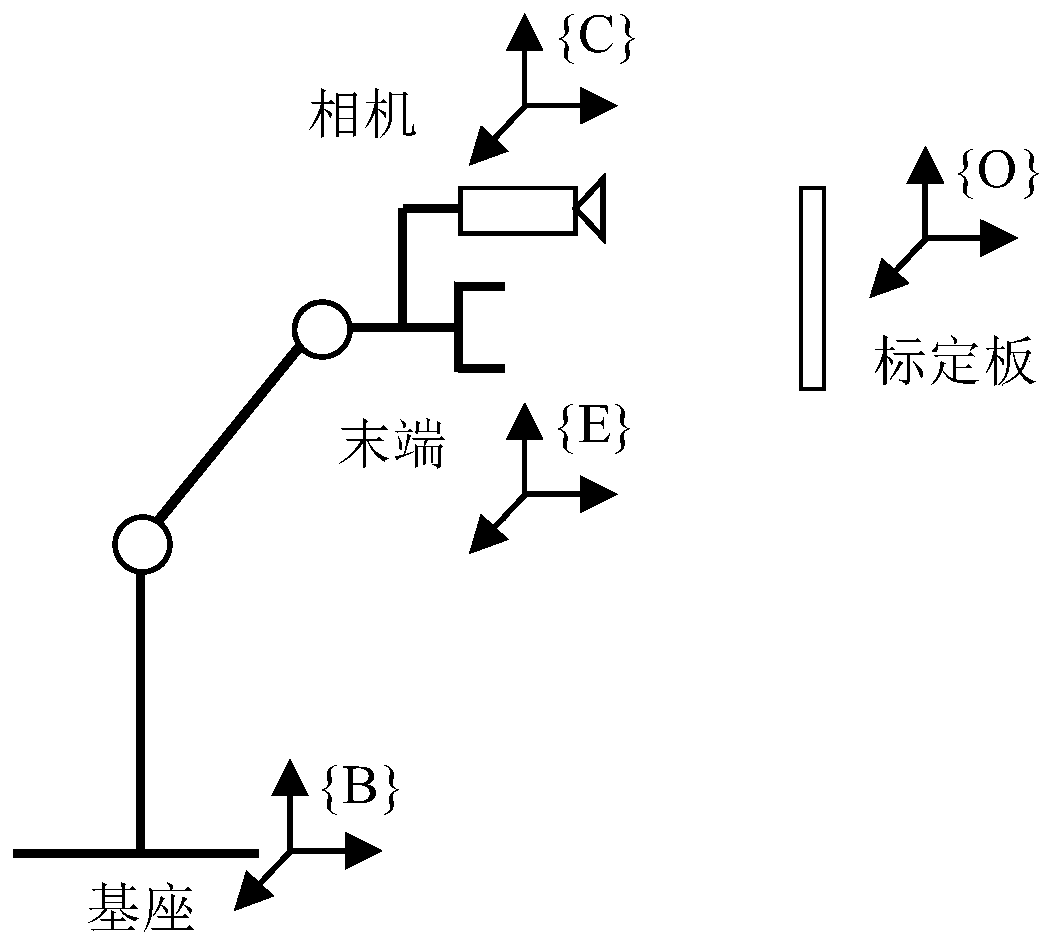

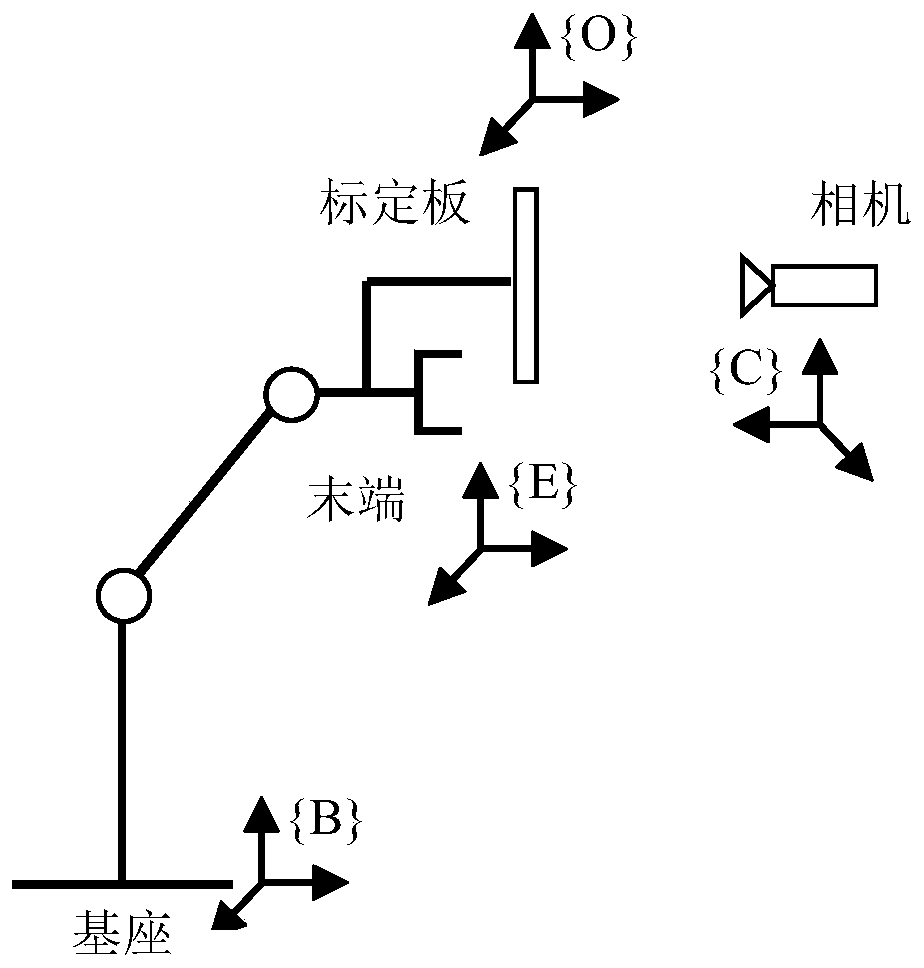

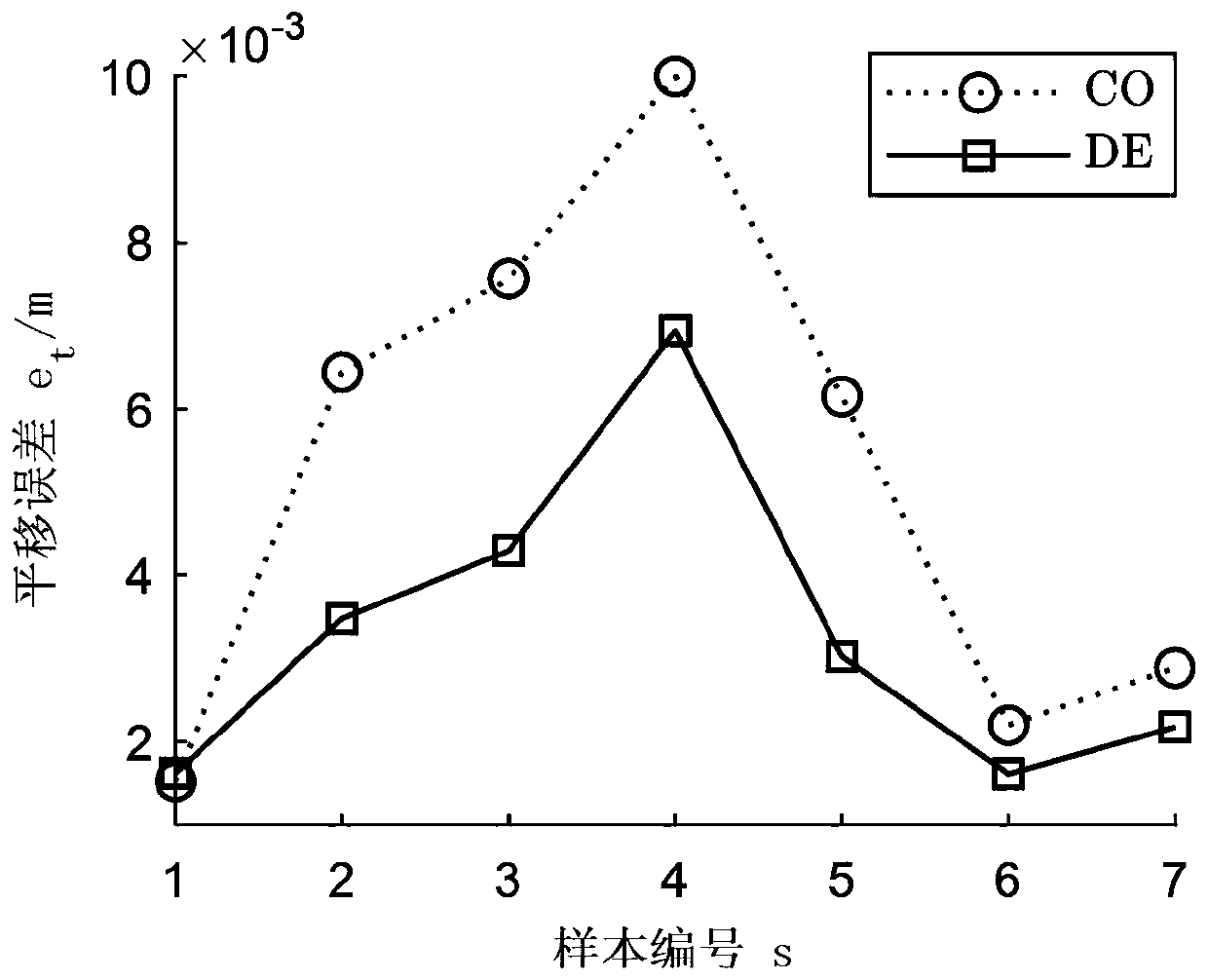

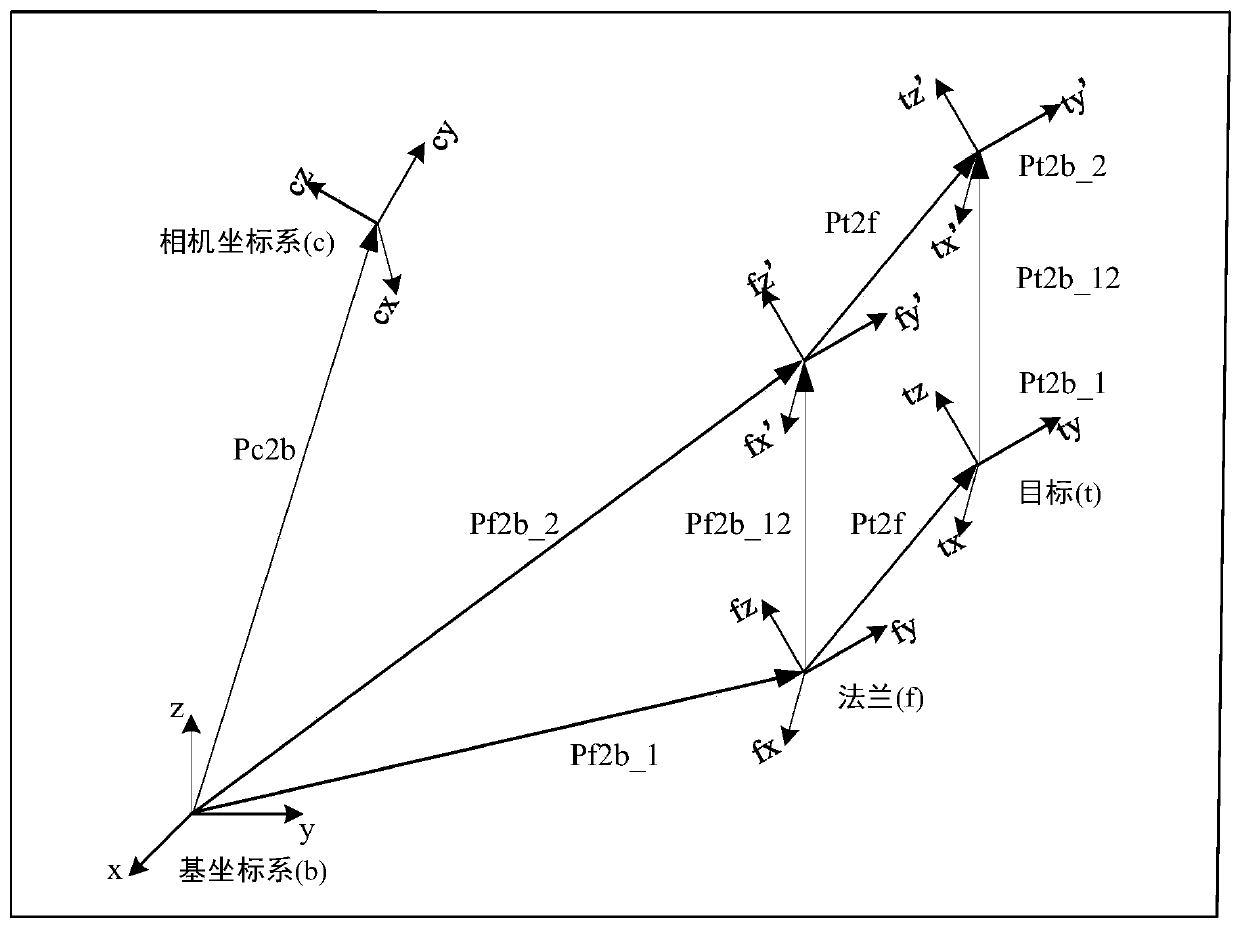

Hand-eye calibration parameter identification method, hand-eye calibration parameter identification system based on differential evolution algorithm and medium

Status: Active | Publication: CN110842914A | Tags: Guaranteed global optimality; Avoid calculation; Programme-controlled manipulator; Pattern recognition; Camera image

The invention provides a hand-eye calibration parameter identification method and system based on a differential evolution algorithm, and a medium. The method comprises the following steps: moving the robot end of a robot vision system to different poses to collect robot joint data and camera image data; calculating the pose matrix of the robot end relative to the robot base coordinate system and the pose matrix of the calibration board relative to the camera coordinate system; defining calibration error functions for the rotational and translational components; formulating and solving a multi-objective optimization function for the hand-eye calibration problem; and calculating the calibration errors of the rotational and translational parts of the robot vision system to verify and identify the optimal hand-eye calibration parameters. The calibration result is globally optimal and lies on the special Euclidean group SE(3), so no additional orthogonalization of the obtained rotation matrix needs to be computed.

Owner:SHANGHAI JIAO TONG UNIV
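
Hand-eye calibration is commonly written as AX = XB, where A is the relative motion of the robot end between two poses, B the corresponding relative motion observed by the camera, and X the unknown hand-eye transform. The sketch below computes one plausible pair of rotational and translational error terms for a candidate X; the patent's exact error functions are not given. A differential evolution optimizer would minimize these terms over X, for example parameterized by three angles and a translation so that every candidate stays a valid rigid transform. All helper names are hypothetical.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def hand_eye_errors(motions, R_x, t_x):
    """Rotational and translational residuals of AX = XB.

    motions: list of ((R_a, t_a), (R_b, t_b)) relative-motion pairs.
    """
    rot_err = trans_err = 0.0
    for (R_a, t_a), (R_b, t_b) in motions:
        # Rotation part: R_a R_x should equal R_x R_b (squared Frobenius norm).
        lhs, rhs = matmul(R_a, R_x), matmul(R_x, R_b)
        rot_err += sum((lhs[i][j] - rhs[i][j]) ** 2 for i in range(3) for j in range(3))
        # Translation part: R_a t_x + t_a should equal R_x t_b + t_x.
        p = [a + b for a, b in zip(matvec(R_a, t_x), t_a)]
        q = [a + b for a, b in zip(matvec(R_x, t_b), t_x)]
        trans_err += sum((pi - qi) ** 2 for pi, qi in zip(p, q))
    return rot_err, trans_err
```

Keeping the rotation and translation residuals separate matches the abstract's two error functions and lets a multi-objective optimizer trade them off explicitly.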

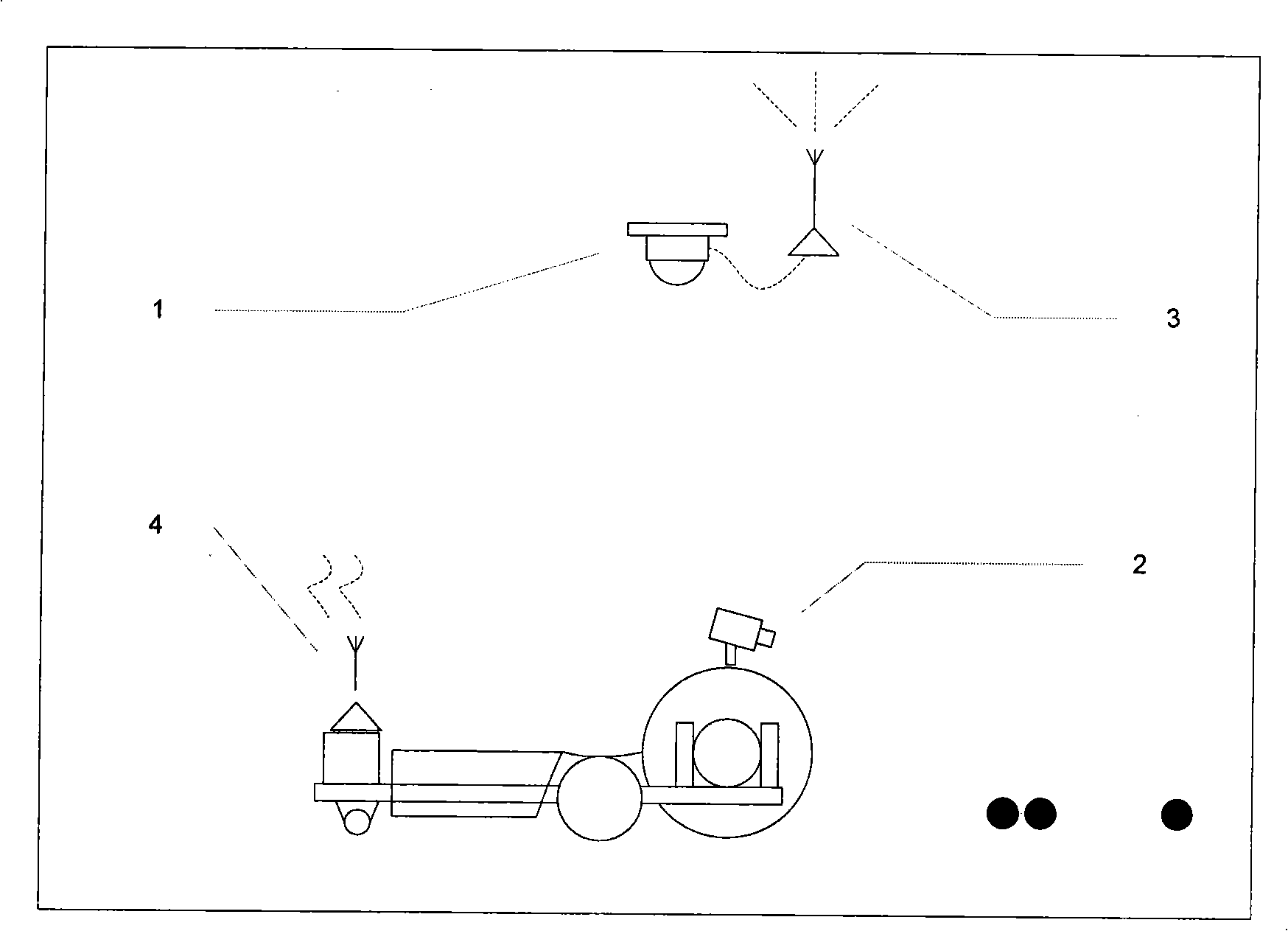

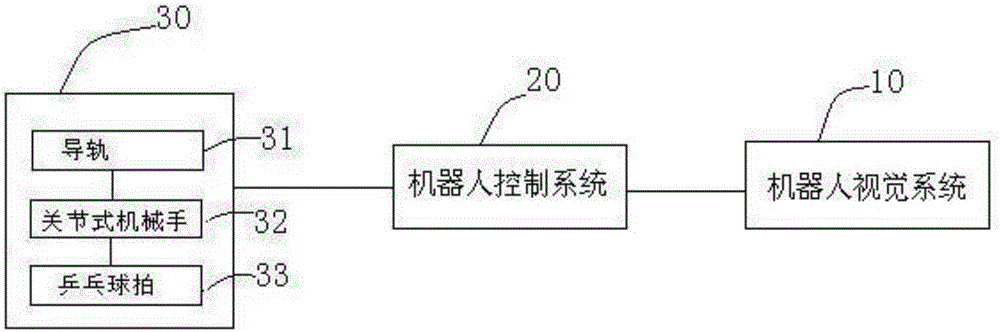

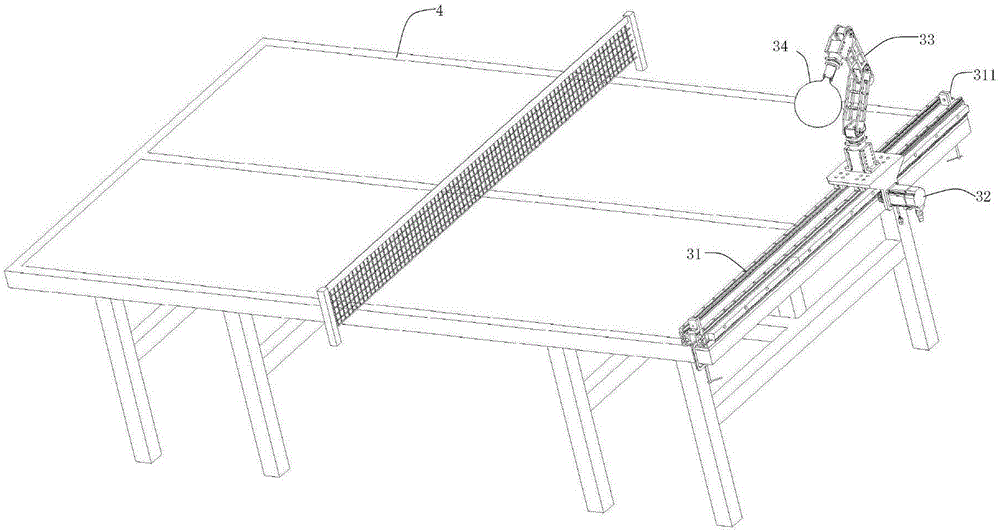

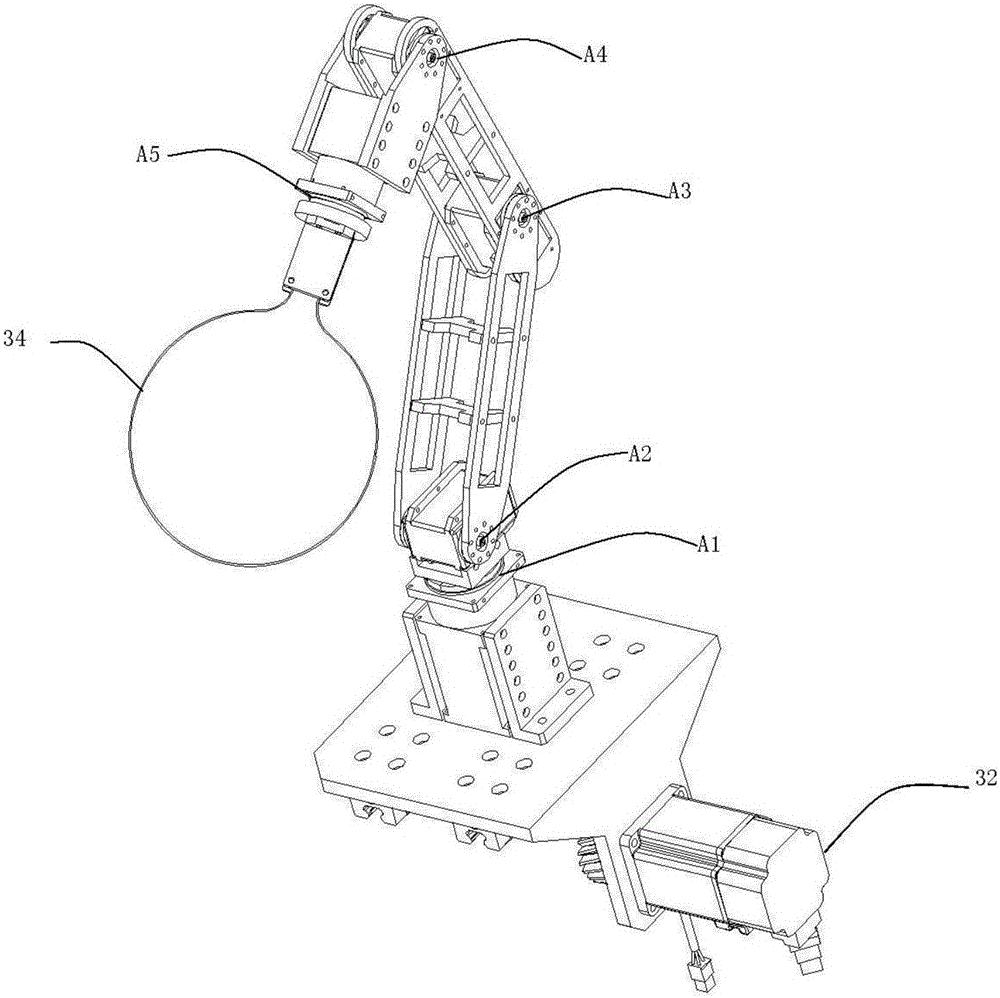

Table tennis robot and control method thereof

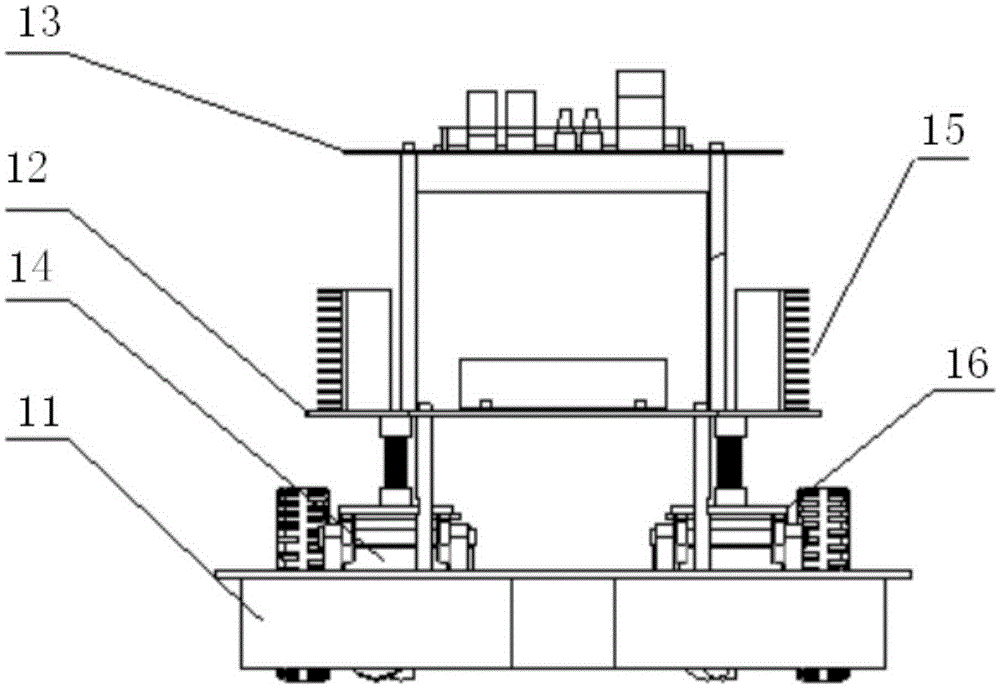

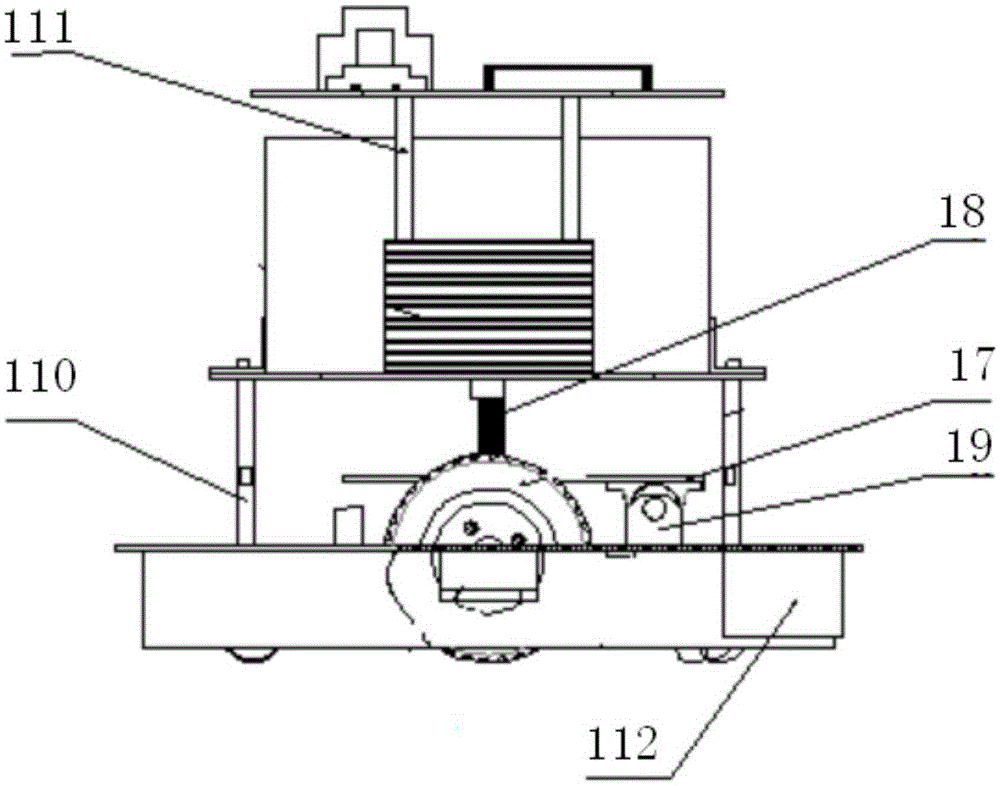

Status: Active | Publication: CN106426200A | Tags: Expand coverage; Programme-controlled manipulator; Microchiroptera; Robot control system

The invention discloses a table tennis robot and its control method. The robot comprises a robot body, a robot vision system and a robot control system. The robot body includes a guide rail fixed along the lateral side of the table tennis table, an articulated manipulator mounted on the guide rail and movable along it, and table tennis paddles mounted on the manipulator. The robot vision system obtains real-time image data of the ball. Based on that image data, the robot control system computes the manipulator's displacement along the guide rail and outputs a guide-rail motion control instruction that moves the manipulator along the rail; it also computes the relative angular displacements between all adjacent arm links of the manipulator and outputs joint motion control instructions that drive the rotation of each joint.

Owner:SHENZHEN DOCTORS OF INTELLIGENCE & TECH CO LTD

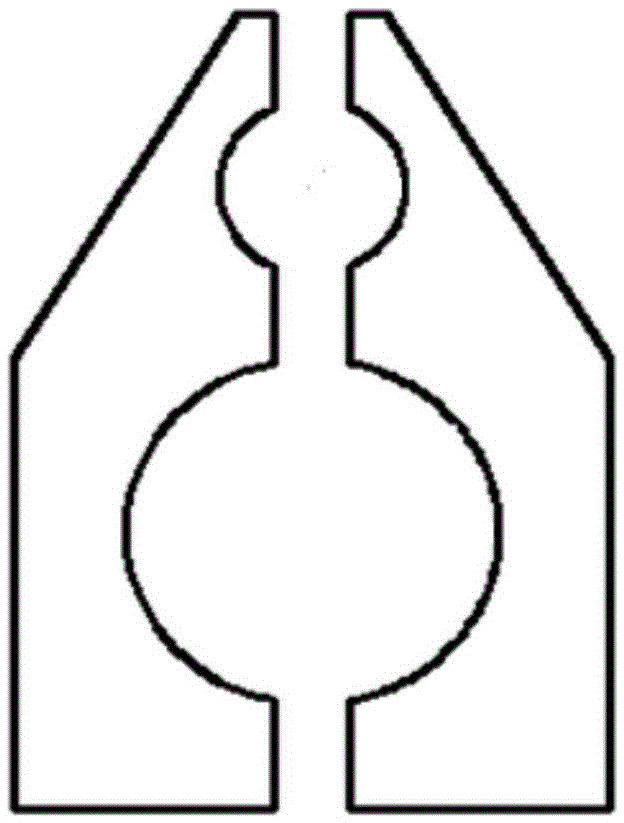

Visual-assembly production line of motor rotor and assembly process

Status: Active | Publication: CN105656260A | Tags: Realize real-time deviation correction; Avoid problems such as low work efficiency; Manufacturing stator/rotor bodies; Location detection; Production line

The invention relates to a visual-assembly production line for motor rotors and an assembly process. The production line comprises automatic shaft-sleeve discharging equipment, automatic end-plate discharging equipment, a single-arm hydraulic press, magnetic-steel embedding equipment, a rotor-core pallet, a rotating-shaft pallet, a pressed-finished-product pallet, a robot, a PLC (programmable logic controller), an industrial camera, a ring light source and an industrial panel computer. The robot, the PLC, the single-arm hydraulic press, the shaft-sleeve discharging equipment, the rotor-core pallet, the rotating-shaft pallet, the pressed-finished-product pallet, the end-plate discharging equipment and the magnetic-steel embedding equipment form the robot execution system; the industrial camera, the ring light source and the industrial panel computer form the robot's vision system. The panel computer runs vision software that recognizes the position of the workpiece; the ring light source is fixed on the industrial camera; and the camera and light source are mounted coaxially on the robot's mechanical arm and are perpendicular to the workpiece surface when detecting the workpiece position.

Owner:HEBEI UNIV OF TECH +1

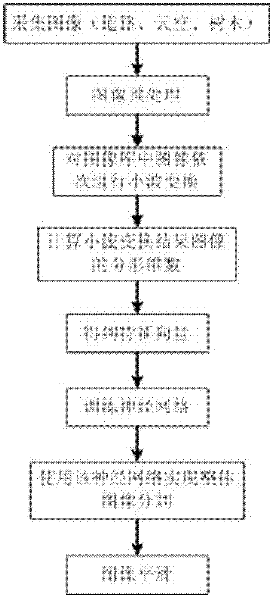

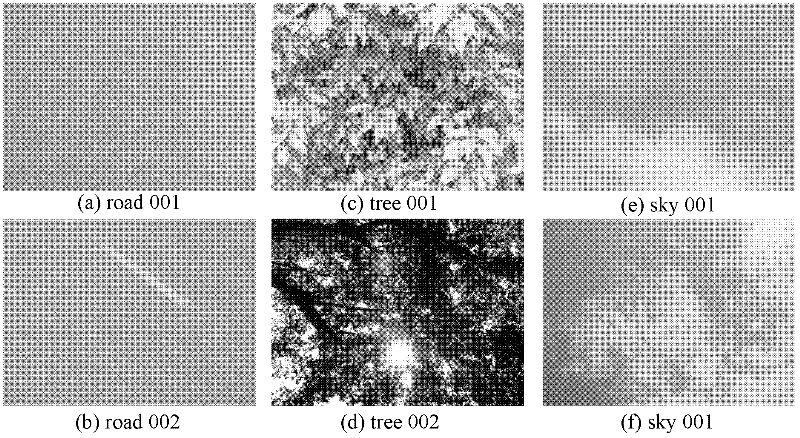

Robot vision image segmentation method based on multi-scale fractal dimension and neural network

Status: Inactive | Publication: CN102521831A | Tags: Effective segmentation; Splitting speed is fast; Image analysis; Biological neural network models; Sky; Image segmentation

The invention discloses a robot vision image segmentation method based on multi-scale fractal dimension and a neural network, in the technical field of robot vision. The method combines image wavelet transformation with fractal dimension to segment images, and uses a neural network to classify which image region the extracted features belong to, enabling fast image segmentation. It exploits the property that the surface textures of different natural objects have different fractal dimensions, and can effectively segment three kinds of regions: sky, trees and roads. In addition, segmentation is faster when it builds on existing neural network training results, and the method is suitable for a robot vision system that is in motion.

Owner:NANJING UNIV OF INFORMATION SCI & TECH
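
The segmentation relies on fractal dimension as a texture feature. One standard estimator is box counting, sketched below on a binary mask. How the patent combines it with wavelet sub-bands and multiple scales, and which neural network classifies the resulting features, is not specified, so this only illustrates the feature itself; the function names are hypothetical.

```python
import math


def box_count(mask, size):
    """Number of size x size boxes containing at least one foreground pixel."""
    h, w = len(mask), len(mask[0])
    count = 0
    for y0 in range(0, h, size):
        for x0 in range(0, w, size):
            if any(mask[y][x] for y in range(y0, min(y0 + size, h))
                   for x in range(x0, min(x0 + size, w))):
                count += 1
    return count


def box_counting_dimension(mask, sizes=(1, 2, 4, 8)):
    """Slope of log N(s) versus log(1/s), fitted by least squares.

    Assumes the mask has at least one foreground pixel (log(0) is undefined).
    """
    xs = [math.log(1.0 / s) for s in sizes]
    ys = [math.log(box_count(mask, s)) for s in sizes]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den
```

A filled region gives a dimension near 2 and a thin line near 1; natural textures such as foliage fall in between, which is what makes the value useful for telling sky, trees and road apart.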

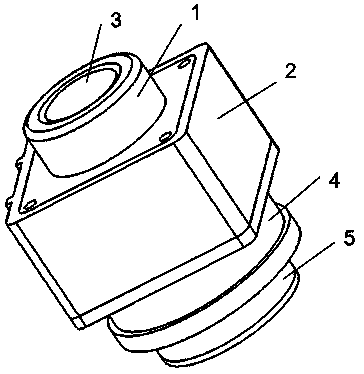

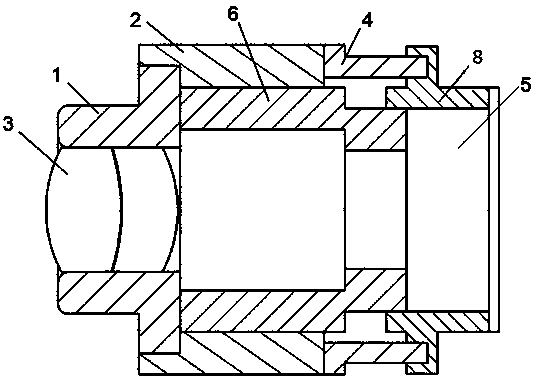

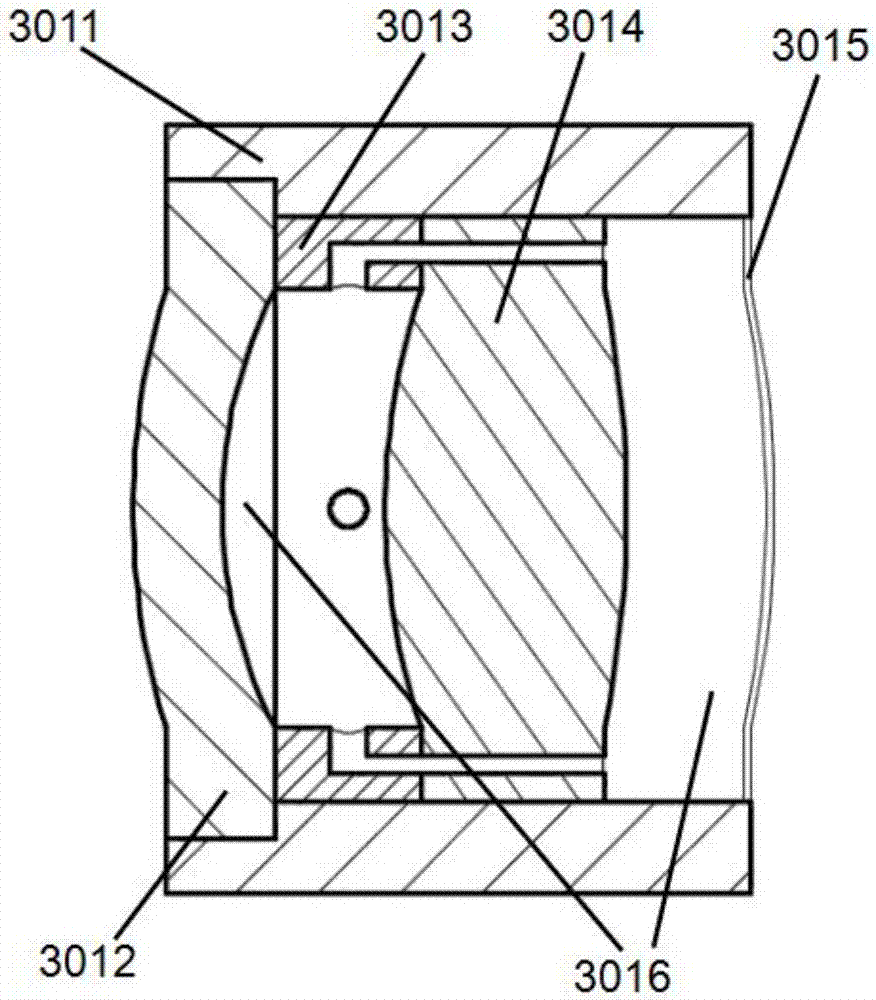

Bionic zoom lens and driving device thereof

The invention discloses a bionic zoom lens and its driving device. A cemented doublet objective lens and a colloid lens serve as the refractive elements. Mimicking the focusing characteristics of the cornea and crystalline lens of the human eye, the cemented doublet acts as the first lens unit and pre-refracts the incoming light, while the colloid lens acts as the second lens unit and mimics the crystalline lens. An objective lens holder and a fixing sleeve are installed at the two ends of a voice coil motor; the doublet is mounted in the holder; a press ring is threaded onto the inner ring of the voice coil motor with its rear face contacting the front surface of the colloid lens; and the fixing sleeve is connected to the colloid lens housing. The inner ring of the voice coil motor drives the press ring to squeeze the front surface of the colloid lens, changing its surface curvature and achieving continuous zooming over the designed zoom range. The lens and its driving device offer a stable optical axis, good imaging quality and fast response, and can be widely used in robot vision systems and many modern imaging systems.

Owner:ZHEJIANG UNIV

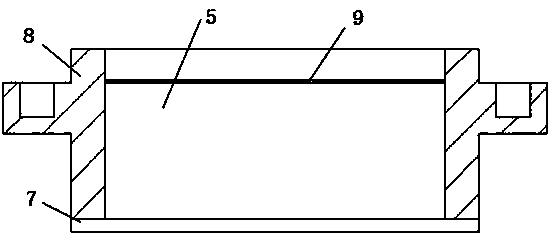

Flexible adjustable lens with multilayer structure and zooming optical system

The invention discloses a flexible adjustable lens with a multilayer structure and a zoom optical system. A rigid lens, optical liquid, a multichannel glass inner lens and a transparent elastic film serve as the refractive media of a multilayer, multichannel flexible adjustable lens. The zoom optical system comprises a pre-refraction lens group, a diaphragm, a flexible adjustable zoom lens group and a rear refraction lens group, arranged coaxially in sequence from left to right; the flexible adjustable zoom lens group comprises two flexible adjustable lenses. During zooming, an annular ultrasonic motor squeezes the outer surface of each flexible lens through a transmission press ring to change its focal length, and continuous zooming of the system is achieved by adjusting the focal lengths of the two flexible lenses in coordination. The lens and system offer a compact structure, flexible control, a stable optical axis, a large adjustment range and high imaging quality, and can be used in all kinds of modern optical imaging systems and robot vision systems.

Owner:北京中科视控科技有限公司
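
Both zoom designs above change the system focal length by reshaping adjustable lenses. Under a thin-lens approximation (an assumption; the real systems contain thick lens groups), two lenses with focal lengths `f1` and `f2` separated by `d` combine as `1/f = 1/f1 + 1/f2 - d/(f1*f2)`. The sketch evaluates that formula and solves it for the second focal length needed to reach a target value; the function names are hypothetical.

```python
def combined_focal_length(f1, f2, d):
    """Effective focal length of two thin lenses separated by d:
    1/f = 1/f1 + 1/f2 - d/(f1*f2), i.e. f = f1*f2 / (f1 + f2 - d)."""
    denom = f1 + f2 - d
    if denom == 0:
        raise ValueError("afocal configuration: effective focal length is infinite")
    return f1 * f2 / denom


def second_focal_length_for(f_target, f1, d):
    """Focal length the second lens must take so the pair has f_target,
    obtained by solving the formula above for f2."""
    if f1 == f_target:
        raise ValueError("no finite f2 gives this target for the given f1")
    return f_target * (f1 - d) / (f1 - f_target)
```

A practical zoom system also has to keep the image plane in place as the focal length changes, which is one reason the two adjustable lenses are driven together rather than independently.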

Bionic-based robot perception control system and control method

Status: Active | Publication: CN109079799A | Tags: Realize multi-degree-of-freedom positioning; Beneficial technical effect; Programme-controlled manipulator; Simulation; Visual perception

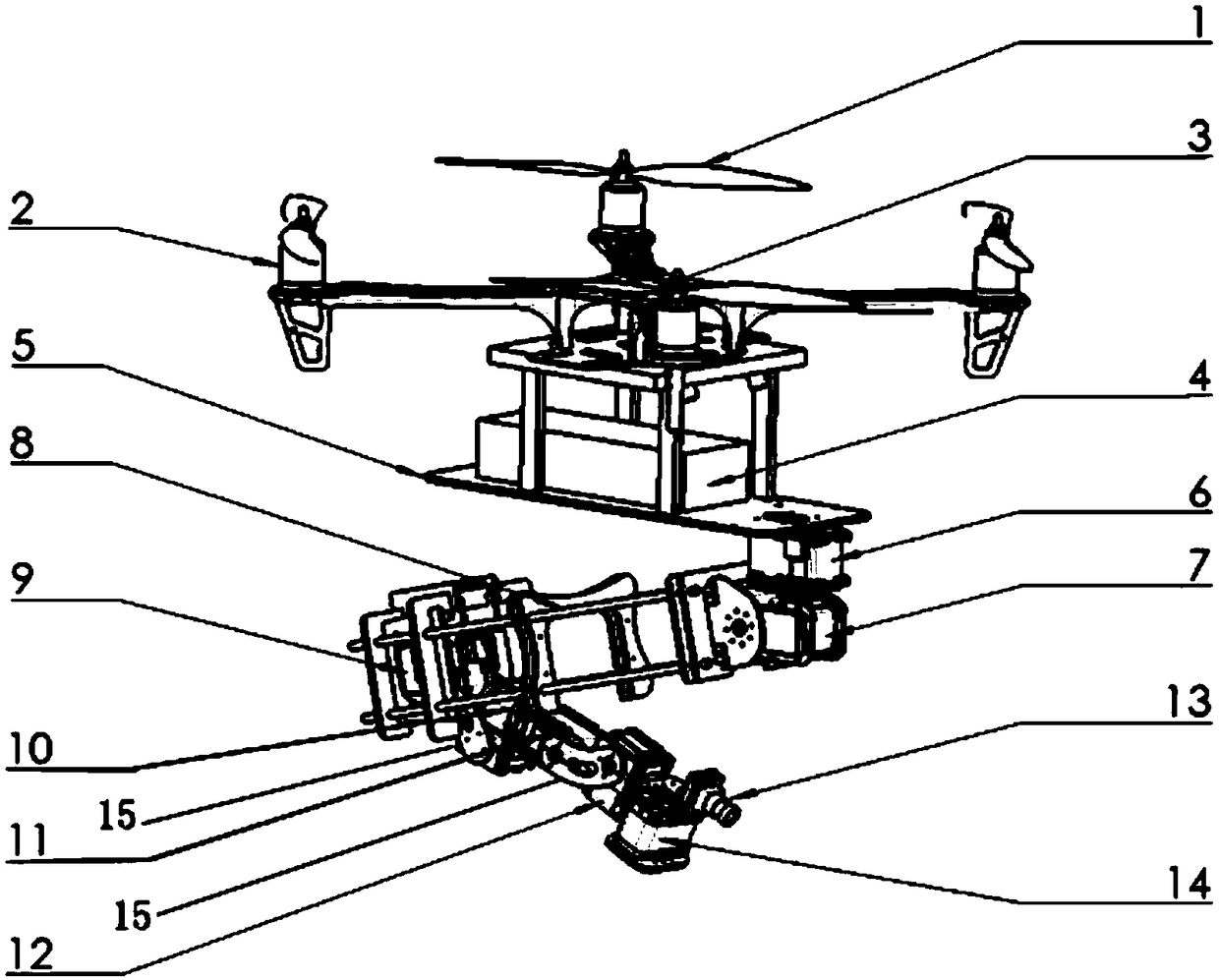

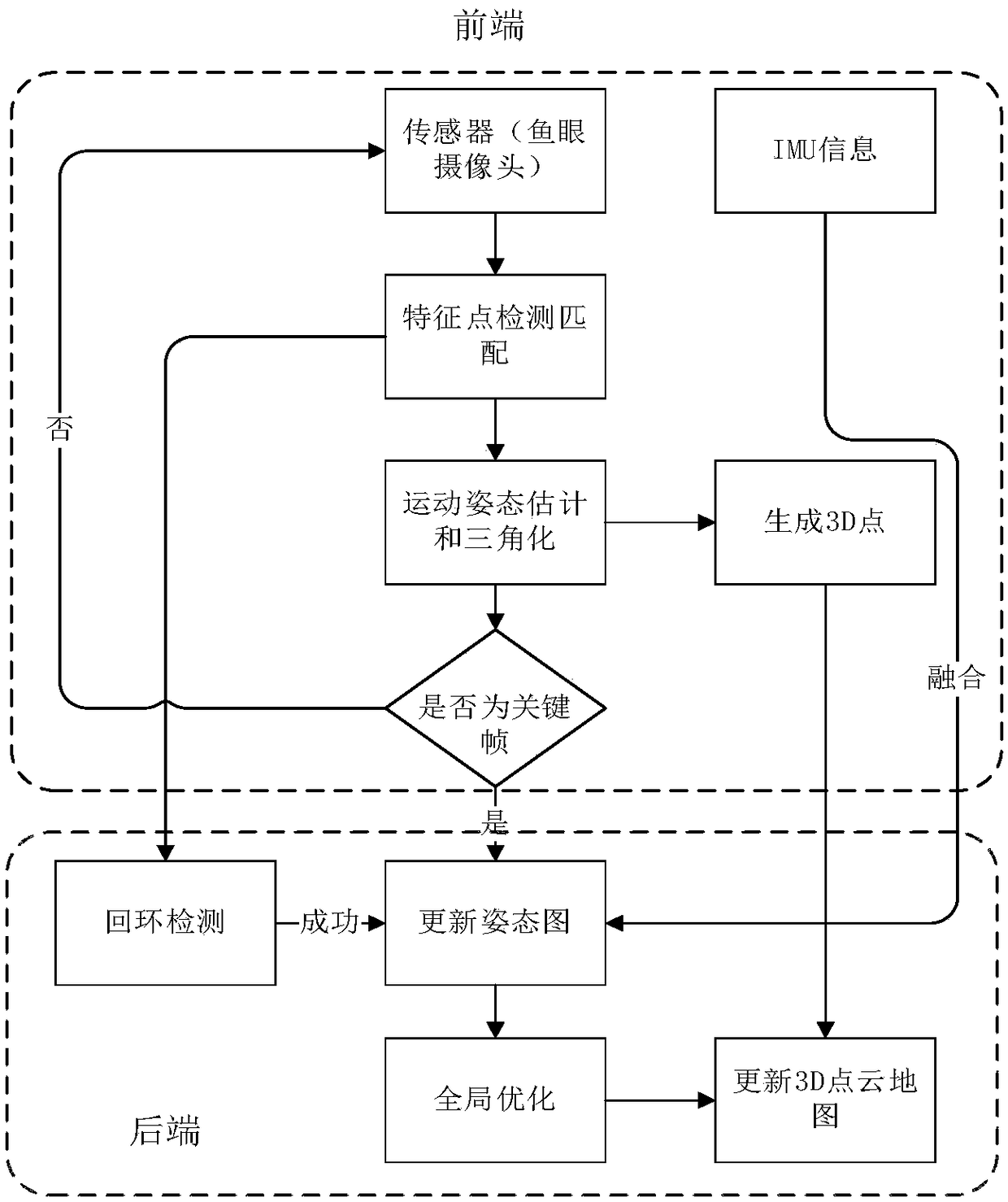

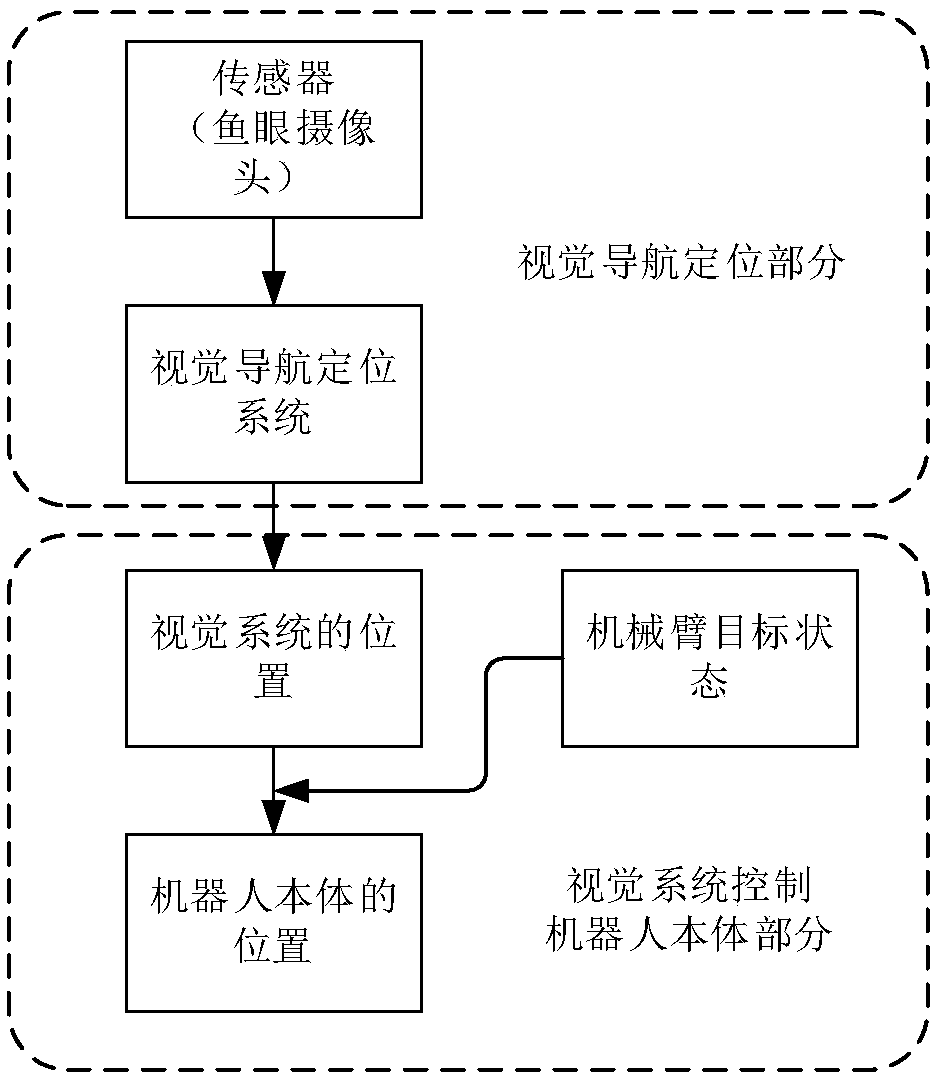

The invention discloses a bionic-based robot perception control system and control method. The system comprises a robot body, a mechanical arm and a visual-inertial navigation module. The robot body carries a mechanical arm base on which the arm is mounted; the arm has several revolute joints, each driven by a servo; and the visual-inertial navigation module is installed at the end of the arm and uses a visual-inertial odometer to build a cloud map synchronously in real time. Using this module as the visual navigation and positioning system of a bionic robot, and exploiting the arm's multi-degree-of-freedom motion, frees the robot's vision system from the motion constraints of the robot body, so the robot can cope with complex, changing dynamic scenes. Visual-inertial SLAM provides real-time navigation and positioning, gives the bionic robot strong human-computer interaction, and allows it to be applied in various dynamic scenes.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

Bank self-service robot system and automatic navigation method thereof

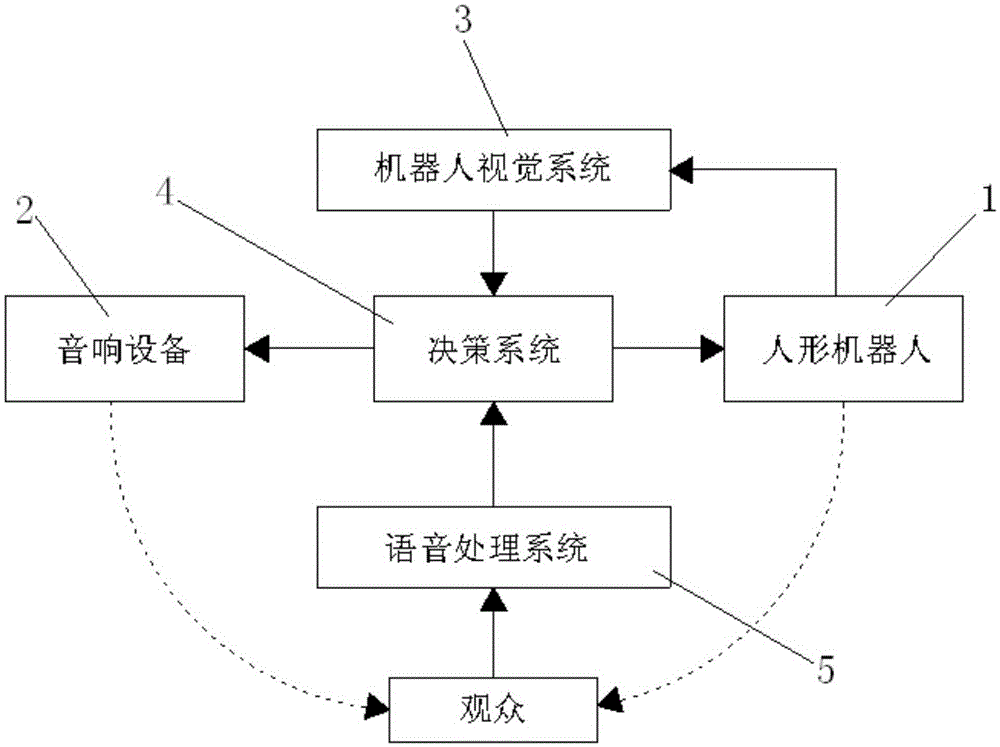

Status: Inactive | Publication: CN105425799A | Tags: Position/course control in two dimensions; Vehicles; Control layer; Humanoid robot NAO

The invention discloses a bank self-service robot system and its automatic navigation method. The system comprises a humanoid robot, audio equipment, a robot vision system, a decision-making system and a voice processing system. The decision-making system is connected to the humanoid robot, the audio equipment, the robot vision system and the voice processing system; the humanoid robot is connected to the robot vision system; and the voice processing system interacts with customers. Using advanced SLAM technology and ROS (Robot Operating System), the system makes the robot's control layer act fully like a human brain: the robot localizes and navigates itself autonomously in the indoor environment, voice interaction runs fully autonomously without manual control, and the robot is more intelligent and user-friendly.

Owner:KUNSHAN PANGOLIN ROBOT

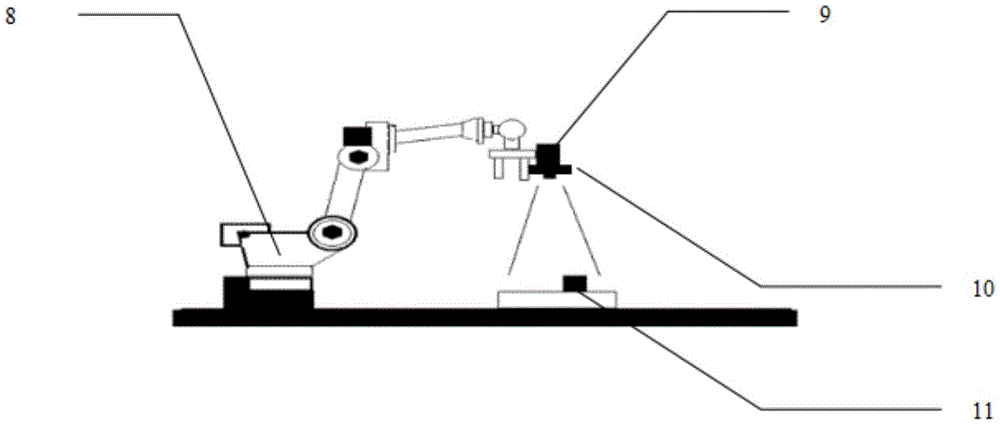

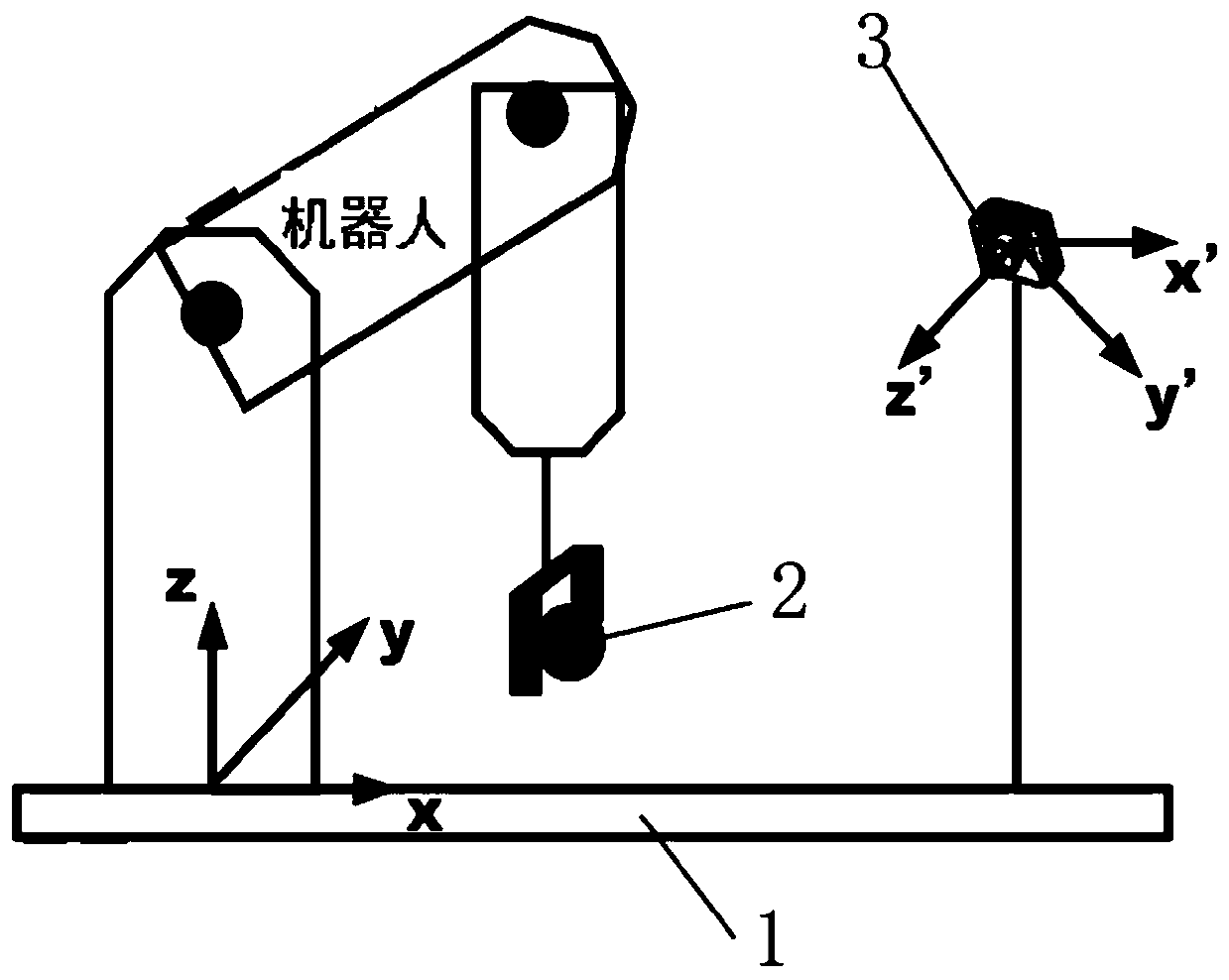

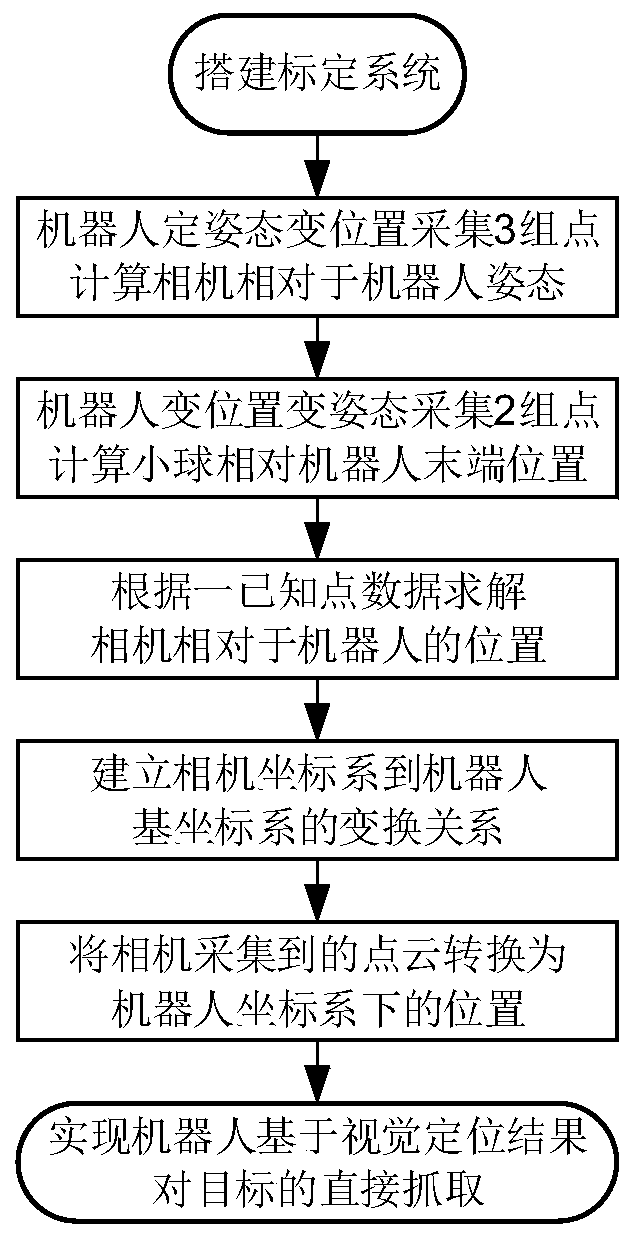

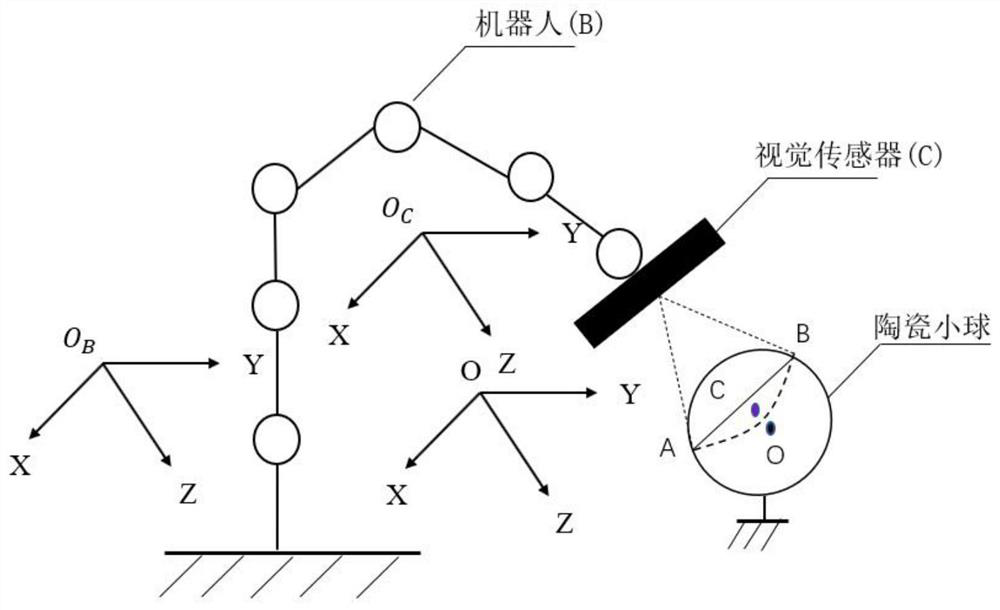

Camera pose calibration method based on spatial point location information

The invention discloses a camera pose calibration method based on spatial point location information. For a robot vision system with an independently mounted camera, a sphere is attached to the robot's end as the calibration object. The robot is then moved so that the sphere takes different positions and orientations, and images and point clouds of the sphere (a table tennis ball) at the robot's end are collected; the sphere center is fitted and used as a spatial point, and the corresponding robot pose is recorded. The transformation between the camera coordinate system and the robot base coordinate system is then calculated from the equations relating specific point position changes. Points collected in the camera coordinate system are converted into the robot base coordinate system, so that the robot can grasp targets directly under visual guidance. Using a sphere as the calibration object makes the operation simple, flexible and portable and streamlines the tedious calibration process; compared with methods that rely on a calibration plate or an intermediate calibration coordinate system, it achieves higher precision and introduces no intermediate transformation or extra error sources.

Owner:EUCLID LABS NANJING CORP LTD
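
Fitting the sphere center is the step that turns each point cloud of the ball into a single spatial point. A minimal sketch, assuming an algebraic least-squares fit (the abstract does not say which fitting method is used) on plain Python tuples; the subsequent solve for the camera-to-base transform is not shown, and `fit_sphere` and `solve_linear` are hypothetical names.

```python
import math


def solve_linear(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. all points coplanar)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_sphere(points):
    """Algebraic least-squares sphere fit.

    Uses x^2 + y^2 + z^2 = 2ax + 2by + 2cz + k with k = r^2 - a^2 - b^2 - c^2,
    which is linear in (a, b, c, k). Needs at least 4 non-coplanar points.
    """
    rows = [(2 * x, 2 * y, 2 * z, 1.0) for x, y, z in points]
    rhs = [x * x + y * y + z * z for x, y, z in points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(4)]
    a, b, c, k = solve_linear(ata, atb)
    return (a, b, c), math.sqrt(k + a * a + b * b + c * c)
```

The fitted radius can double as a sanity check: it should match the known ball radius, and a large mismatch suggests a poor segmentation of the point cloud.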

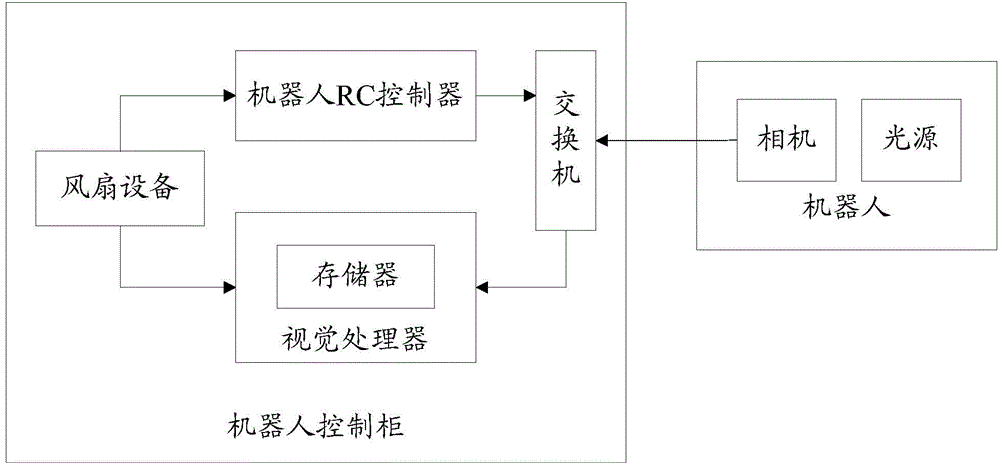

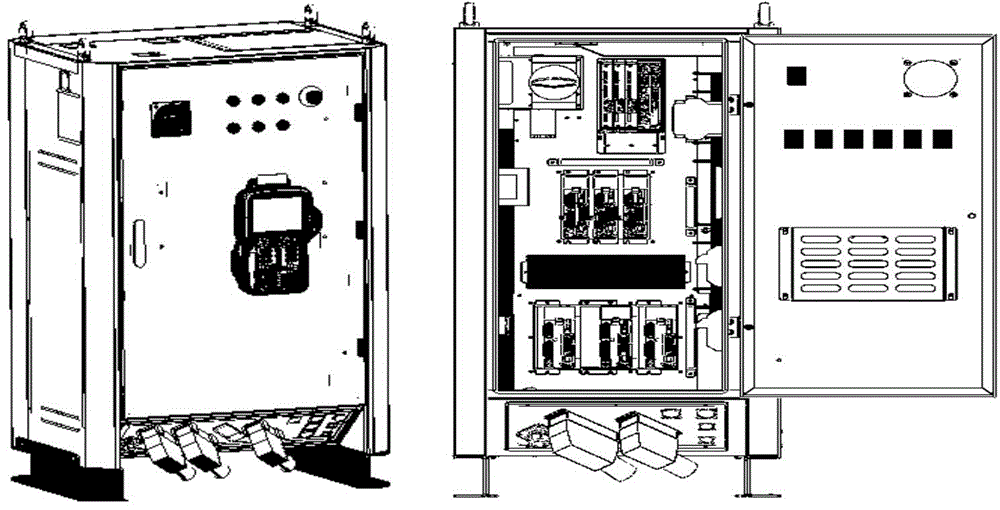

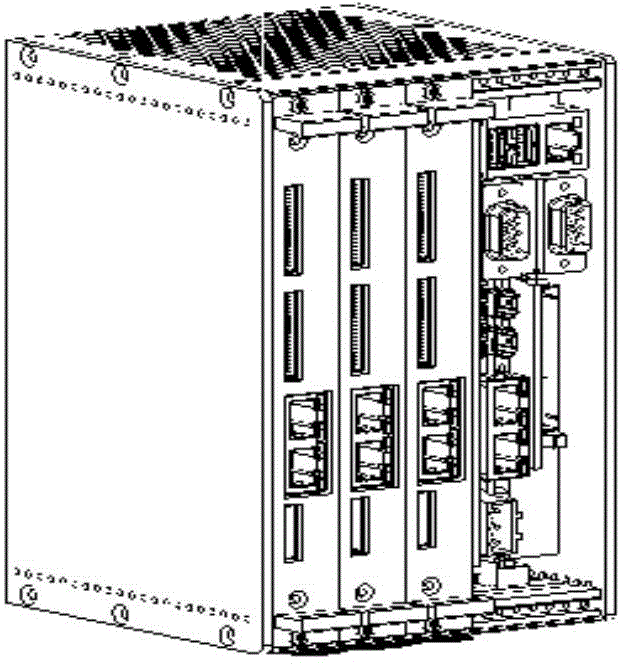

Robot vision system

The invention belongs to the technical field of robot vision systems and relates in particular to a robot vision system comprising a camera, a robot control cabinet, a robot controller (RC), a vision processor and a network switch. The RC, the vision processor and the switch are all housed in the robot control cabinet, and the camera and the RC are both connected to the vision processor's network through the switch. Integrating the vision processor into the control cabinet reduces the camera's size and weight, makes the system convenient to apply, and helps lower the cost of the camera. The vision processor uses an x86-based PC/104 board as its processor, giving it more computing power than the processors in current smart cameras; the cabinet also provides ample space for heat dissipation, so the system is more reliable.

Owner:SHENYANG SIASUN ROBOT & AUTOMATION

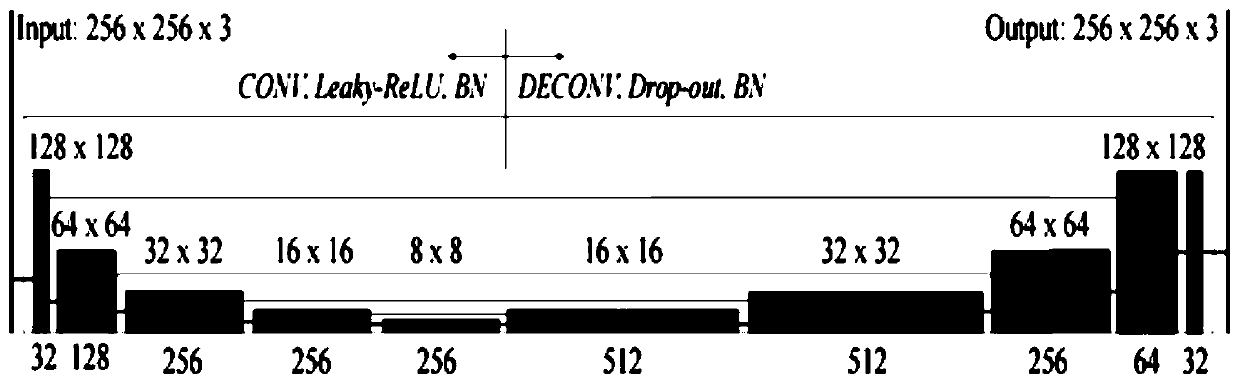

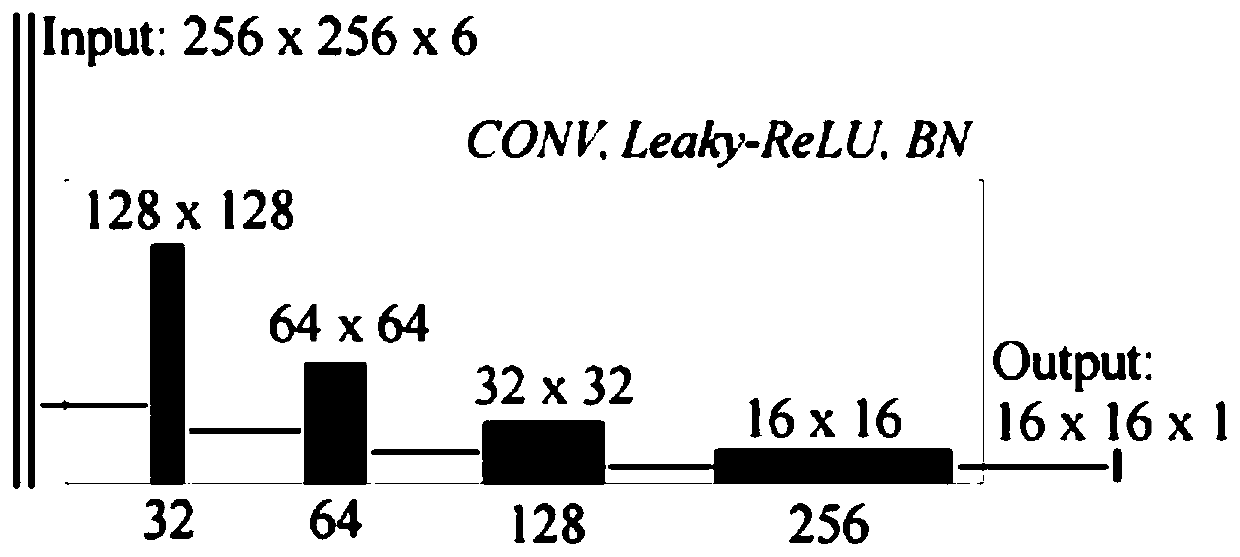

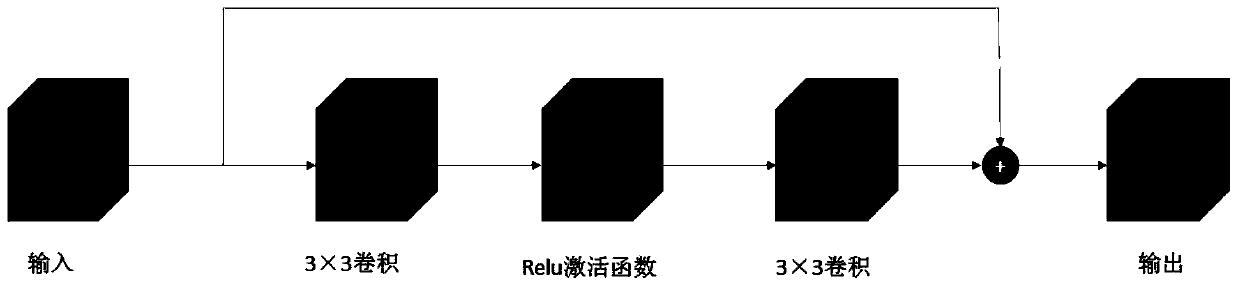

Underwater image real-time enhancement method based on conditional generative adversarial network

Status: Active | Publication: CN111062880A | Tags: Solve the noise; Troubleshooting technical issues with distortion; Image enhancement; Image analysis; Pattern recognition; Imaging processing

The invention discloses a real-time underwater image enhancement method based on a conditional generative adversarial network, in the technical field of image processing. A conditional generative adversarial network system is established within a robot vision system: the image model (generator) network is built on the U-Net principle, and the discriminator module adopts a Markovian design. This addresses the noise and distortion of underwater images. The adversarial network is optimized with deep convolution, improving the underwater robot's visual image enhancement. The perceptual quality of an image is evaluated from its global content, color, local texture and style, and a multi-modal objective function is formulated to train the model.

Owner:NANJING INST OF TECH
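
The Nanjing Institute of Technology abstract pairs a U-Net image model with a Markovian discriminator and a multi-modal training objective. The sketch below assumes "Markov" refers to a PatchGAN-style discriminator (as in pix2pix) that scores each image patch separately, and shows only two terms such an objective could contain: a patch-averaged adversarial loss and a weighted L1 content loss. The weight `lambda_l1=100.0` and all function names are illustrative assumptions; the patent's color, texture and style terms are not reproduced. Images are nested lists of floats to keep the example dependency-free.

```python
import math

def patch_adversarial_loss(patch_scores, eps=1e-12):
    """Generator-side adversarial loss for a Markovian (PatchGAN-style) discriminator.

    patch_scores is a 2-D grid of discriminator outputs in (0, 1], one per image patch,
    giving the probability that the generated patch is real. The loss is the mean of
    -log(score) over all patches, so it is 0 when every patch fools the discriminator.
    """
    flat = [s for row in patch_scores for s in row]
    return -sum(math.log(max(s, eps)) for s in flat) / len(flat)

def l1_content_loss(generated, target):
    """Mean absolute pixel difference between the enhanced image and its reference."""
    g = [v for row in generated for v in row]
    t = [v for row in target for v in row]
    return sum(abs(a - b) for a, b in zip(g, t)) / len(g)

def generator_objective(patch_scores, generated, target, lambda_l1=100.0):
    """Adversarial term plus a weighted content term (lambda_l1 is an assumed weight)."""
    return patch_adversarial_loss(patch_scores) + lambda_l1 * l1_content_loss(generated, target)
```

Averaging over patches instead of scoring the whole image is what makes the discriminator "Markovian": each score depends only on a local neighborhood, which pushes the generator toward correct local texture.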

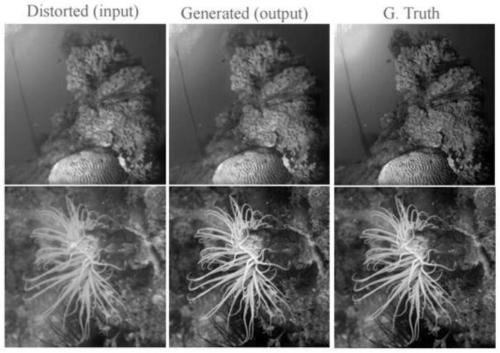

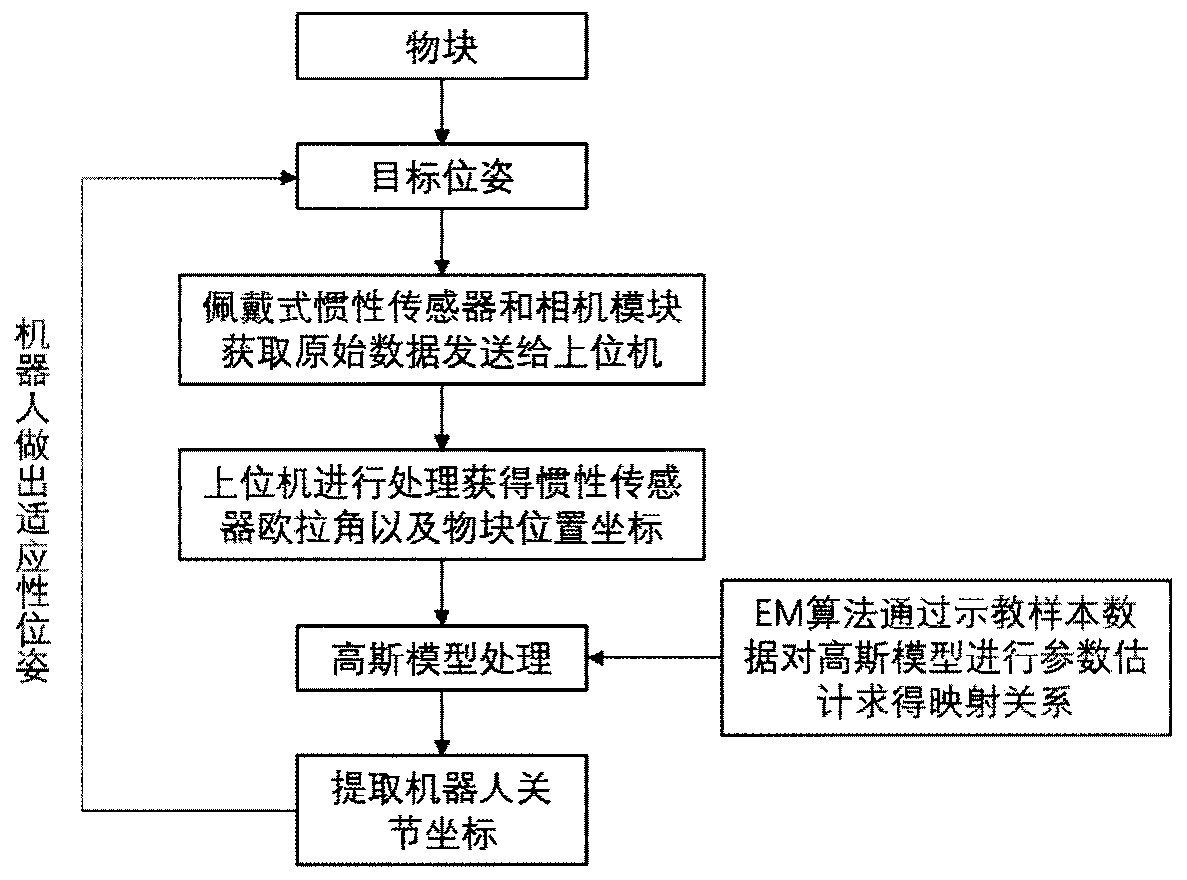

Man-machine collaboration robot grabbing system and work method thereof

Inactive · CN109732610A · Low professional skills required · Smooth and supple trajectory · Programme-controlled manipulator · Joint coordinates · Simulation

The invention discloses a man-machine collaboration robot grasping system and its working method. The system comprises a wearable inertial sensor module, a camera spacing module, a Gaussian model EM-algorithm training module, a Gaussian model processing module and an adaptive robot joint-coordinate extraction module. At a man-machine collaboration application site, the system requires neither calibration of the robot vision system nor inverse kinematics computation, which lowers the professional skill demanded of the operator. In addition, the system can learn the mapping without a large number of samples, and the resulting robot trajectory is smooth.

Owner:BEIHANG UNIV
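
The Beihang abstract names a Gaussian model trained with the EM algorithm. As a minimal sketch, assuming the demonstration data can be reduced to scalar samples, the following fits a one-dimensional Gaussian mixture by expectation-maximization. The patent presumably models multi-dimensional inertial-sensor and joint-coordinate data; the function name, the initialization from user-supplied means and the fixed iteration count are hypothetical choices.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def fit_gmm_1d(data, means, n_iter=50, min_var=1e-6):
    """Fit a 1-D Gaussian mixture with EM, starting from the given initial means.

    Returns (weights, means, variances), one entry per component.
    """
    k = len(means)
    means = list(means)
    weights = [1.0 / k] * k
    mu = sum(data) / len(data)
    overall_var = sum((x - mu) ** 2 for x in data) / len(data)
    variances = [max(overall_var, min_var)] * k  # start every component wide
    for _ in range(n_iter):
        # E-step: responsibility of each component for each sample
        resp = []
        for x in data:
            p = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(p) or 1e-300  # guard against all densities underflowing
            resp.append([pj / total for pj in p])
        # M-step: re-estimate weights, means and variances from the responsibilities
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj < 1e-12:  # component lost all its samples; leave it unchanged
                continue
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var_j = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var_j, min_var)  # floor keeps the density finite
    return weights, means, variances
```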

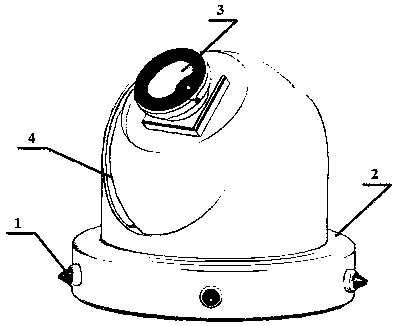

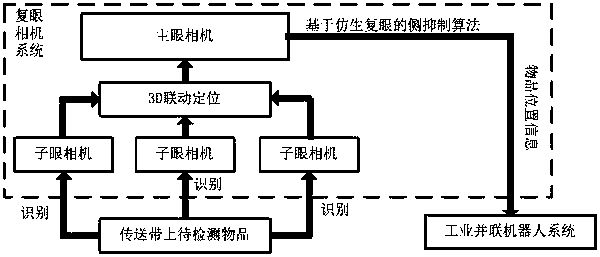

Industrial parallel robot fast visual inspection algorithm based on bionic compound eye structure

Inactive · CN107610086A · Recognition speed is fast · Easy to identify · Image enhancement · Image analysis · Pattern recognition · Engineering

An industrial parallel robot fast visual inspection algorithm based on a bionic compound-eye structure is provided. It combines a compound-eye camera system, consisting of multiple sub-eye cameras and a main-eye camera mounted on the front end of an industrial parallel robot, with a lateral inhibition algorithm modeled on bionic compound eyes. The algorithm rapidly captures the contours of objects on a conveyor belt across the global field of view and makes the parallel robot's vision system highly sensitive to target contours. Once the sub-eye cameras have quickly located a target, a coordinate mapping relationship lets the main-eye camera, on receiving a command, rapidly zoom in on and locate the target position for further small-field-of-view, large-target recognition, improving the visual recognition rate and efficiency of the industrial parallel robot.

Owner:TIANJIN SUPER ROBOT TECH CO LTD
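
The lateral inhibition idea in the Tianjin Super Robot abstract can be illustrated with the classic non-recurrent form, in which each cell's response is its own input minus a fraction `k` of its neighbors' mean input. This is a hypothetical sketch on a grayscale image stored as nested lists, with a 4-neighborhood and `k=0.5` chosen for illustration; it is not the patent's algorithm, and the sub-eye to main-eye coordinate mapping is not shown.

```python
def lateral_inhibition(image, k=0.5):
    """Non-recurrent lateral inhibition over a 4-neighborhood.

    Each output pixel is image[r][c] - k * mean(up, down, left, right).
    Missing neighbors at the border are replaced by the pixel itself (edge replication).
    Uniform regions are scaled by (1 - k); at a contour the dark side undershoots and
    the bright side overshoots, which makes the contour stand out.
    """
    h, w = len(image), len(image[0])

    def px(r, c):
        # Clamp indices so out-of-range neighbors reuse the nearest border pixel
        return image[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    out = []
    for r in range(h):
        row = []
        for c in range(w):
            neighbors = (px(r - 1, c) + px(r + 1, c) + px(r, c - 1) + px(r, c + 1)) / 4.0
            row.append(image[r][c] - k * neighbors)
        out.append(row)
    return out
```

On a vertical step from 10 to 50, each row of the output becomes `[5.0, 0.0, 30.0, 25.0]`: the pixel just before the edge drops below its flat level and the pixel just after rises above it.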

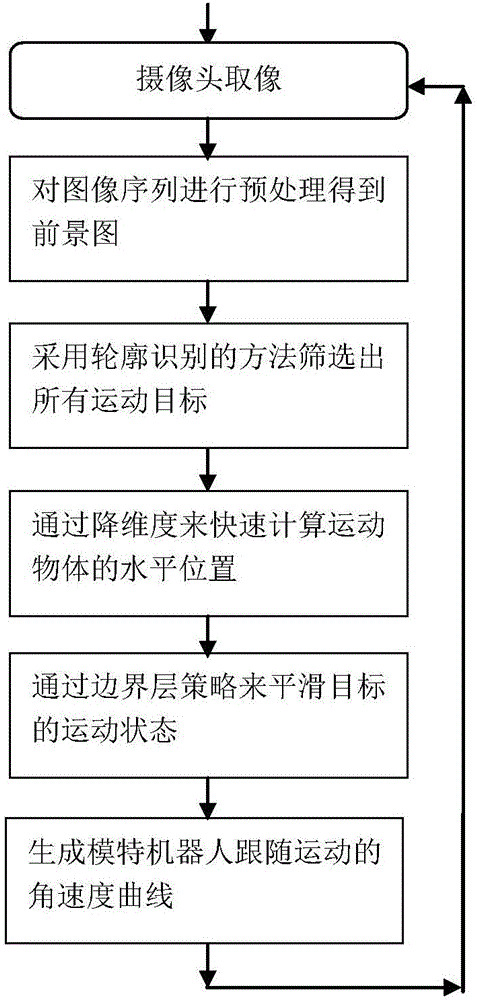

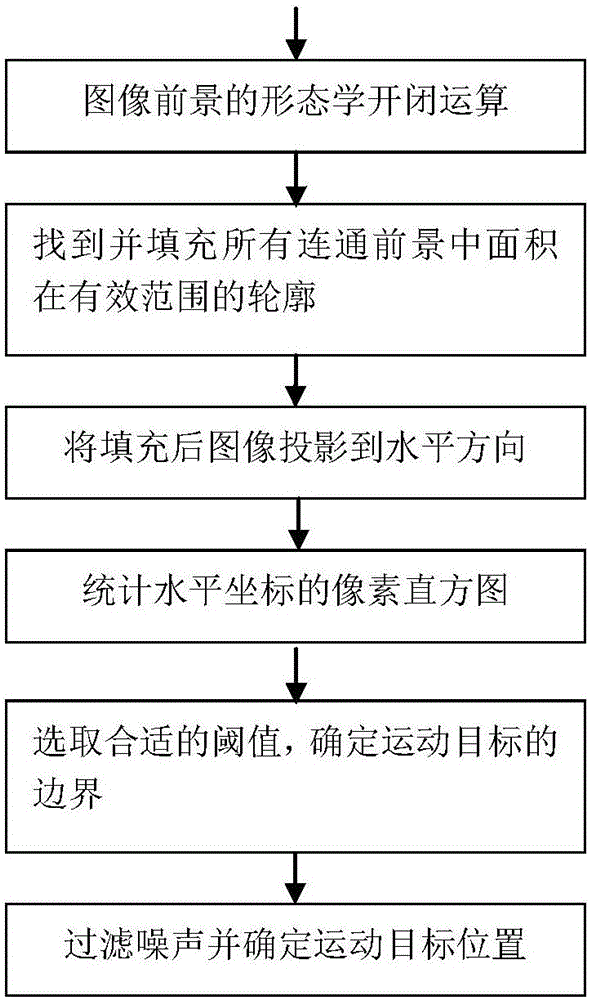

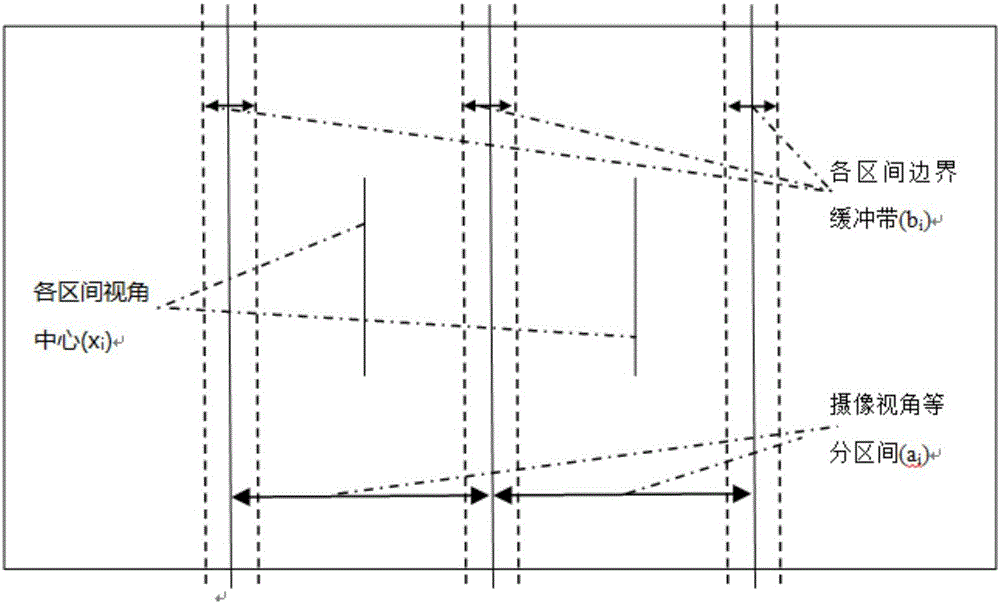

Method for detecting and smoothly following moving object based on video images

Active · CN106530328A · Quick response · Improve computing efficiency · Image enhancement · Image analysis · Object based · Angular velocity

The invention provides a method for detecting and smoothly following a moving object based on video images. The method comprises preprocessing an image sequence to obtain a foreground image, screening out all moving objects with a contour identification method, rapidly calculating the horizontal positions of the moving objects by dimension reduction, smoothing the objects' motion state with a boundary layer strategy, and generating the angular velocity curve for the model robot's following motion. The algorithm is simple and easy to implement, and moving objects are identified quickly. The method places low demands on the hardware configuration and can be widely used in the vision systems of model robots. It lets a model robot smoothly follow a moving object while preventing jitter.

Owner:SHENZHEN WEIZHOU ROBOT TECHNOLOGY CO LTD (深圳维周机器人科技有限公司)
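
The pipeline in this abstract (foreground extraction, dimension reduction to a horizontal position, boundary-layer smoothing, angular-velocity output) can be sketched as follows. Assumptions: the foreground comes from simple background subtraction with a fixed threshold, "dimension reduction" means projecting the foreground mask onto the horizontal axis, all moving pixels are treated as one target (the patent's contour-based screening is omitted), and the boundary layer is the saturation function familiar from sliding-mode control. Names and parameter values are hypothetical.

```python
def foreground_mask(frame, background, threshold=30):
    """Binary mask of pixels that differ from the background by more than threshold."""
    return [[1 if abs(p - b) > threshold else 0 for p, b in zip(f_row, b_row)]
            for f_row, b_row in zip(frame, background)]

def horizontal_position(mask):
    """Project the mask onto the x-axis and return the centroid column, or None if empty."""
    columns = [sum(col) for col in zip(*mask)]  # 2-D mask -> 1-D column histogram
    total = sum(columns)
    if total == 0:
        return None
    return sum(i * n for i, n in enumerate(columns)) / total

def follow_angular_velocity(target_x, image_width, omega_max=1.0, boundary=0.2):
    """Turn-rate command with a boundary layer instead of on/off switching.

    The normalized offset of the target from the image center lies in [-1, 1].
    Inside |offset| < boundary the command is linear in the offset; outside it
    saturates at +/- omega_max. A positive value means the target is right of center.
    """
    if image_width < 2:
        raise ValueError("image_width must be at least 2")
    if target_x is None:
        return 0.0  # nothing to follow
    center = (image_width - 1) / 2.0
    offset = (target_x - center) / center
    return omega_max * max(-1.0, min(1.0, offset / boundary))
```

The linear band of width `boundary` around the image center replaces an on/off turn command, which is what keeps the robot from jittering when the target sits near the center.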

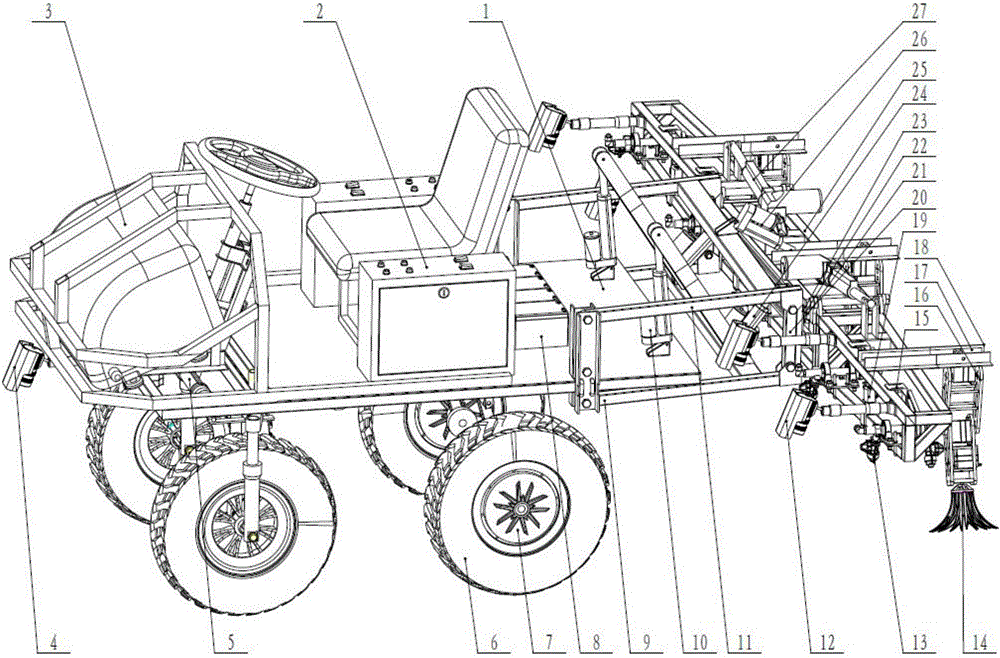

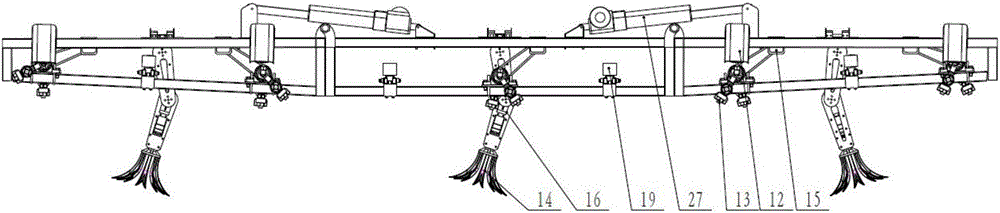

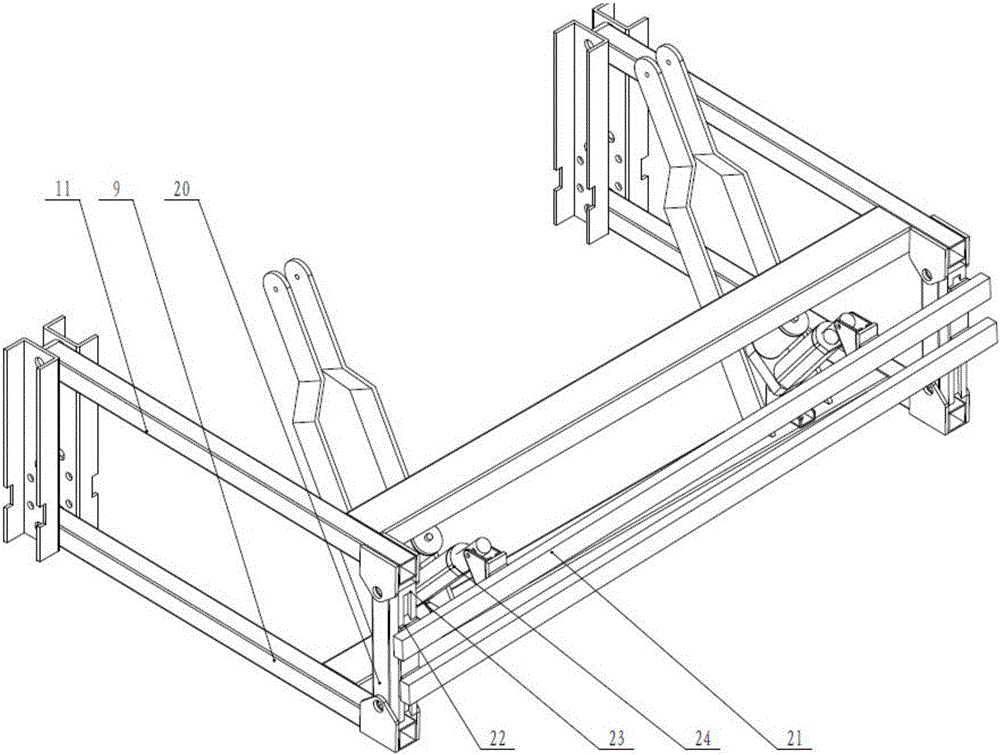

Weeding robot with both functions of targeted quantitative spraying and mechanical fixed point shoveling

The invention relates to a weeding robot that combines targeted quantitative spraying with mechanical fixed-point shoveling. The robot consists of a dual-hub electrically driven mechanical platform, a robot vision system, an intelligent control system, an automatic steering system, a horizontal holding mechanism, a foldable weeding platform, an alternative targeted quantitative spraying system, an electric assist system and a feedback system. After power-on, the robot uses vision to track the orientation of the in-field seedling rows, enabling automatic intelligent navigation. At the same time the foldable weeding platform unfolds and five sets of image acquisition devices collect in-field data in real time. The weed information is analyzed and integrated into pulse instructions, which control the opening and closing of a solenoid valve and drive a stepper motor on the foldable weeding platform that rotates double nozzles, achieving spatially fixed-point, quantitative, targeted spraying. Sensor feedback adjusts the extension length of a push-rod motor, which in turn adjusts the horizontal holding mechanism so that the weeding mechanism stays at a constant distance from the ground, allowing accurate weeding. The robot can be applied to agricultural weeding operations.

Owner:NORTHEAST AGRICULTURAL UNIVERSITY
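
The abstract does not say how weed detections become valve pulses. One hypothetical scheme, sketched below, delays each pulse by the time the weed needs to travel from the camera's reference line to the nozzle and sets the pulse length from the desired dose and the nozzle flow rate. All names and numbers are assumptions, the robot is assumed to move at constant speed, and the rotating double nozzles are not modeled.

```python
from dataclasses import dataclass

@dataclass
class SprayPulse:
    open_at_s: float   # delay after image capture before opening the valve
    duration_s: float  # how long the valve stays open

def schedule_pulses(weed_offsets_m, camera_to_nozzle_m, speed_m_s, dose_ml, flow_ml_s):
    """Turn weed detections into timed valve pulses (hypothetical scheme).

    weed_offsets_m: along-track distance of each weed ahead of the camera's reference line.
    The nozzle trails that line by camera_to_nozzle_m, and the robot moves at speed_m_s.
    """
    if speed_m_s <= 0 or flow_ml_s <= 0:
        raise ValueError("speed and flow rate must be positive")
    duration = dose_ml / flow_ml_s  # fixed dose per weed: open time = volume / flow rate
    return [
        SprayPulse(open_at_s=(camera_to_nozzle_m + d) / speed_m_s, duration_s=duration)
        for d in sorted(weed_offsets_m)  # earliest weed first
    ]
```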

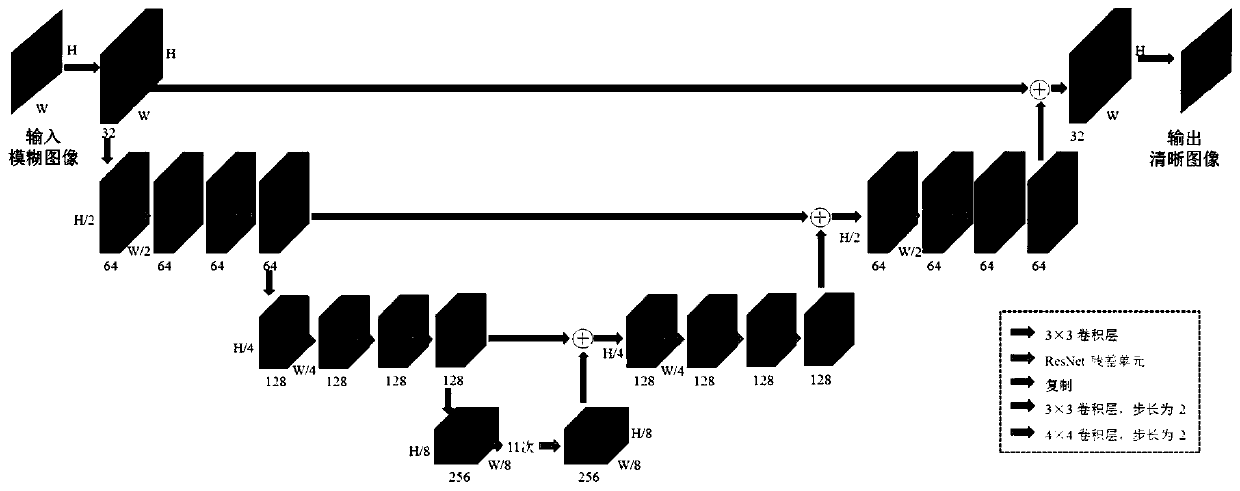

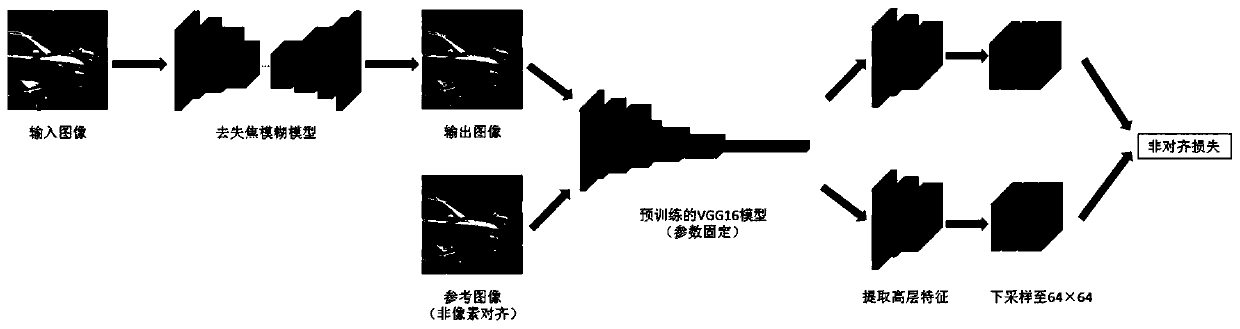

Image defocusing blurring method based on deep learning

Active · CN111091503A · Clear texture · Effective recovery · Image enhancement · Image analysis · Pattern recognition · Data pack

The invention belongs to the technical field of intelligent digital image processing, and particularly relates to an image defocus deblurring method based on deep learning. The method comprises the following steps: constructing a defocus-blur data set, by shooting or by adding random blur, so that each group of data contains a sharp image as the original and several corresponding blurred images; training a defocus deblurring deep neural network; and using the network, with a non-alignment loss function, to recover objects in the image that lie outside the focal plane. A deblurring data set that is not aligned at pixel level is shot in real scenes, and the deep neural network is trained on it with the non-alignment loss function. Experimental results show that out-of-focus blurred images shot in real scenes can be effectively recovered, and that the proposed data set can effectively train the defocus deblurring network through the non-alignment loss function. The method can be used in camera zooming, robot vision systems and similar applications.

Owner:FUDAN UNIV
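
The abstract does not define its non-alignment loss. A simple misalignment-tolerant substitute, sketched here as an assumption and not as the patent's loss, takes the smallest mean L1 error over small integer shifts between the network output and the sharp reference, so a reference shot with a slight offset is not penalized for the offset itself. Function names and `max_shift` are hypothetical, and both images are assumed to have the same size.

```python
def shifted_l1(pred, target, dy, dx):
    """Mean |pred[y+dy][x+dx] - target[y][x]| over the pixels where the shifted images overlap."""
    h, w = len(target), len(target[0])
    total, count = 0.0, 0
    for y in range(max(0, -dy), min(h, h - dy)):
        for x in range(max(0, -dx), min(w, w - dx)):
            total += abs(pred[y + dy][x + dx] - target[y][x])
            count += 1
    return total / count

def misalignment_tolerant_l1(pred, target, max_shift=2):
    """Smallest shifted L1 error over all integer shifts up to max_shift in each direction."""
    if max_shift >= min(len(target), len(target[0])):
        raise ValueError("max_shift must be smaller than the image size")
    return min(
        shifted_l1(pred, target, dy, dx)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    )
```

A prediction that is correct but offset by one pixel from its reference gets a plain L1 error above zero, while this tolerant version returns 0.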

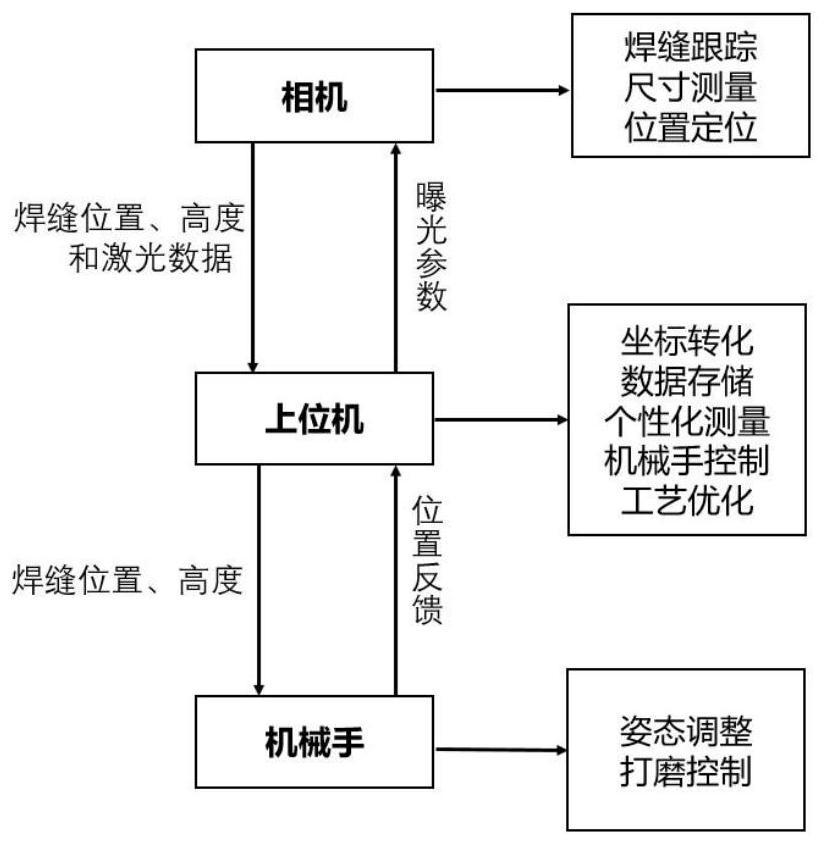

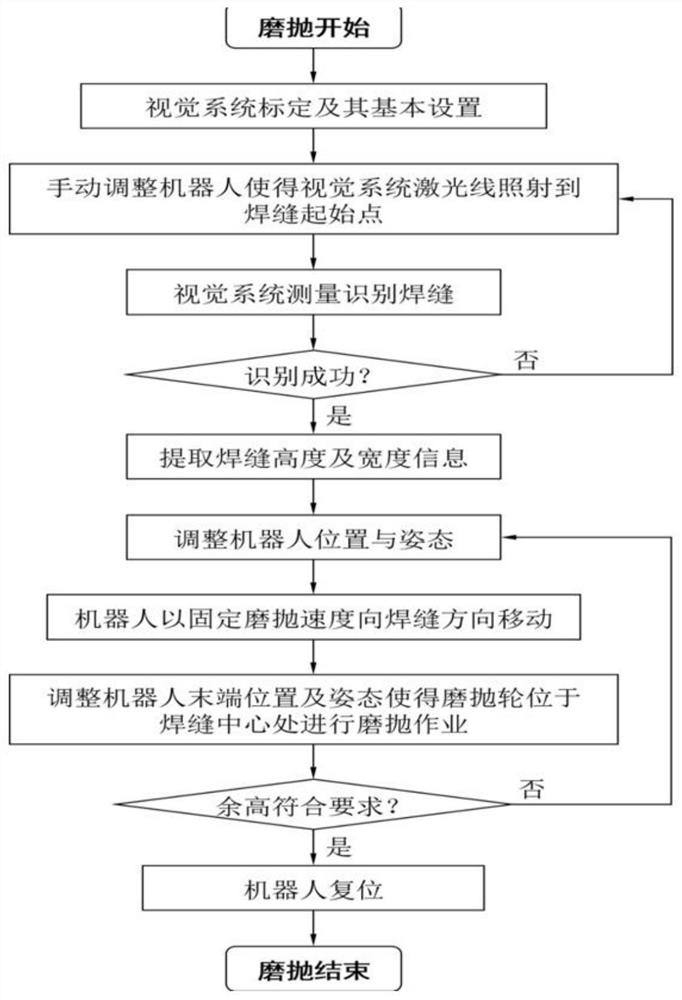

Online grinding method of weld joint grinding and polishing robot

Active · CN112223293A · Realize online adjustment · Solving Adaptive Problems · Edge grinding machines · Programme-controlled manipulator · Point cloud · Control engineering

The invention discloses an online grinding method for a weld-seam grinding and polishing robot. The method comprises the following steps:

1. Calibrate the relationships among the coordinate systems of the visual measurement unit, the robot and the grinding wheel.
2. Establish communication among the robot, the vision system and a PC.
3. Establish a reference and a contour reference for the vision system.
4. Create a robot motion start point and move the grinding wheel to the grinding and polishing start point of the structural-part weld seam.
5. A frequency converter adjusts the grinding wheel speed, and the robot slowly approaches the weld seam start point.
6. The visual measurement unit recognizes the weld seam contour, processes the point cloud data and obtains the weld seam characteristic values.
7. The robot sends a signal with its current position, and the vision system feeds back the feature point data extracted at that moment to the robot.
8. The robot is controlled to track the weld seam contour in real time and keeps sending signals to the vision system; steps 7 and 8 are repeated.
9. The robot completes the grinding and polishing of the weld seam and returns to the Home point.

Owner:HUNAN UNIV OF SCI & TECH
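
Step 6 reduces the measured contour to weld-seam characteristic values without naming them. A minimal sketch, assuming a single laser-line cross-section of (x, z) points in millimeters with bare base metal at both ends, fits a least-squares baseline to the end points and then reports the bead's peak position, its height above the baseline (reinforcement) and its width above a threshold. The choice of features, the thresholds and the names are assumptions; the profile needs at least `2 * edge_points` points with distinct x values.

```python
def fit_line(points):
    """Least-squares line z = slope * x + intercept through (x, z) points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    mz = sum(z for _, z in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxz = sum((x - mx) * (z - mz) for x, z in points)
    slope = sxz / sxx
    return slope, mz - slope * mx

def weld_features(profile, edge_points=5, min_height=0.2):
    """Hypothetical weld-seam features from one cross-section profile."""
    # Baseline from the plate surface at both ends, so a tilted workpiece is handled
    slope, intercept = fit_line(profile[:edge_points] + profile[-edge_points:])
    heights = [(x, z - (slope * x + intercept)) for x, z in profile]
    peak_x, peak_h = max(heights, key=lambda p: p[1])
    bead = [x for x, h in heights if h > min_height]  # points that belong to the bead
    width = (max(bead) - min(bead)) if bead else 0.0
    return {"peak_x": peak_x, "reinforcement": peak_h, "width": width}
```

Fitting the baseline instead of assuming a horizontal plate keeps the reinforcement height correct when the workpiece is tilted relative to the sensor.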

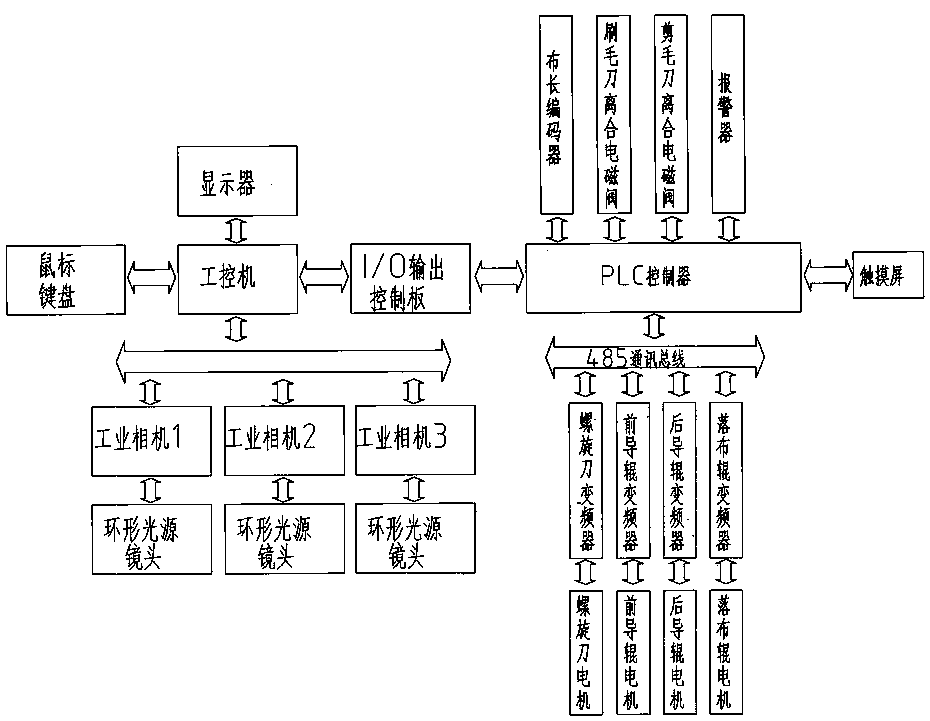

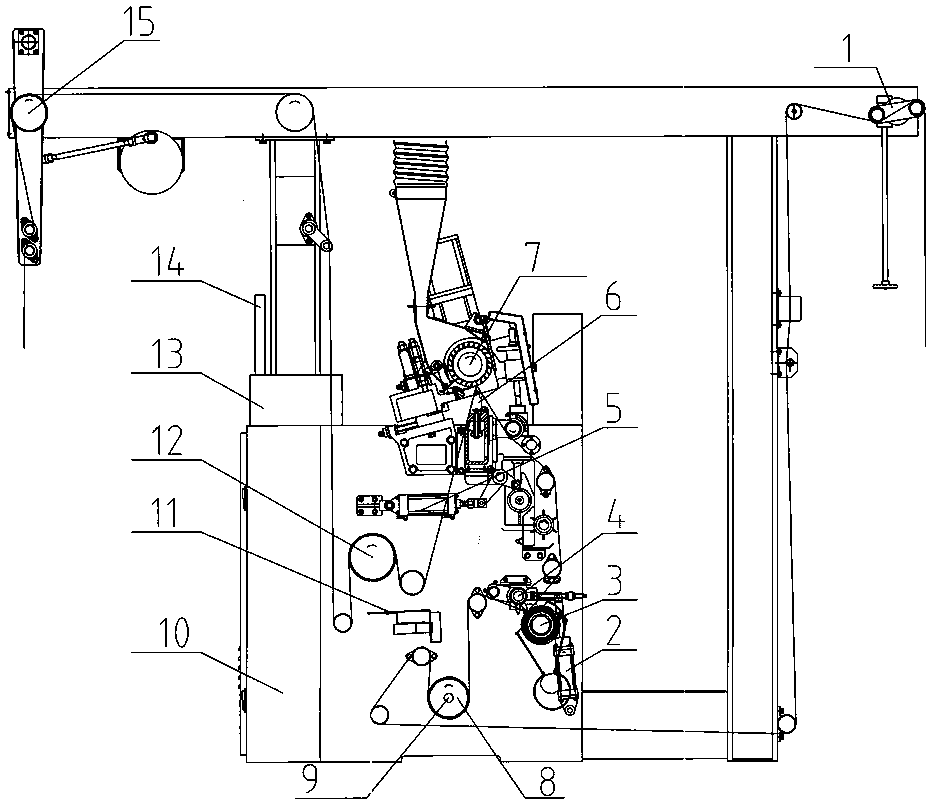

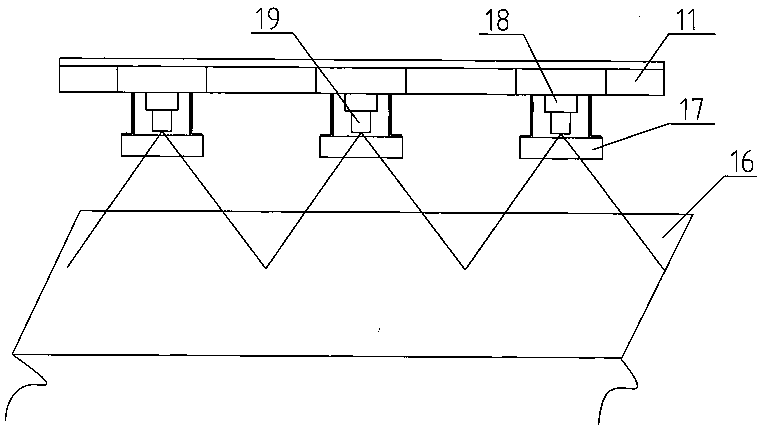

Machine-vision-based intelligent cutter lifting system of shearing machine and realization method thereof

Pending · CN107643727A · Conducive to dealing with uncertainties · Guaranteed to work together · Material analysis by optical means · Programme control in sequence/logic controllers · Control system · Engineering

The invention discloses a machine-vision-based intelligent cutter lifting system for a shearing machine and a method for realizing it. The system comprises a touch screen, a PLC controller and a machine-vision cloth-seam detection system. The detection system is mounted on the frame of the shearing machine, facing directly ahead of and obliquely forward of the fabric being processed, and comprises a vision crossbeam, an imaging system and a control system. The imaging system comprises a light source, a camera and a lens. The light source is mounted on the crossbeam, which sits on the shearing machine frame above the front guide roller, directly facing the leading end of the fabric under inspection. As a non-contact detection system, it does not affect the fabric's processing style; the machine vision system reliably detects cloth seams and holes, and the control system automatically lifts the hair-brushing cutter or shearing cutter to let the cloth seam pass. The system is simple in structure, offers high control accuracy and a low fault rate, saves labor, increases efficiency and improves product quality.

Owner:HAINING TEXTILE MACHINERY FACTORY
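
The abstract does not describe how the camera image is turned into a seam decision. A hypothetical sketch, assuming the seam crosses the fabric width and therefore shows up as image rows whose mean brightness departs from the rest, flags such rows and lifts the cutter when any are found. Hole detection is not covered, and the 15% relative threshold and the function names are assumptions.

```python
import statistics

def detect_seam_rows(image, rel_threshold=0.15):
    """Indices of rows whose mean brightness deviates from the median row mean
    by more than rel_threshold (as a fraction of that median)."""
    row_means = [sum(row) / len(row) for row in image]
    ref = statistics.median(row_means)  # robust to the few seam rows themselves
    if ref == 0:
        return []
    return [i for i, m in enumerate(row_means) if abs(m - ref) / ref > rel_threshold]

def cutter_should_lift(image, rel_threshold=0.15):
    """Lift the cutter whenever at least one seam row is present in the frame."""
    return bool(detect_seam_rows(image, rel_threshold))
```

Using the median row mean as the reference keeps a thick seam from dragging the baseline toward itself, which a plain mean would do.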

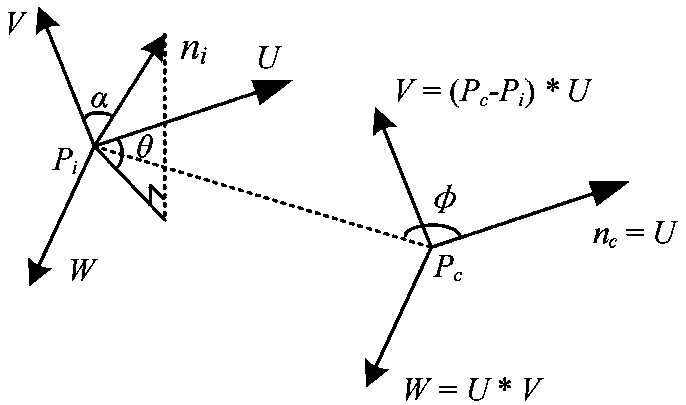

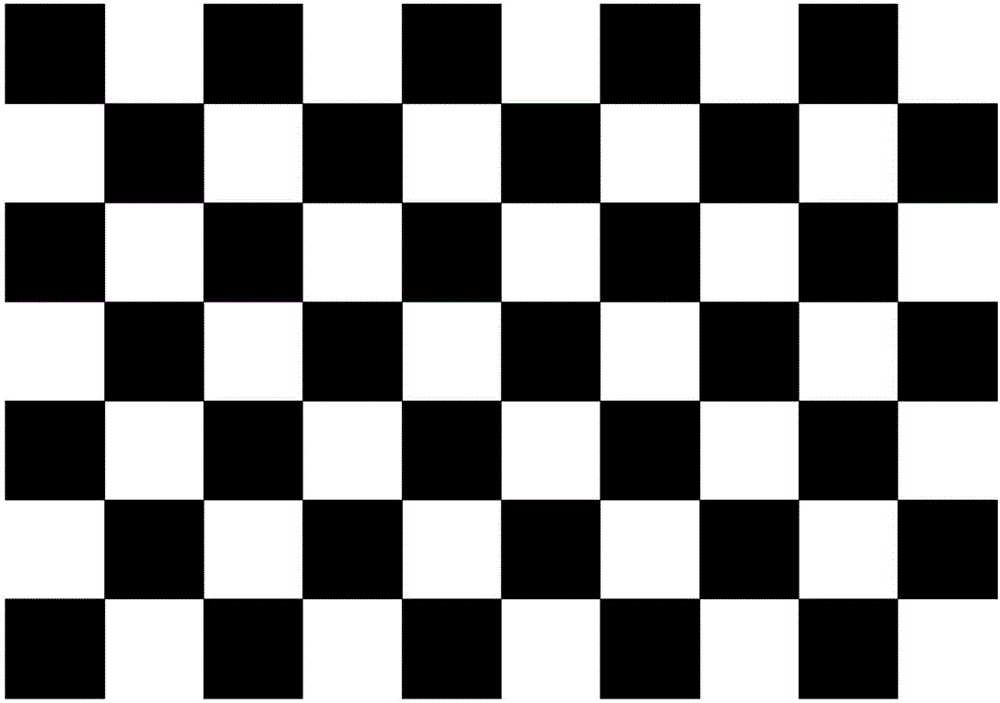

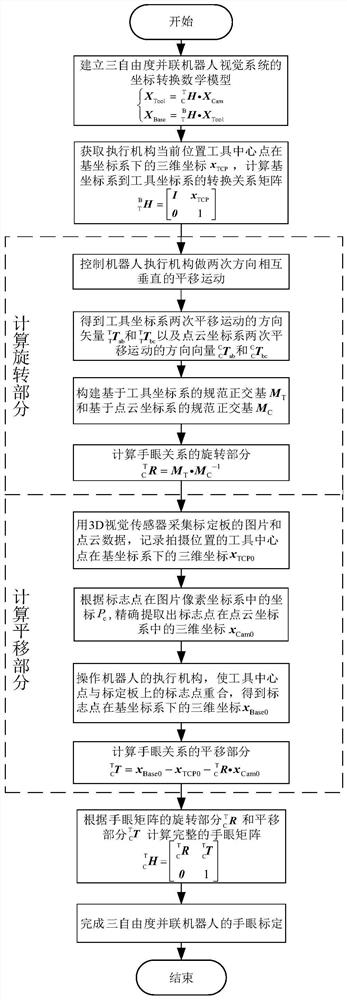

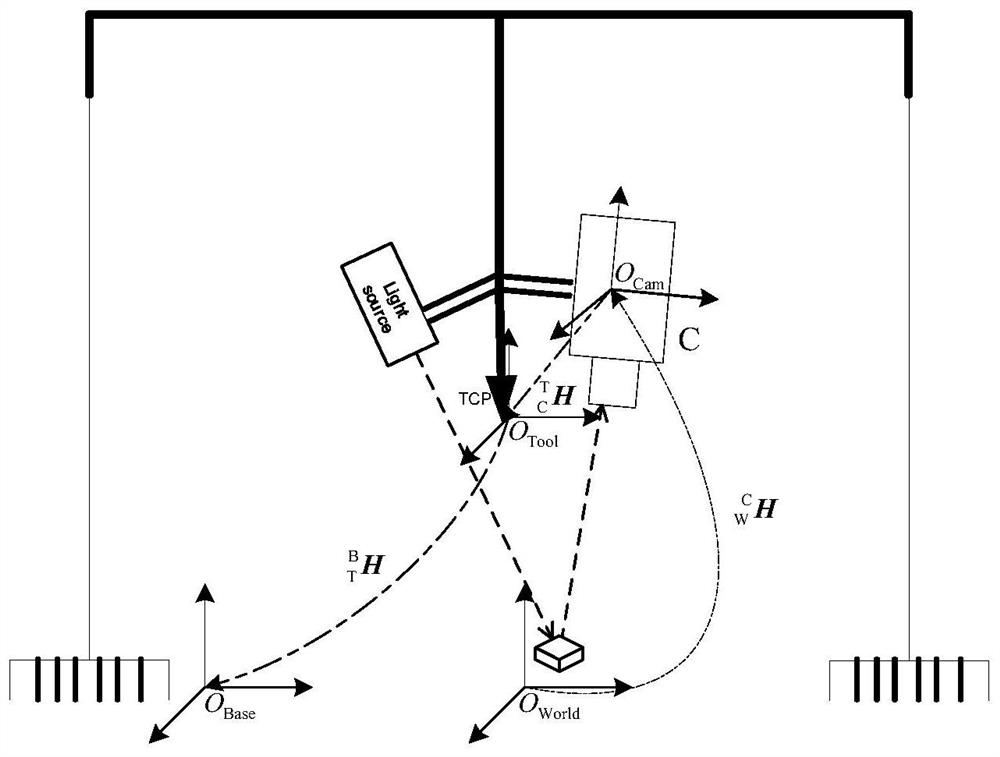

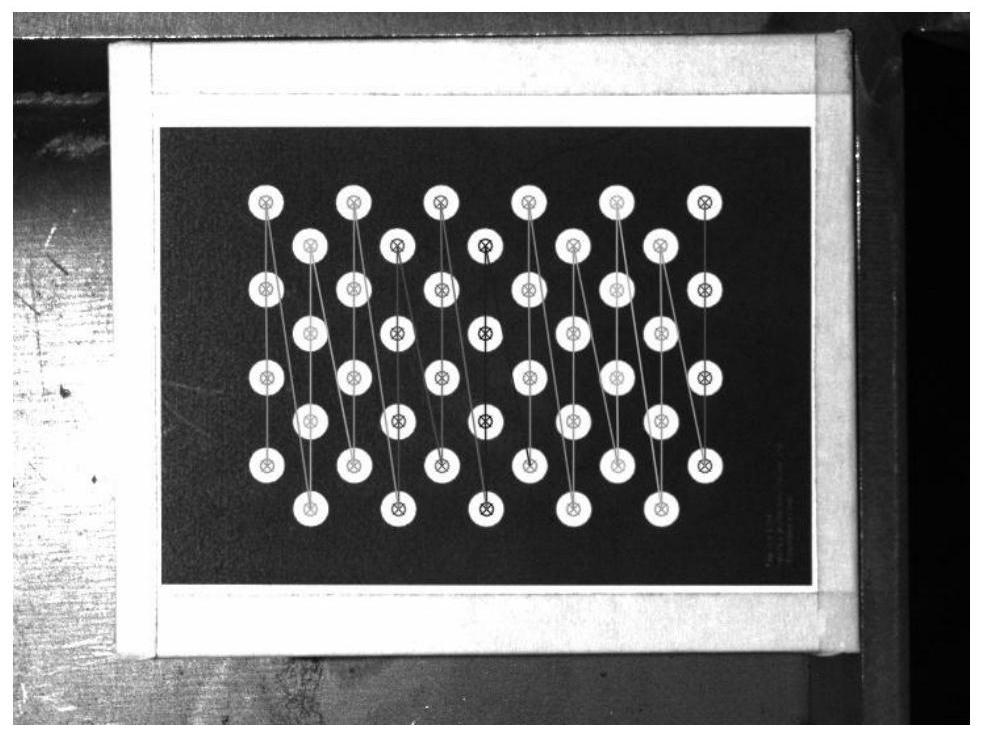

Three-degree-of-freedom parallel robot hand-eye calibration method based on 3D visual sensor

The invention discloses a hand-eye calibration method for a three-degree-of-freedom parallel robot based on a 3D vision sensor, and belongs to the field of robot vision. First, a mathematical model of the three-degree-of-freedom parallel robot vision system is established. The robot's actuator is then commanded to perform two mutually perpendicular translations, yielding two translation vectors in the robot tool coordinate system and two in the point cloud coordinate system. From these two pairs of vectors, an orthonormal basis is constructed in the tool coordinate system and another in the point cloud coordinate system, and the rotational part of the transformation between the two coordinate systems is solved. Finally, the actuator performs a contact measurement on a mark point of a calibration plate, and the translational part is solved using the rotational part already obtained. The method is suited to vision systems composed of a three-degree-of-freedom parallel robot and a 3D sensor, and achieves high precision.

Owner:GUIZHOU POWER GRID CO LTD
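
The calibration steps in the Guizhou Power Grid abstract translate fairly directly into vector algebra. In this sketch, `t1`, `t2` are the two commanded translations in the tool frame and `c1`, `c2` are the same physical displacements as measured in the point-cloud frame. This is an assumption to check against the real setup: if the sensor rides on the moving part, the measured displacement of a fixed feature has the opposite sign and must be negated first. Gram-Schmidt turns each pair into a right-handed orthonormal basis, the rotation is R = E F^T (the columns of E and F being the two bases), and the contact-measured mark point then gives the translation t = p_tool - R p_cloud. Function names are hypothetical.

```python
import math

def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]

def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def orthonormal_basis(v1, v2):
    """Gram-Schmidt on two (nearly) perpendicular vectors, completed with a cross product."""
    e1 = _normalize(v1)
    d = sum(a * b for a, b in zip(v2, e1))
    e2 = _normalize([b - d * a for a, b in zip(e1, v2)])  # remove the e1 component of v2
    return e1, e2, _cross(e1, e2)

def rotation_cloud_to_tool(t1, t2, c1, c2):
    """Rotation R with R @ c = t, built as sum_k e_k f_k^T so that R maps each f_k onto e_k."""
    E = orthonormal_basis(t1, t2)  # basis expressed in the tool frame
    F = orthonormal_basis(c1, c2)  # the same directions expressed in the cloud frame
    return [[sum(E[k][i] * F[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def mat_vec(R, p):
    return [sum(R[i][j] * p[j] for j in range(3)) for i in range(3)]

def translation_cloud_to_tool(R, mark_tool, mark_cloud):
    """Translation from one mark point known in both frames: t = p_tool - R p_cloud."""
    return [a - b for a, b in zip(mark_tool, mat_vec(R, mark_cloud))]
```

With a point-cloud frame rotated 90 degrees about z relative to the tool frame, translations `[10, 0, 0]` and `[0, 10, 0]` in the tool frame appear as `[0, -10, 0]` and `[10, 0, 0]` in the cloud frame, and the function recovers that rotation exactly. Because the second basis vector is re-orthogonalized, small errors in making the two robot moves perpendicular do not break the construction.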

© 2025 PatSnap. All rights reserved.