60 results about How to "Implement parallel computing" patented technology

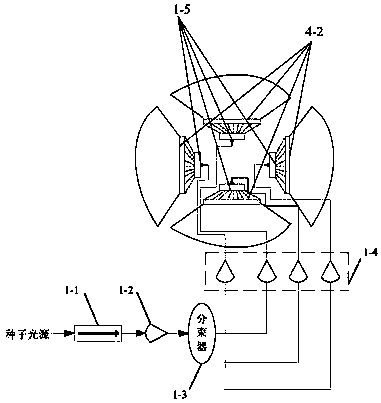

Onboard 3D imaging solid state laser radar system

Pending | CN107678040A | High precision | Increase flexibility | Electromagnetic wave reradiation | Radar systems | Beam splitter

The invention discloses an onboard 3D imaging solid state laser radar system, and relates to the field of onboard laser radars. The system addresses the slow scanning, large size, low receiving signal-to-noise ratio and low safety factor of conventional mechanical scanning laser radars. The system includes a laser device, a plurality of TR assemblies and a central processing unit. The laser device includes an optoisolator, a pre-amplifier, a beam splitter, a main amplifier array, and a beam expanding collimated light path. Each TR assembly includes a transmitting system and an echo receiving system. The transmitting system includes a unidirectional glass array and a liquid crystal polarized grating array. The echo receiving system includes an optical filter array, a gathering lens array, a photoelectric detector array and a plurality of playback circuits. According to the invention, the radar has no mechanical rotary parts, which can greatly reduce the size of the laser radar system; a single TR assembly has no processor, and all the TR assemblies are under uniform control of a central processing unit of the laser radar system, which facilitates integration; and the arrangement of the plurality of TR assemblies can cover a 360 degree horizontal field of view and a 20 degree vertical field of view.

Owner:CHANGCHUN UNIV OF SCI & TECH

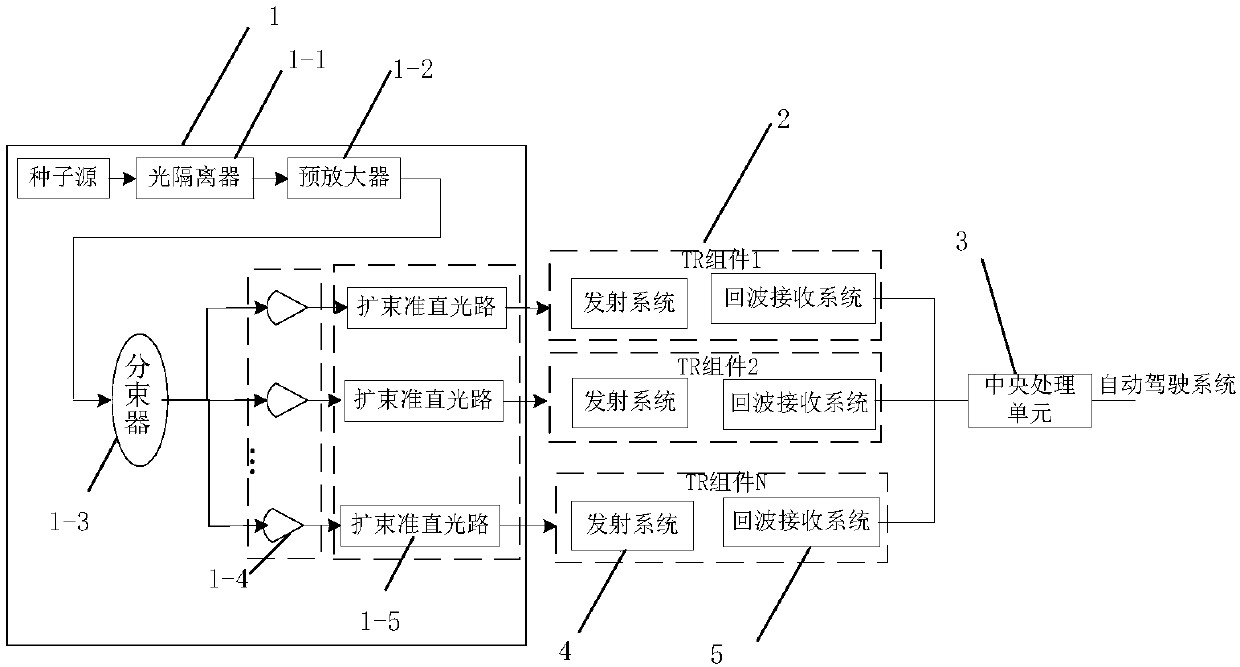

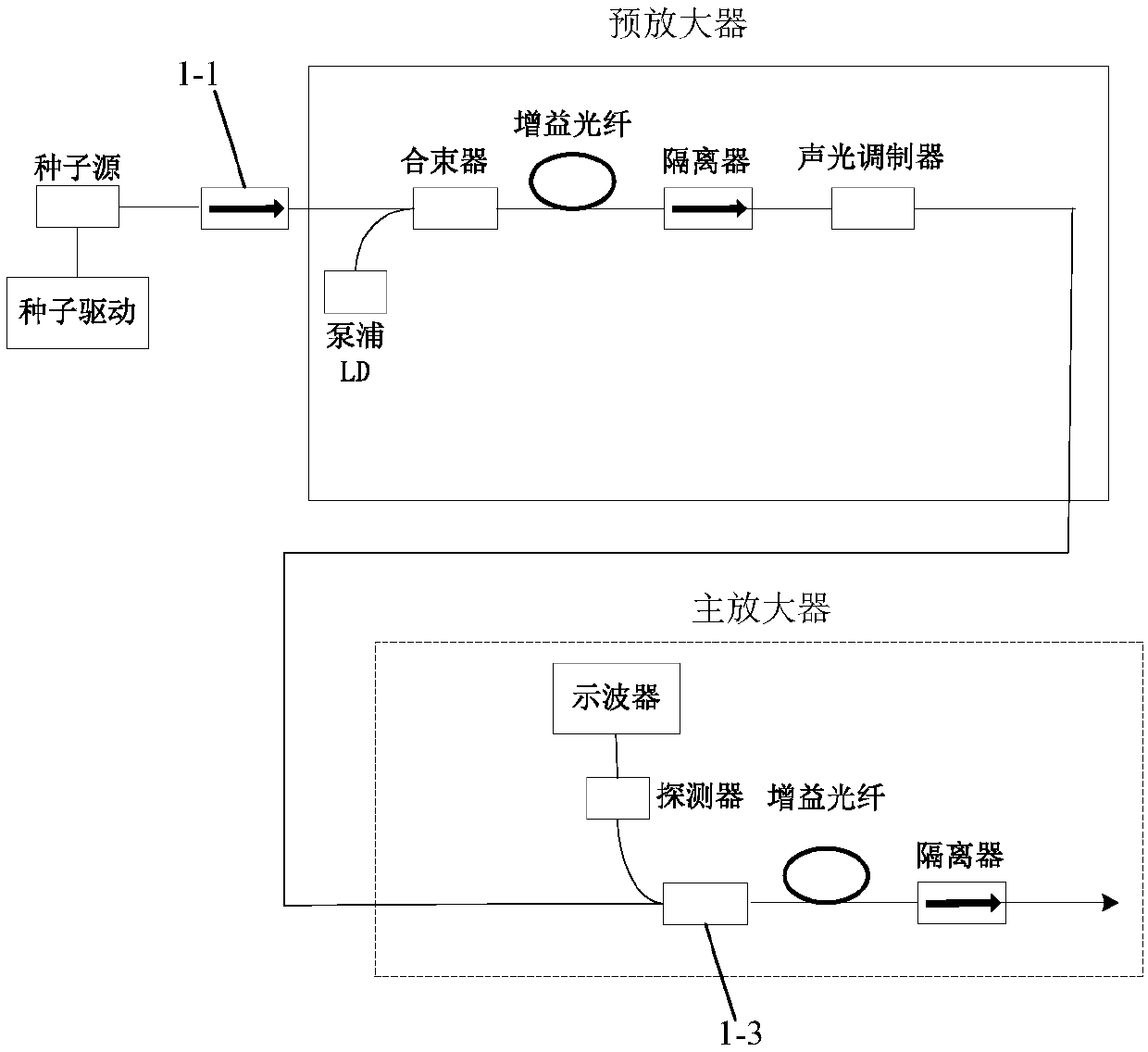

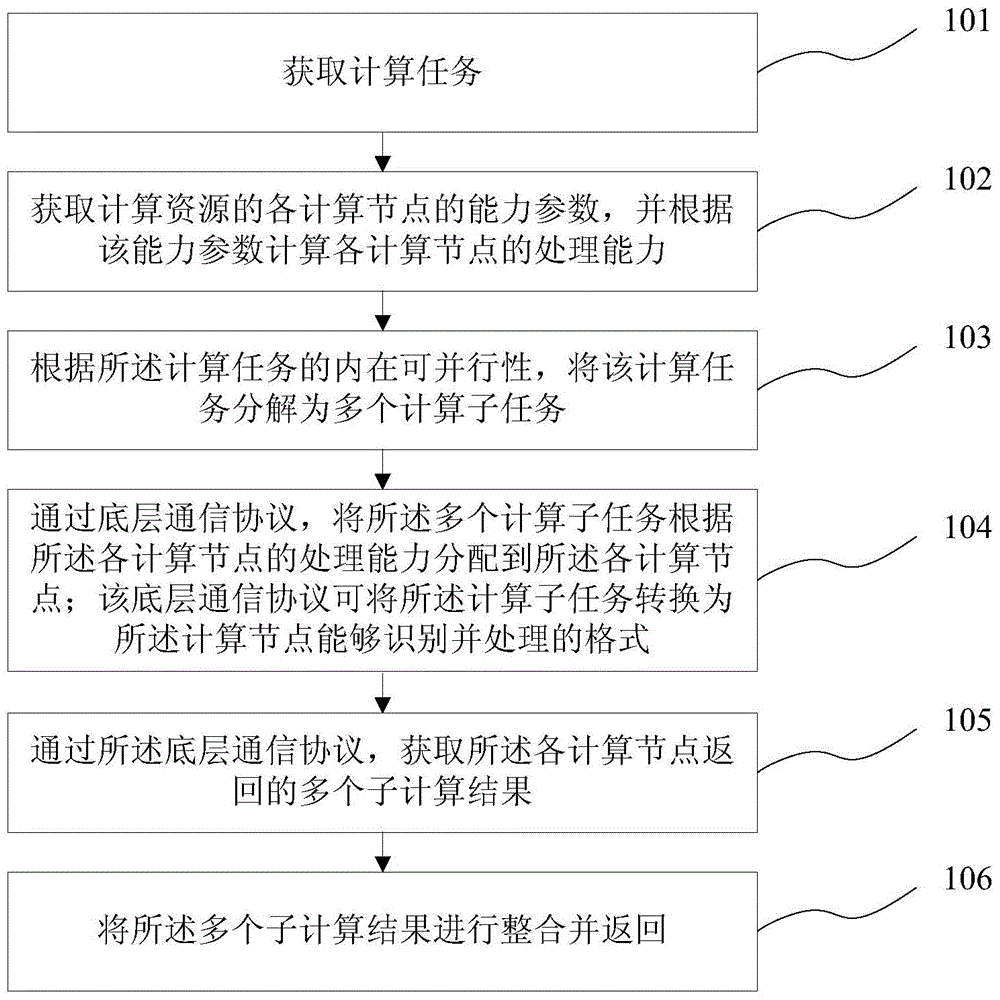

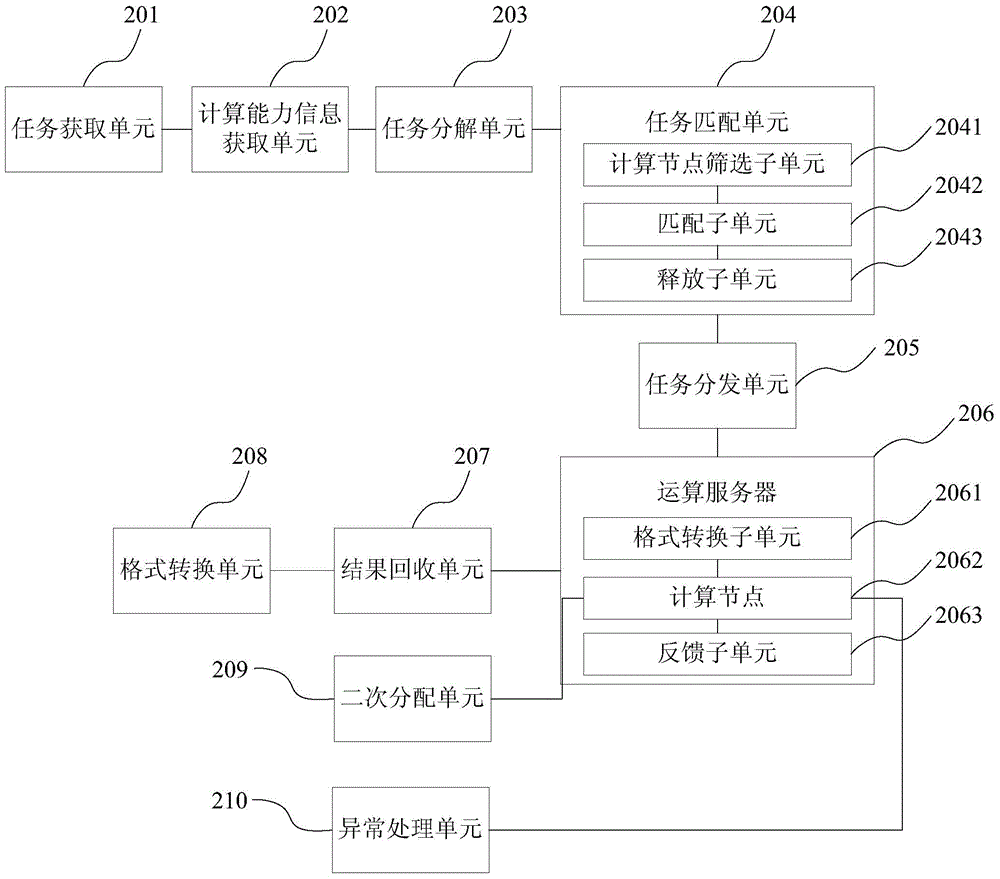

General multiprocessor parallel calculation method and system

Active | CN104598425A | Does not affect completion | Implement parallel computing | Concurrent instruction execution | Multiple digital computer combinations | Parallel computing | Data format

The invention provides a general multiprocessor parallel calculation method and system. The method comprises the following steps: a calculation task is obtained, hardware information and software information of all calculation nodes of a calculation resource are obtained, and the processing capacity of each calculation node is calculated according to the hardware information and software information; according to the internal parallelizability of the calculation task, the calculation task is decomposed into multiple sub calculation tasks; according to the processing capacity of each calculation node, the sub calculation tasks are matched with the nodes; according to a basic communication protocol, the data format of the sub calculation tasks is converted to a protocol format, and according to the matching relation, the sub calculation tasks are distributed to the calculation nodes; according to the basic communication protocol, the calculation results fed back by the calculation nodes in the protocol format are obtained, the data format of the calculation results is converted from the protocol format back to the format of the original calculation task, and the calculation results are then fed back. Multiprocessor parallel calculation in a heterogeneous environment is thereby achieved.

Owner:BC P INC CHINA NAT PETROLEUM CORP +1
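The matching step in the abstract above (sub-tasks assigned to nodes according to processing capacity) can be illustrated with a small sketch. This is not the patented method: the greedy rule, the function name `match_tasks_to_nodes`, and the numeric capacity scores are all assumptions made for illustration, and the protocol-format conversion step is left out.

```python
from typing import Dict, List


def match_tasks_to_nodes(subtasks: List[str],
                         capacity: Dict[str, float]) -> Dict[str, List[str]]:
    """Greedy capacity-aware matching (hypothetical rule, not from the patent).

    Each sub-task goes to the node whose load-to-capacity ratio would be
    lowest after receiving it, so faster nodes end up with more sub-tasks.
    """
    assignment: Dict[str, List[str]] = {node: [] for node in capacity}
    for task in subtasks:
        # Ties are broken by the iteration order of `capacity`.
        node = min(capacity,
                   key=lambda n: (len(assignment[n]) + 1) / capacity[n])
        assignment[node].append(task)
    return assignment


if __name__ == "__main__":
    # Hypothetical capacities: node "a" is three times as capable as "b".
    plan = match_tasks_to_nodes(["t0", "t1", "t2", "t3"], {"a": 3.0, "b": 1.0})
    for node, tasks in plan.items():
        print(node, tasks)
```

With the example capacities, node "a" receives three of the four sub-tasks and node "b" receives one, which mirrors the 3:1 capacity ratio.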

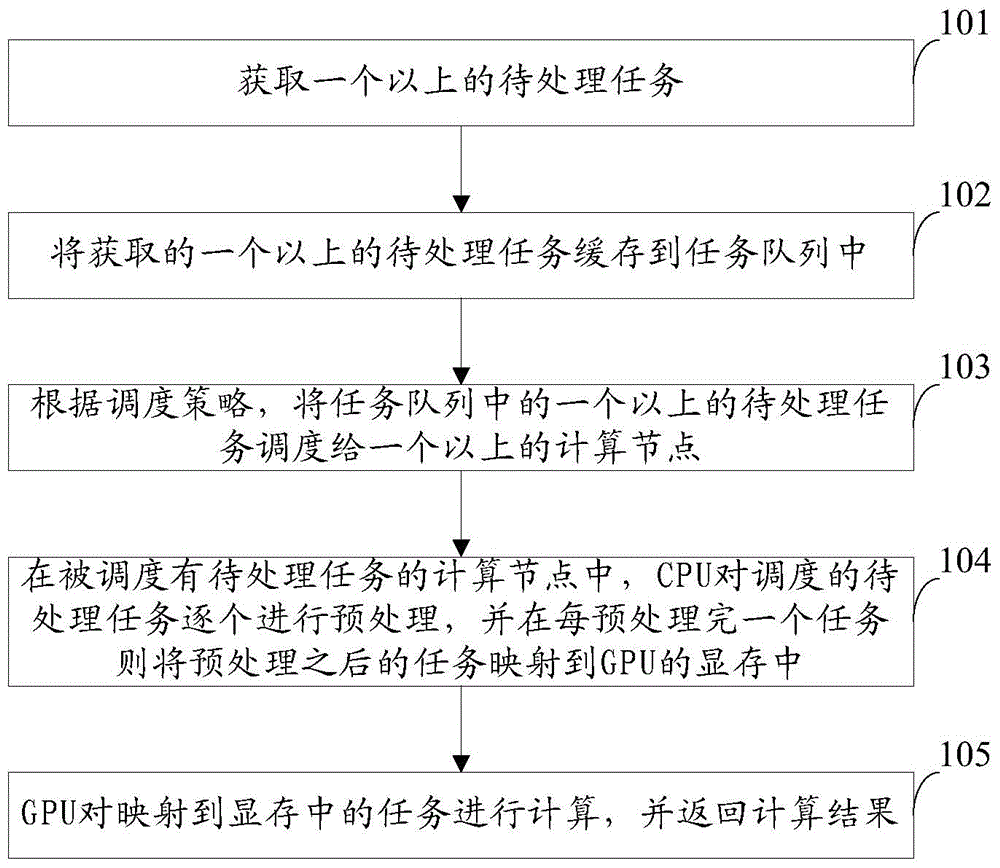

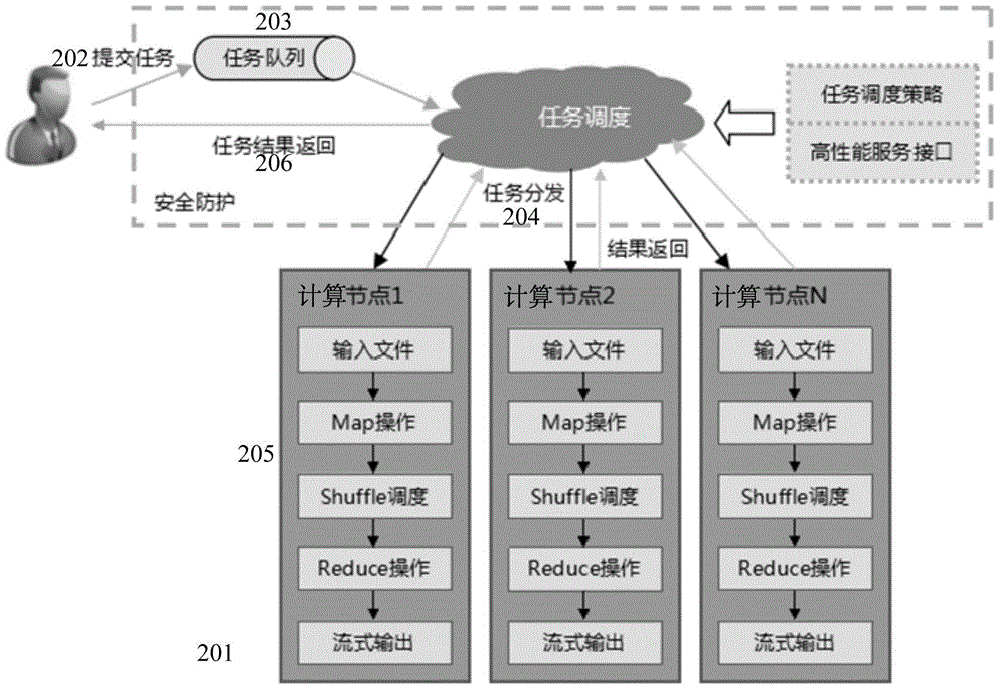

Hybrid parallel computing method and device for CPUs/GPUs

Inactive | CN104965689A | Implement parallel computing | Improve computing efficiency | Concurrent instruction execution | Video memory | Distributed computing

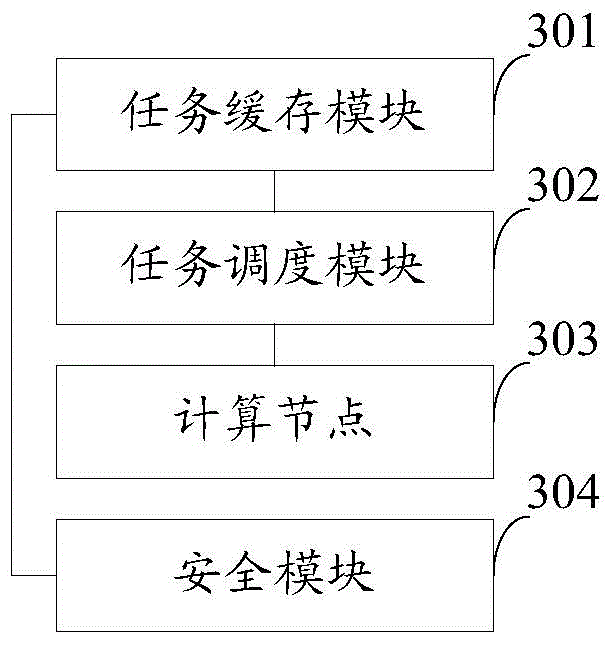

The invention provides a hybrid parallel computing method and device for CPUs / GPUs. The method comprises the following steps: utilizing more than one computing node to establish a computing cluster and determining a scheduling policy, given that each computing node comprises a CPU and a GPU; acquiring more than one waiting task; caching the acquired waiting tasks in a task queue; scheduling the waiting tasks in the task queue to the computing nodes; in each computing node that has been scheduled waiting tasks, pre-processing the scheduled tasks one by one on the CPU and mapping each task to the video memory of the GPU as soon as it has been pre-processed; and computing the tasks mapped to video memory on the GPU and returning the computed results. According to the scheme, the computing efficiency of the computing nodes is increased.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

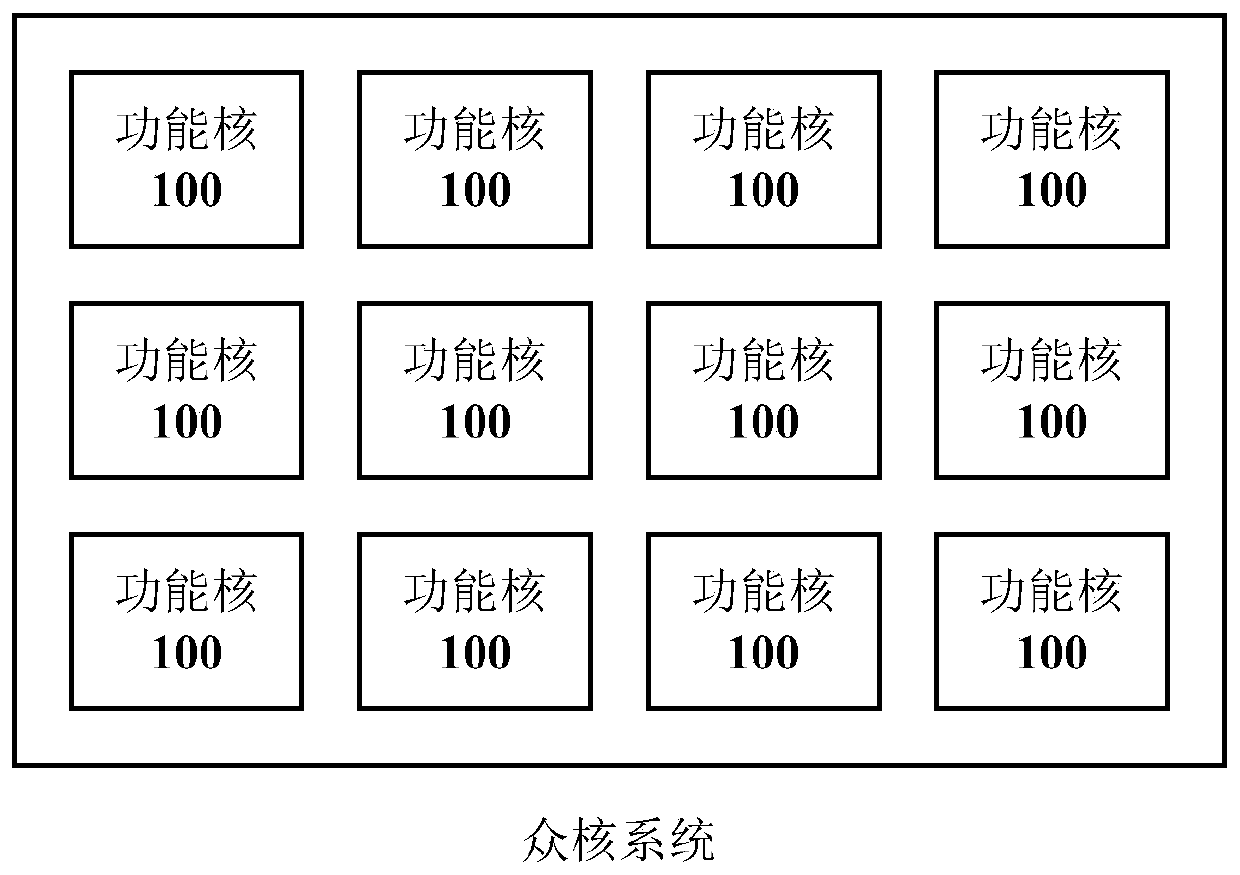

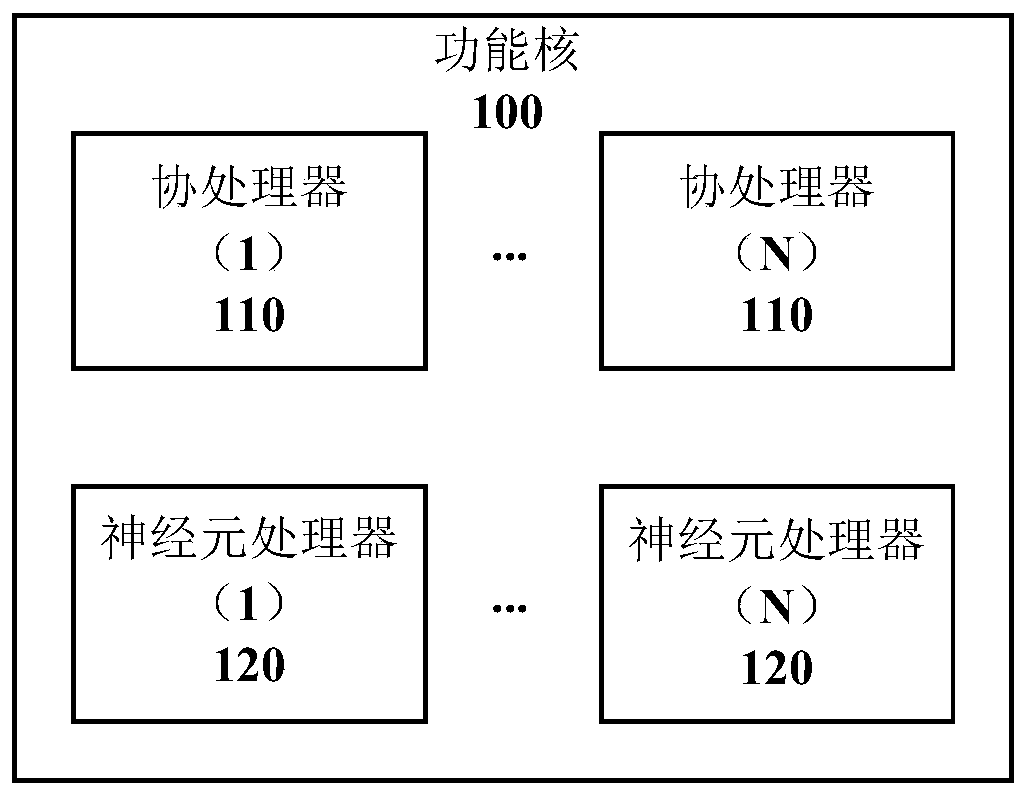

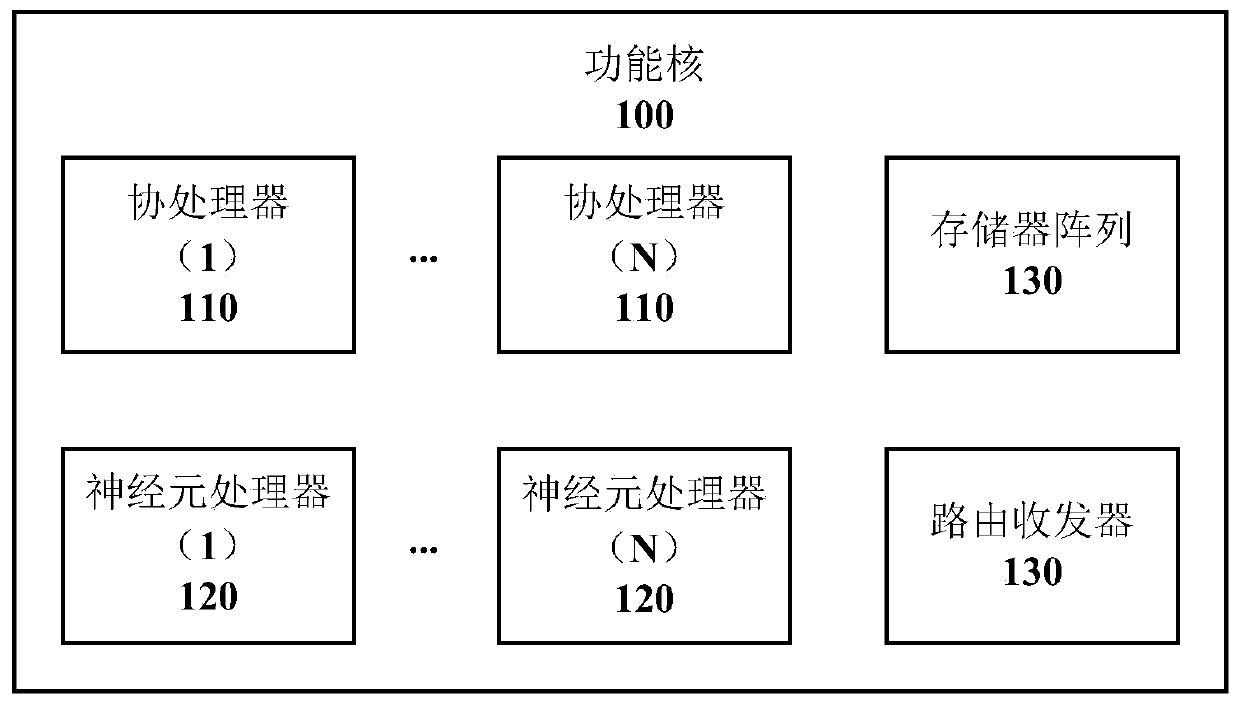

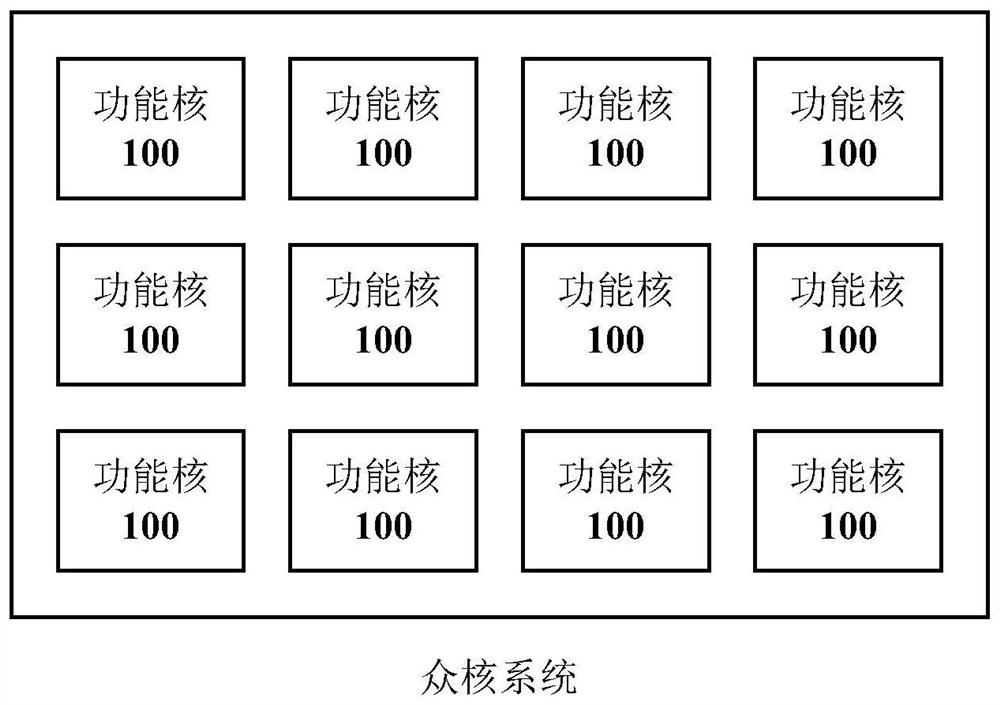

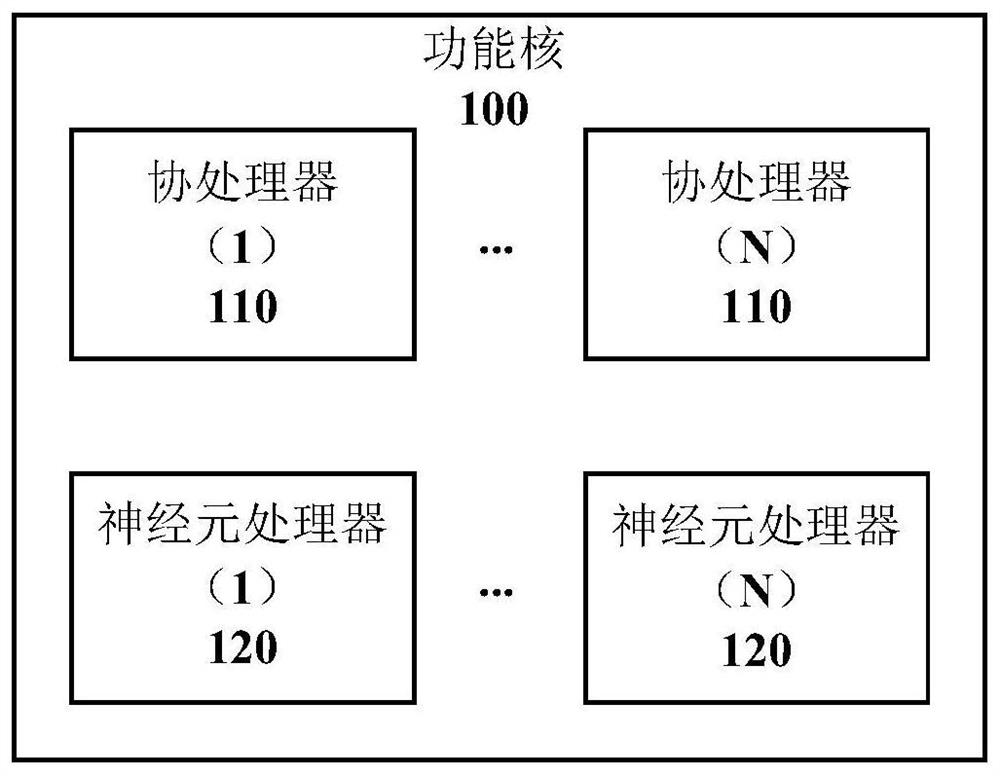

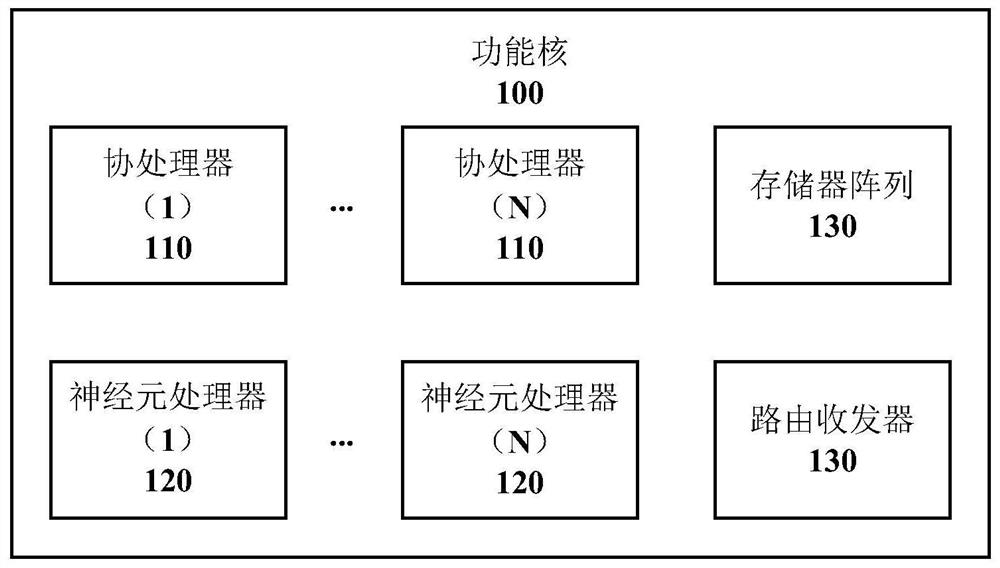

A brain-like computing chip and computing equipment

Active | CN109901878A | Solve efficiency problems | Address flexibility | Digital data processing details | Neural architectures | Rich model | Coprocessor

The invention provides a brain-like computing chip and computing equipment. The brain-like computing chip comprises a many-core system composed of one or more functional cores, and the functional cores perform data transmission through a network-on-chip; each functional core comprises at least one neuron processor used for calculating a plurality of neuron models, and at least one coprocessor which is coupled with the neuron processor and is used for executing an integral operation and / or a multiply-add operation; wherein the neuron processor can call the coprocessor to execute multiply-add type operations. The brain-like computing chip provided by the invention is used for efficiently realizing various neuromorphic algorithms, especially for the computing characteristics of rich SNN models, which combine synaptic computing with high computing density and cell body computing with high flexibility requirements.

Owner:LYNXI TECH CO LTD

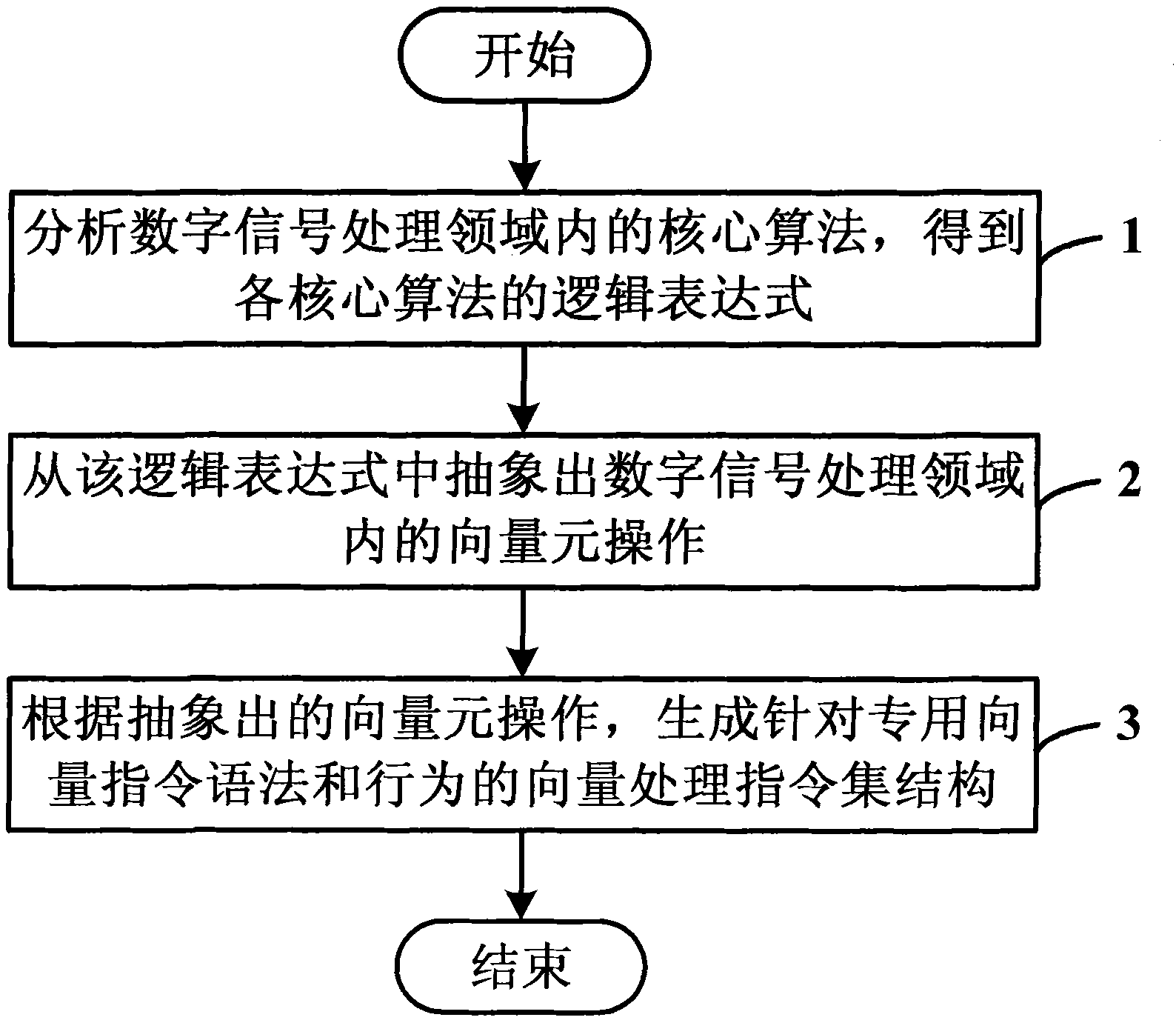

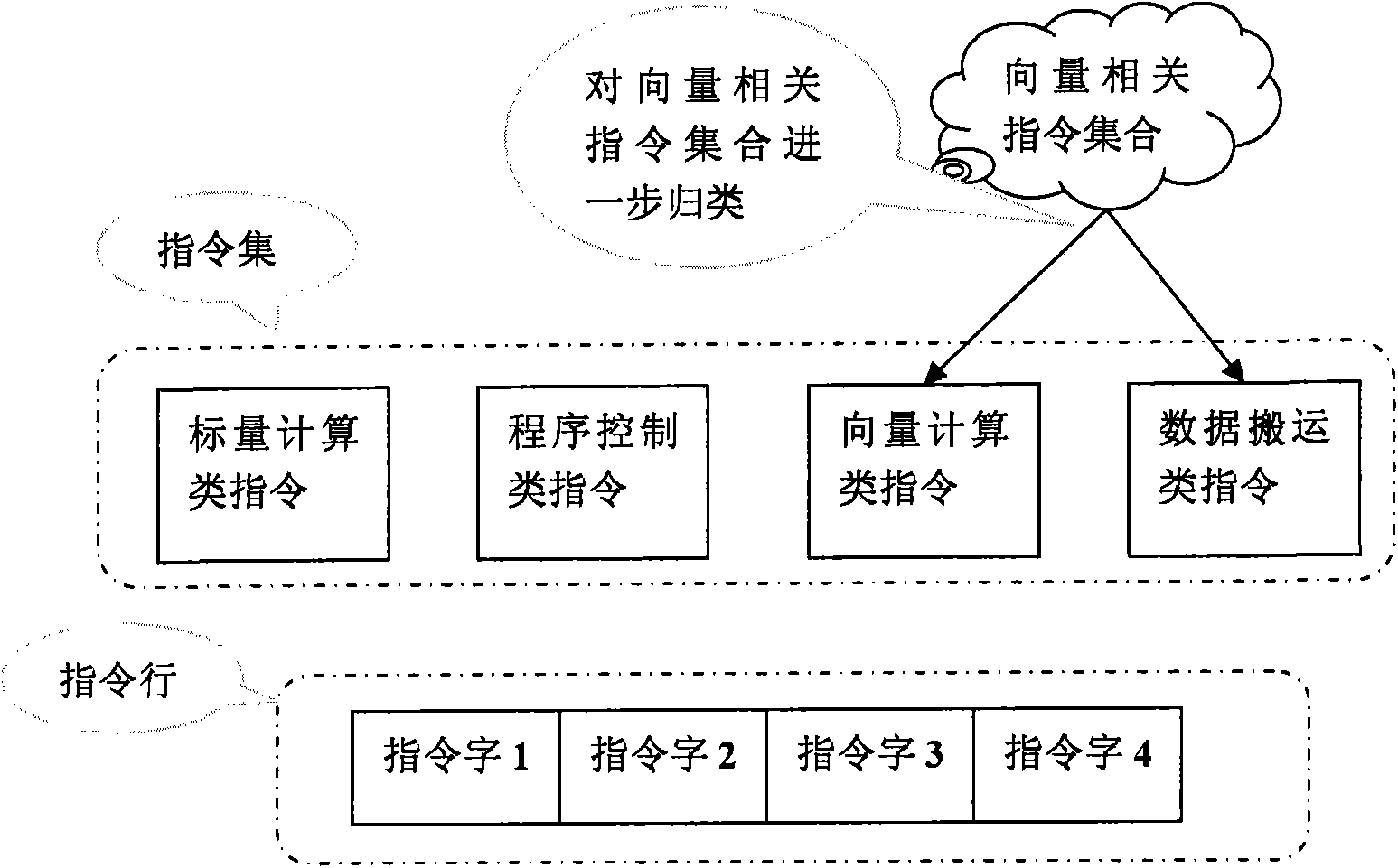

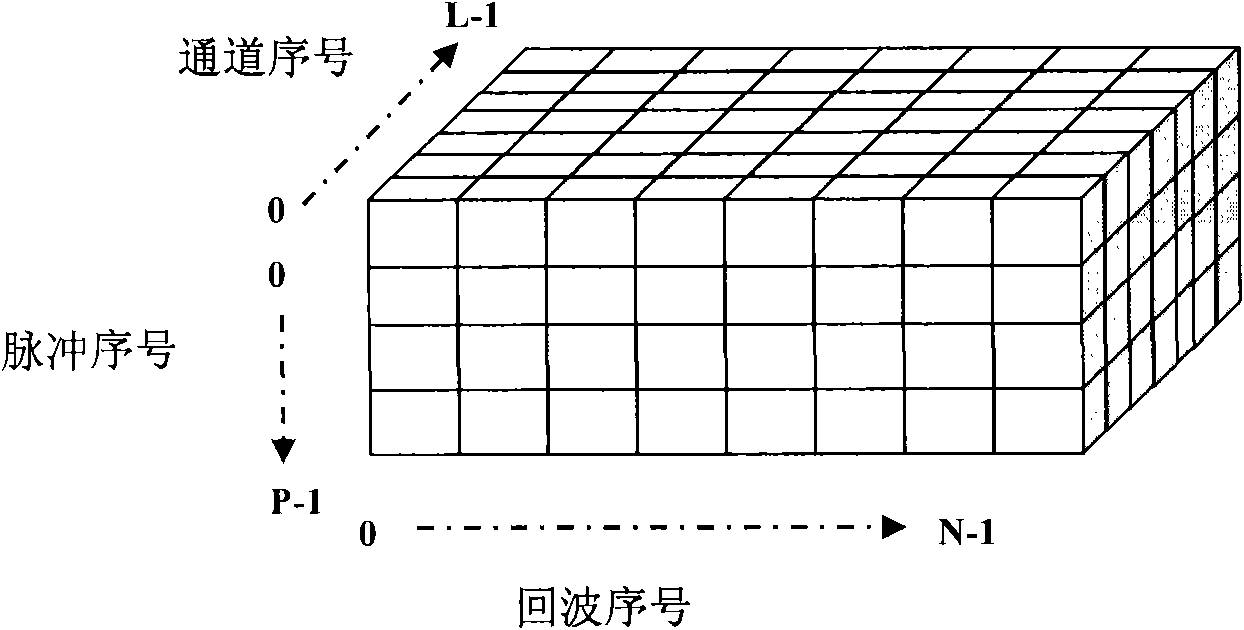

Method for generating vector processing instruction set architecture in high performance computing system

Active | CN101833468A | Implement parallel computing | Efficient design | Program control | Memory systems | High performance computation | Syntax

The invention discloses a method for generating a vector processing instruction set architecture in a high performance computing system, which comprises the following steps of: 1. analyzing core algorithms in a digital signal processing field to obtain the logical expression of each core algorithm; 2. abstracting element vector operation in the digital signal processing field from the logical expressions; and 3. generating the vector processing instruction set architecture specific to special vector instruction syntax and behaviors based on the abstracted element vector operation. The invention generates the vector processing instruction set applicable to the special field, thereby greatly improving the performance of the processor.

Owner:BEIJING SMART LOGIC TECH CO LTD

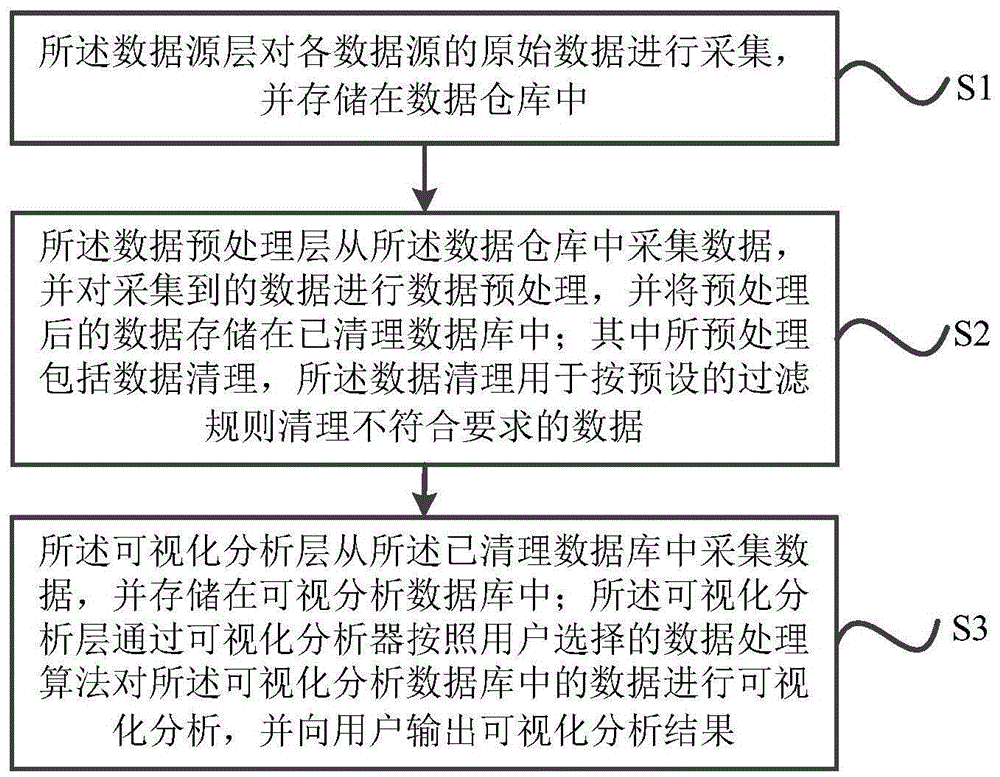

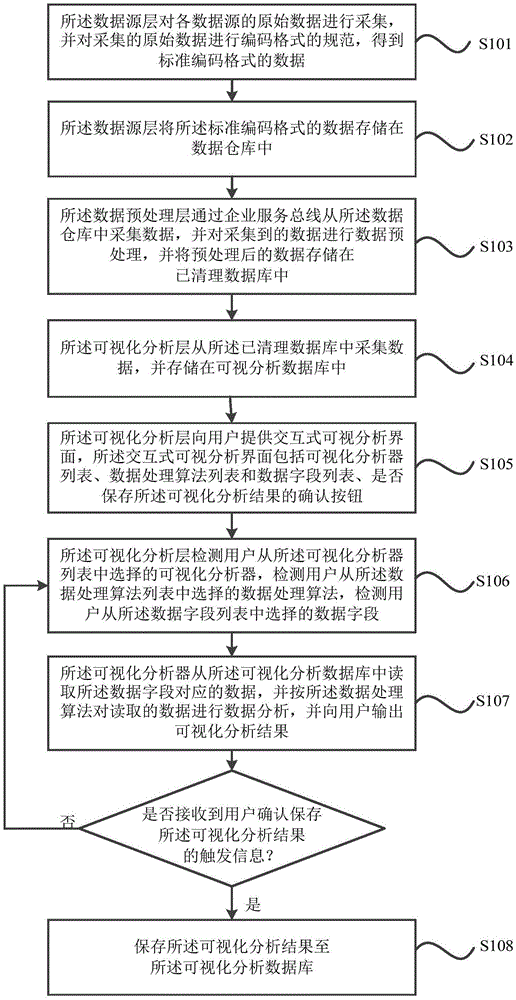

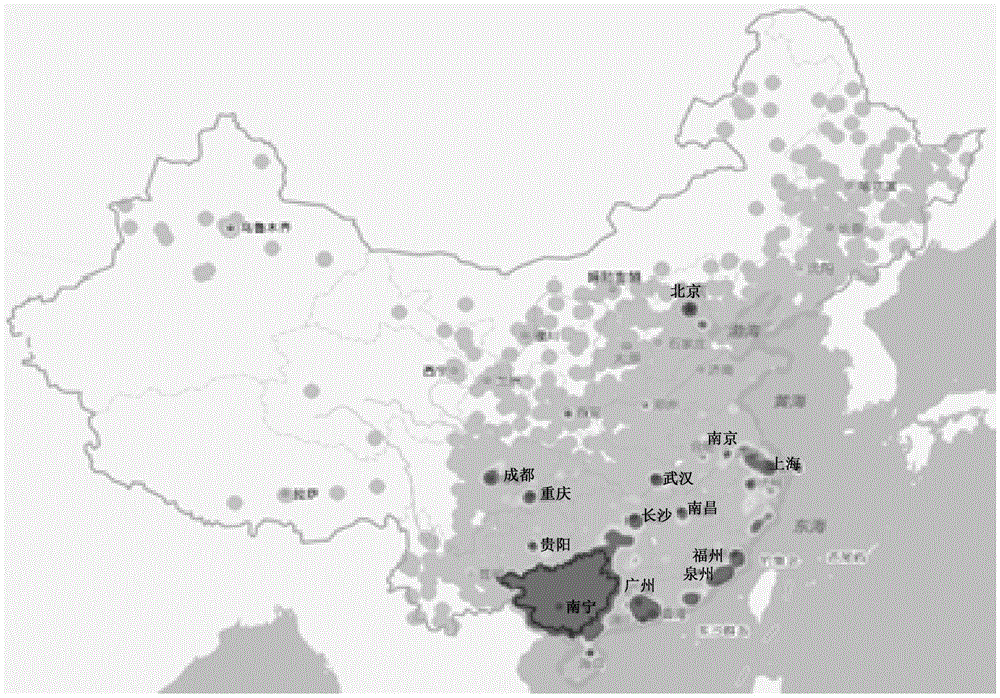

Data visualization analysis method and system for enterprise business intelligence

Inactive | CN105631027A | Implement parallel computing | Achieving processing power | Database management systems | Special data processing applications | Analysis data | Data processing

The invention particularly relates to a data visualization analysis method and system for enterprise business intelligence. The method is applied to a visualization analysis system comprising a data source layer, a data preprocessing layer and a visualization analysis layer. The data source layer collects original data of all data sources, and the data is stored in a data warehouse. The data preprocessing layer collects the data from the data warehouse, preprocesses the collected data and stores the preprocessed data into a cleared database. The visualization analysis layer carries out visualization analysis on data in a visualization analysis database through a visualization analyzer according to a data processing algorithm selected by a user, and outputs a visualization analysis result to the user. According to the technical scheme, data distributed management, parallel computing, visualization analysis and presentation can be achieved.

Owner:CHINA AGRI UNIV

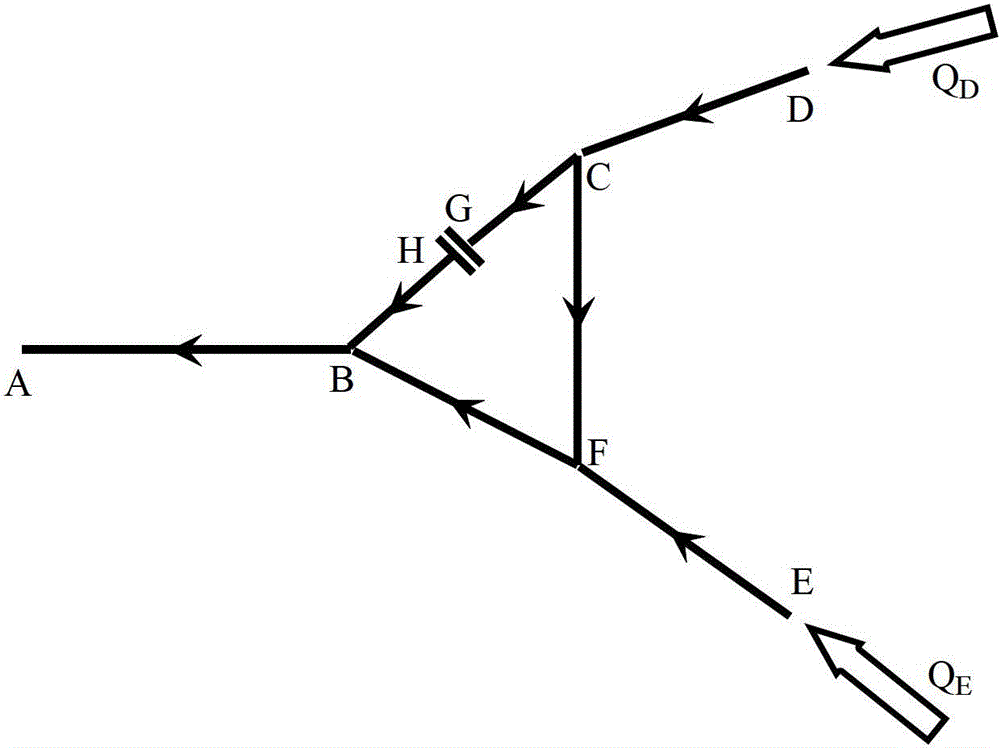

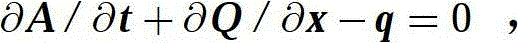

Method for carrying out parallelization numerical simulation on hydrodynamic force conditions of river provided with cascade hydropower station

Inactive | CN102722611A | Improve versatility | Improve portability | Special data processing applications | Information technology support system | Engineering | Water level

Owner:TSINGHUA UNIV

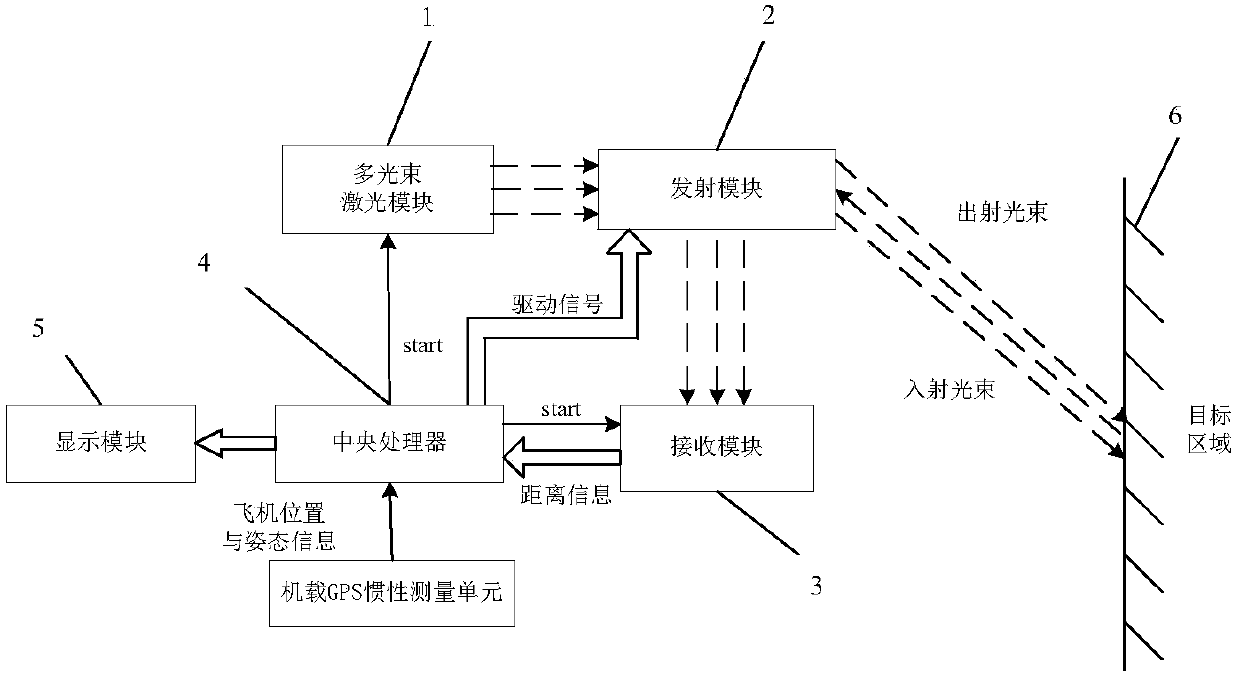

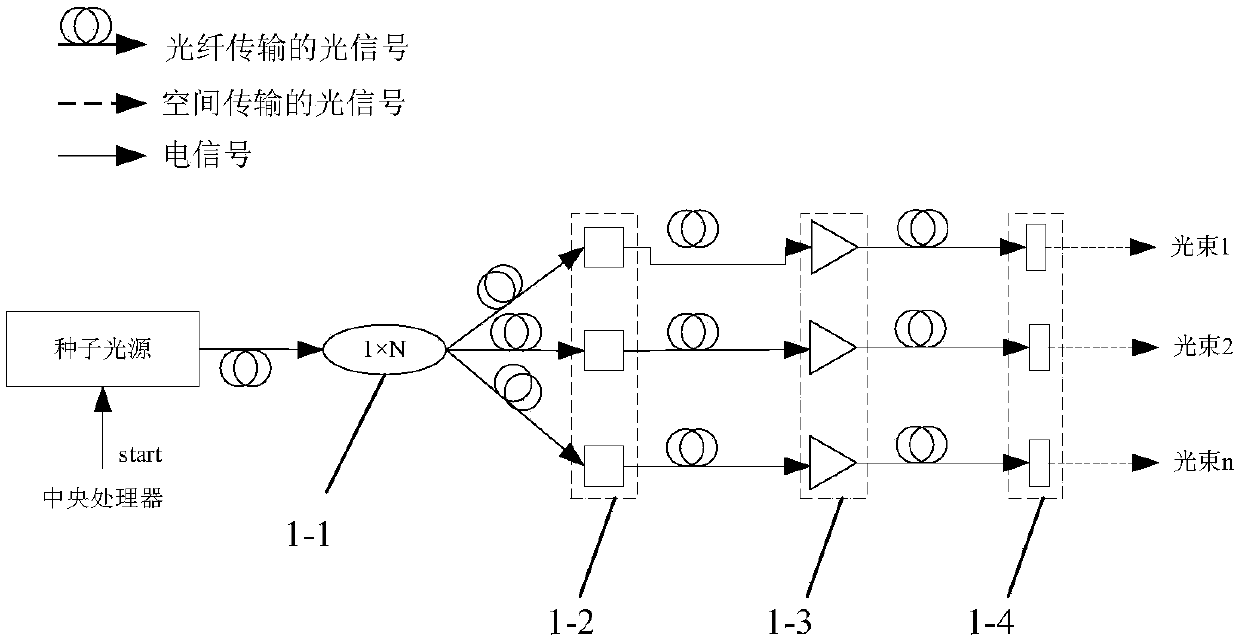

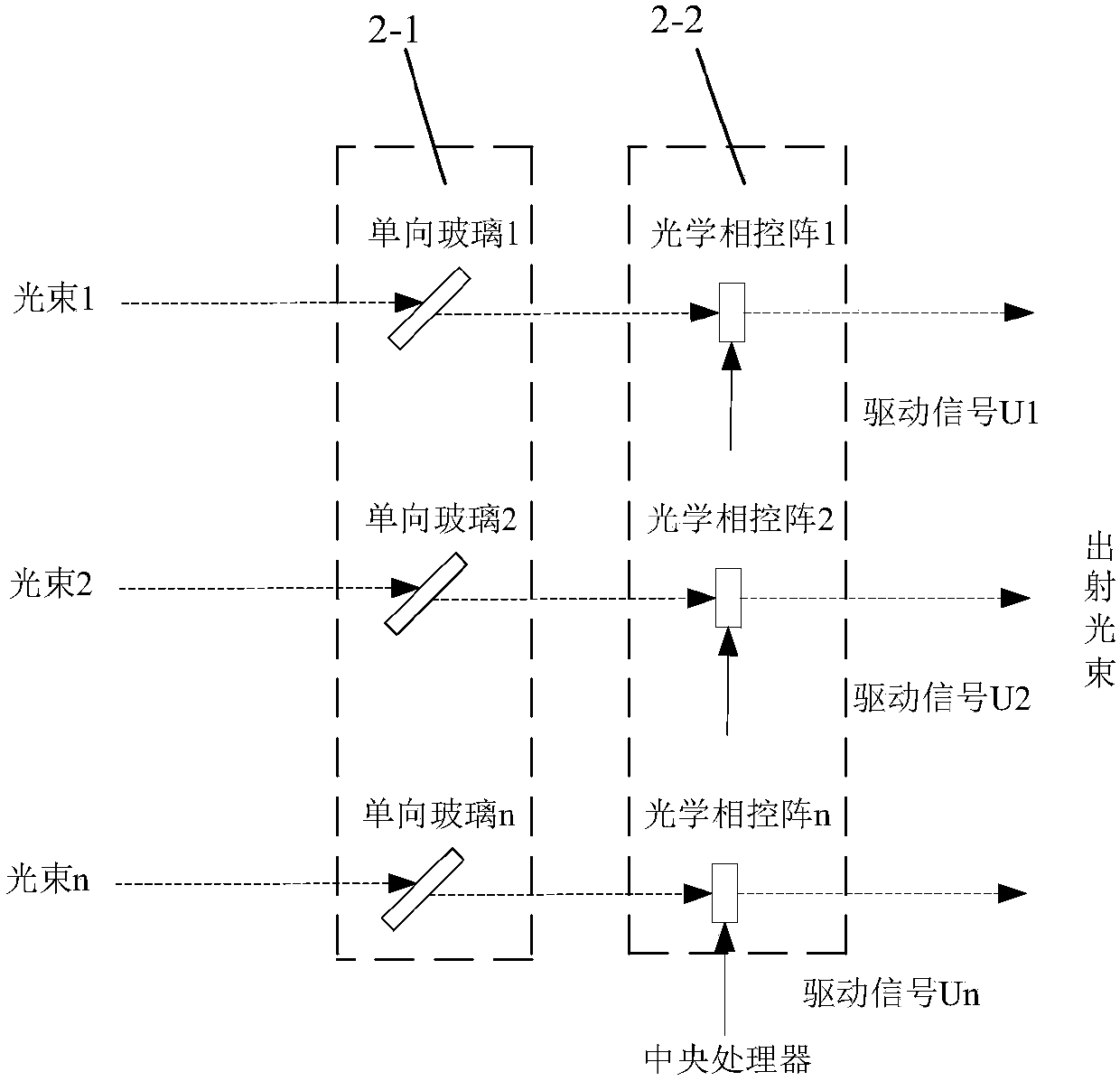

On-vehicle multiple-beam optical phased array laser three-dimensional imaging radar system

Pending | CN107703517A | Improve detection distance | Increase the detection area | Electromagnetic wave reradiation | ICT adaptation | Physics | Visual field loss

An on-vehicle multiple-beam optical phased array laser three-dimensional imaging radar system relates to the technical field of radar engineering and settles problems of short-distance object detection and low imaging frame frequency in an existing laser three-dimensional imaging system. The on-vehicle multiple-beam optical phased array laser three-dimensional imaging radar system comprises a multi-beam laser module, a transmitting module, a receiving module, a central processor and a distance measuring unit. The multi-beam laser module comprises a beam splitter, a phase modulator array, a fiber amplifier array and a collimating beam spreading array. The transmitting module comprises a unidirectional glass array and an optical phased array. The receiving module comprises an optical filter array, a double-bonding-lens array, a surface array APD array, a quenching circuit and a distance measuring unit. A ground objective area is scanned through multiple laser beams, thereby obtaining corresponding point cloud data. The obtained data are processed, thereby realizing objective three-dimensional image reconstruction with the advantages of high frame frequency, high resolution and large visual field. The task of detecting a ground object is completed in real time at high speed. The on-vehicle multiple-beam optical phased array laser three-dimensional imaging radar system has the advantages of high measurement speed and high measurement precision.

Owner:CHANGCHUN UNIV OF SCI & TECH

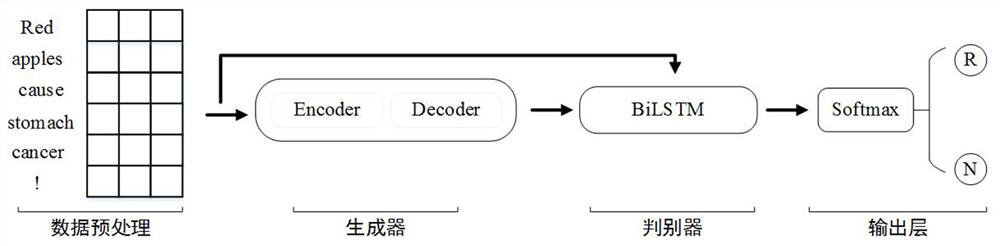

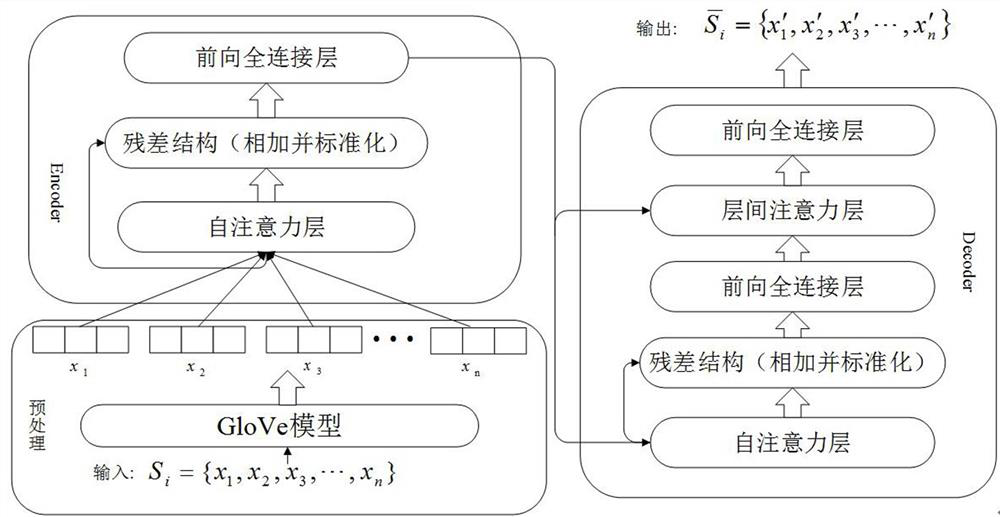

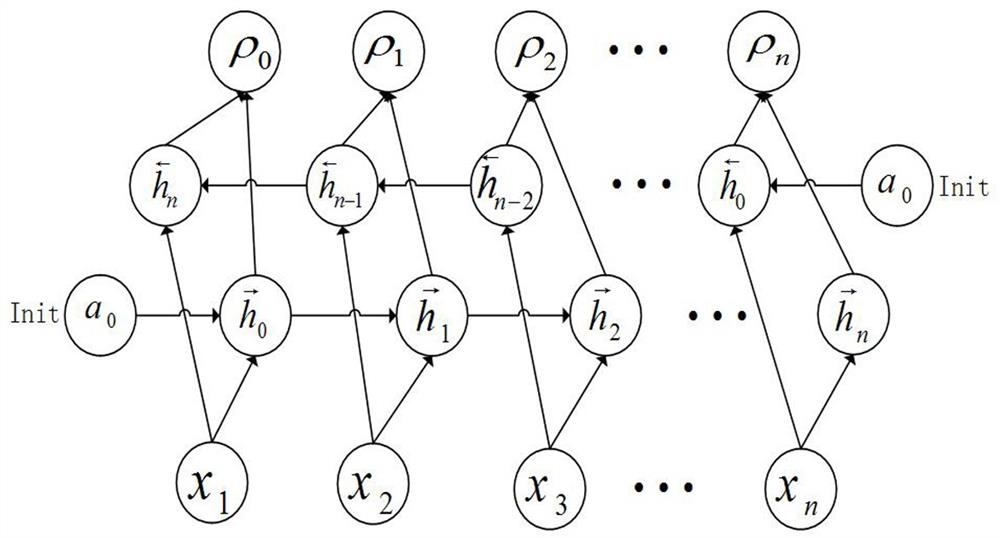

Rumor detection method combining self-attention mechanism and generative adversarial network

Active | CN112069397A | Improve detection accuracy | Improve robustness | Digital data information retrieval | Semantic analysis | Data set | Algorithm

The invention discloses a rumor detection method combining a self-attention mechanism and a generative adversarial network. The rumor detection method comprises the steps of collecting rumor text data to form a rumor data set; based on a self-attention mechanism, constructing a generative adversarial network generator comprising a self-attention layer; constructing a discriminator network, and respectively carrying out rumor detection and classification on the original rumor text and the text decoded by the generator; training the generative adversarial network, and adjusting model parameters of a generator and model parameters of a discriminator; and extracting a discriminator network of the generative adversarial network, and performing rumor detection on the to-be-detected text. Compared with an existing rumor detection method, the rumor detection method is higher in detection precision and better in robustness; a self-attention layer is adopted in the generator, key features are constructed through semantic learning of rumor samples, text examples rich in expression features are generated to simulate information loss and confusion in the rumor propagation process, and the semantic feature recognition capacity of the discriminator is enhanced through adversarial training.

Owner:CHINA THREE GORGES UNIV

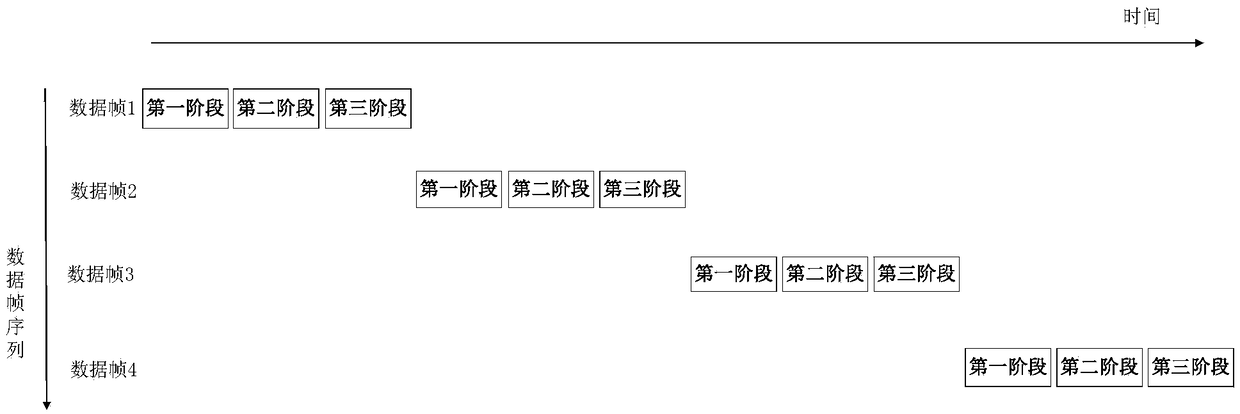

Data processing method and device based on AI chip

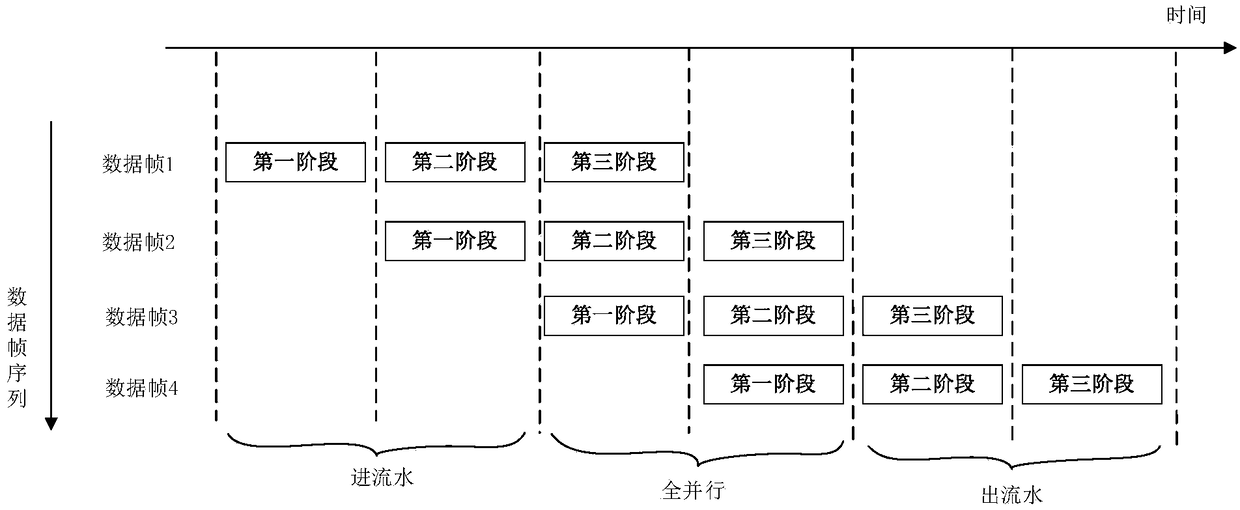

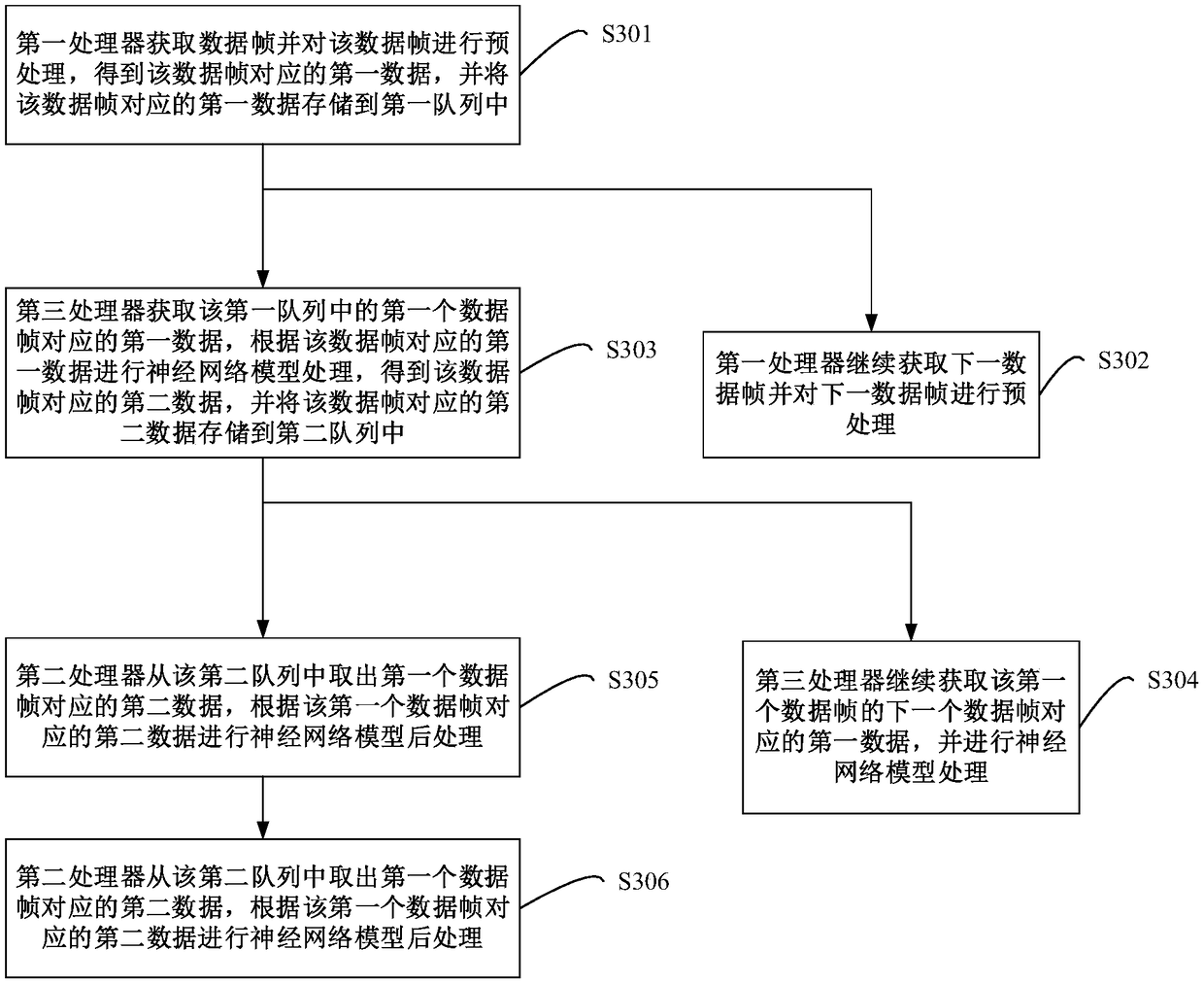

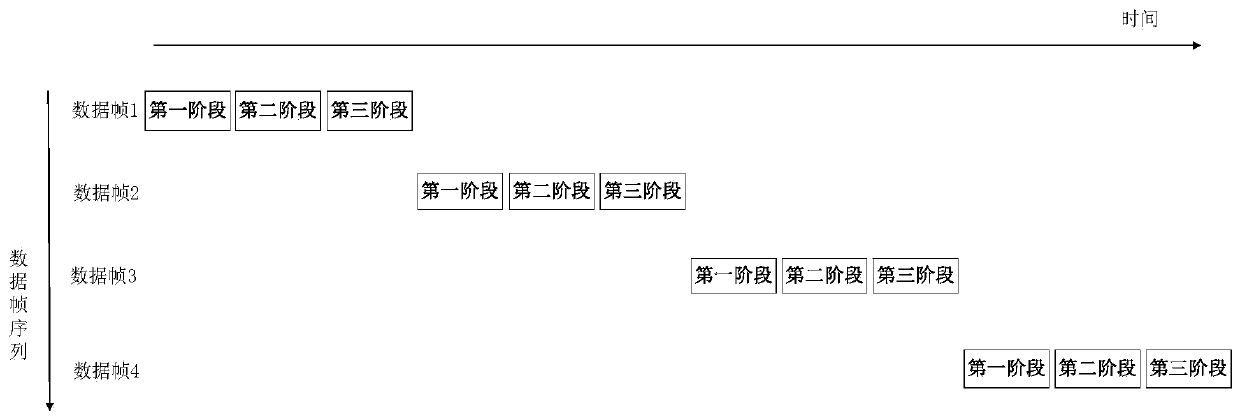

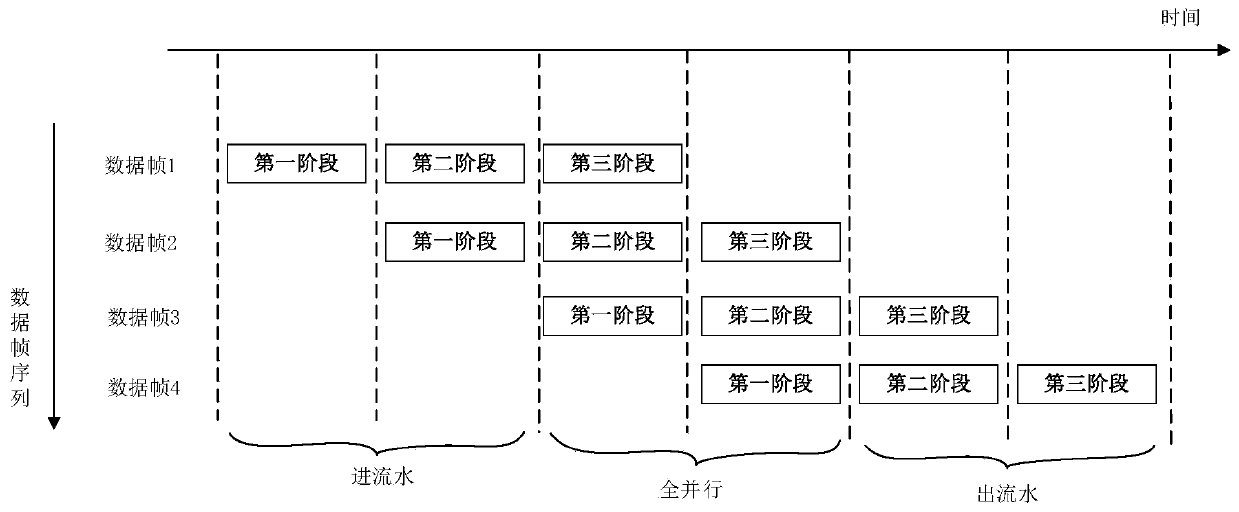

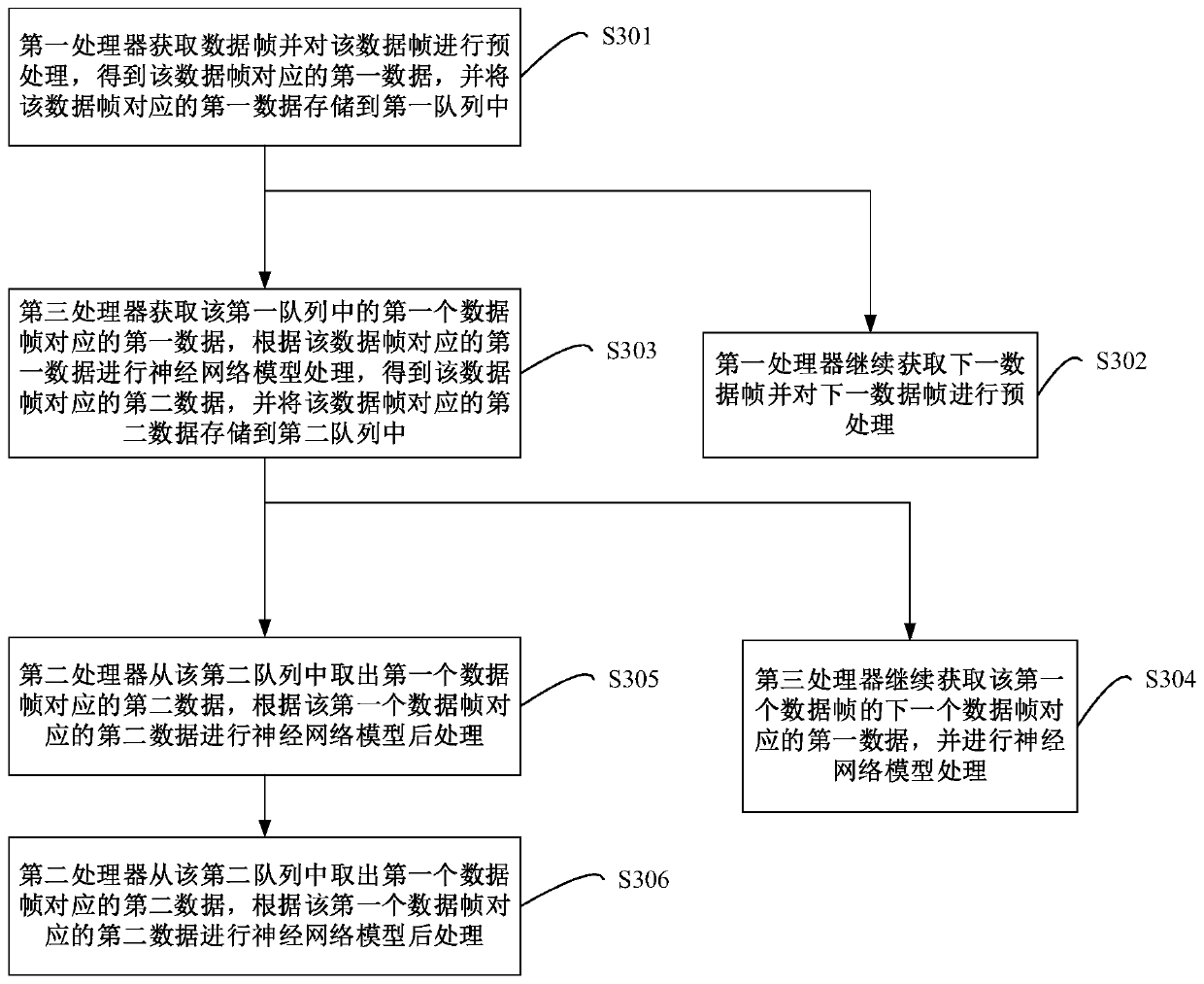

Active | CN108985451A | Improve data processing efficiency | Increase frame rate | Character and pattern recognition | Physical realisation | Computer hardware | Three stage

The invention provides a data processing method and device based on an AI chip. The method of the invention divides the data processing pipeline of the AI chip into the following three stages of processing: data acquisition and pretreatment, neural network model processing and neural network model post-processing. The processing of the three phases is of a parallel pipeline structure. The AI chip comprises at least a first processor, a second processor and a third processor. The first processor is used for data acquisition and preprocessing, the third processor is used for neural network model processing, and the second processor is used for neural network model post-processing. The first processor, the second processor and the third processor simultaneously carry out the processing of the three stages, thereby reducing the mutual waiting time of the processors, maximizing the parallel computation of each processor, improving the efficiency of data processing of the AI chip, and then improving the frame rate of the AI chip.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
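The three-stage parallel pipeline described in the abstract above can be mimicked in software with one worker per stage connected by queues. The sketch below is an illustration only: it uses Python threads in place of the chip's three processors, and the names `run_pipeline`, `stage` and the stage callables are hypothetical. Python threads show the structure of the pipeline rather than a real speedup for CPU-bound work.

```python
import queue
import threading

_STOP = object()  # sentinel that tells a stage to shut down


def stage(fn, inbox, outbox):
    """Apply fn to every item from inbox and forward the result to outbox."""
    while True:
        item = inbox.get()
        if item is _STOP:
            outbox.put(_STOP)  # propagate shutdown to the next stage
            return
        outbox.put(fn(item))


def run_pipeline(frames, preprocess, infer, postprocess):
    """Run three stages concurrently; frame k+1 can be pre-processed
    while frame k is in inference and frame k-1 is in post-processing."""
    q0, q1, q2, q3 = (queue.Queue() for _ in range(4))
    workers = [
        threading.Thread(target=stage, args=(preprocess, q0, q1)),
        threading.Thread(target=stage, args=(infer, q1, q2)),
        threading.Thread(target=stage, args=(postprocess, q2, q3)),
    ]
    for worker in workers:
        worker.start()
    for frame in frames:
        q0.put(frame)
    q0.put(_STOP)

    results = []
    while True:
        item = q3.get()
        if item is _STOP:
            break
        results.append(item)
    for worker in workers:
        worker.join()
    return results


if __name__ == "__main__":
    # Stand-in stage functions; a real system would run model code here.
    print(run_pipeline([1, 2, 3], lambda x: x + 1, lambda x: x * 2, lambda x: x - 1))
```

Because each stage is a single worker reading from a FIFO queue, results come out in the same order as the input frames.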

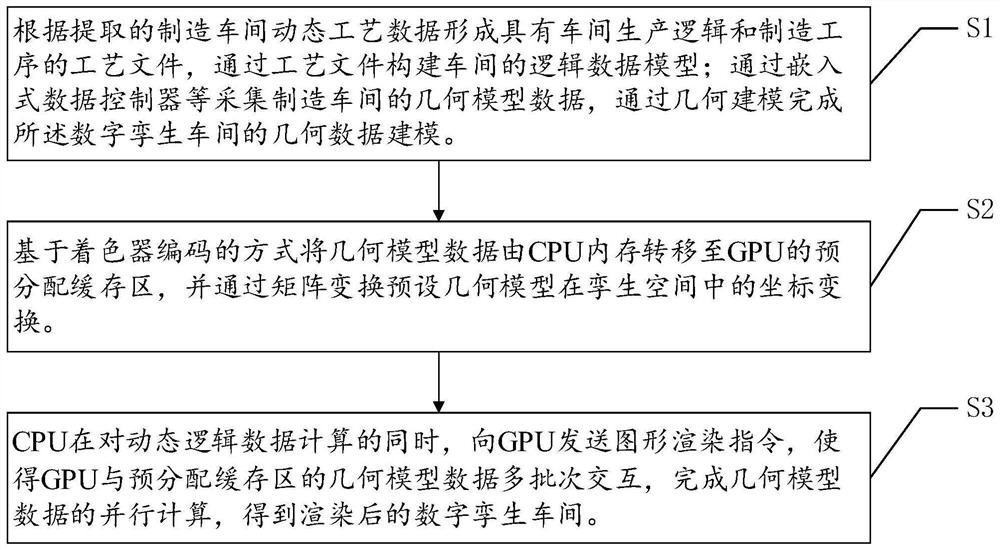

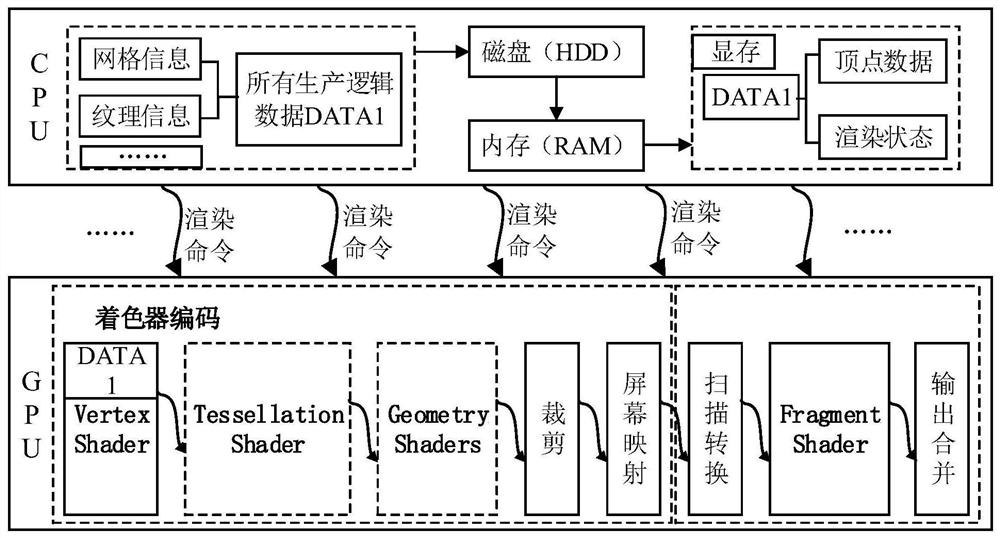

Quick architecture method and device for digital twin workshop system

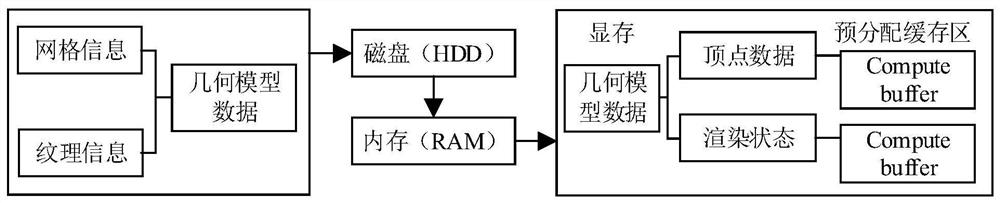

Active | CN112506476A | Save computing resources | Implement parallel computing | Resource allocation | Image memory management | Concurrent computation | Parallel computing

The invention provides a quick architecture method for a digital twin workshop system, which comprises the following steps: forming a process file with workshop production logic and manufacturing procedures according to extracted dynamic process data of a manufacturing workshop, and constructing a logic data model of the workshop according to the process file; collecting geometric model data of a manufacturing workshop through an embedded data controller and the like, and finishing geometric data modeling of the digital twin workshop through a geometric modeling method; transferring the geometric model data from a CPU memory to a pre-allocation cache region of a GPU based on a shader coding mode, and presetting coordinate transformation of a geometric model in a twin space through matrix transformation; and enabling the CPU to send a graphic rendering instruction to the GPU while calculating the dynamic logic data, so that the GPU interacts with the geometric model data of the pre-distributed cache region in multiple batches. According to the invention, the dynamic logic data and the geometric model data of the twin system are subjected to parallel computing, so that the problem of delay mapping of the digital twin workshop system due to large data volume can be solved.

Owner:ZHEJIANG CHINT ELECTRIC CO LTD +1

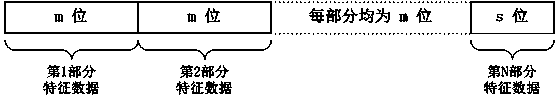

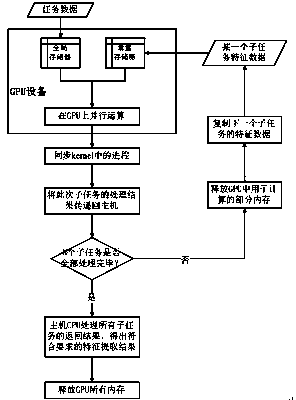

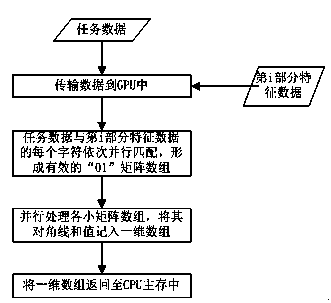

Adaptive parallel processing method aiming at variable length characteristic extraction for big data

Inactive | CN103543989A | Implement parallel computing | Resource allocation | Concurrent instruction execution | Matrix Array | Parallel processing

The invention discloses an adaptive parallel processing method aiming at variable length characteristic extraction for big data. The method aims at variable-length characteristic data, and big data are processed through a graphic processing unit (GPU) parallel computing power based on compute unified device architecture (CUDA). During processing of the big data, an adaptive parallel matrix array processing mode is used for performing multithreading concurrent execution processing on the data according to own hardware characteristics and lengths of the characteristic data, so that the characteristic extraction speed is accelerated. According to the method, the data are processed in batches according to the hardware own processing capacity and the lengths of the characteristic data, and characteristics of certain length are extracted every time, and matching results are recorded; after the whole characteristics are extracted, all matching results are processed according to the allowed error-tolerant rate of data sampling to obtain requested characteristic extraction results. Good parallelism of matrix arrays is used, and the method aims at extracting variable-length characteristics, so that data can be parallelized effectively and sufficiently, and the method is particularly applicable to big-data rapid characteristic extraction with certain fault tolerance.

Owner:ZHENJIANG ZHONGAN COMM TECH

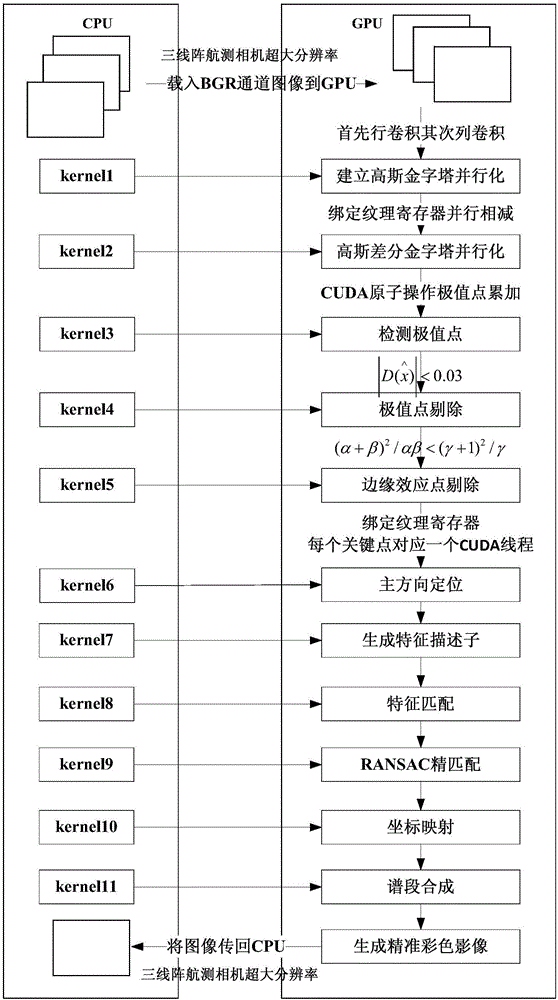

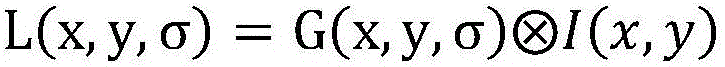

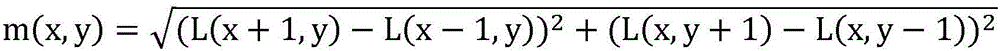

Three-line-array stereo aerial survey camera parallel spectrum band registration method based on GPU technology

Inactive | CN105894494A | Generate accurately | Implement parallel computing | Image enhancement | Image analysis | Gaussian pyramid | Image resolution

The invention provides a three-line-array stereo aerial survey camera parallel spectrum band registration method based on GPU technology. The method comprises the following steps: 1) carrying out convolution on an original image I(x,y) at the GPU end, establishing the Gaussian pyramid L(x, y, sigma) in parallel and establishing the Gaussian differential pyramid D(x, y, sigma) in parallel; 2) carrying out line convolution on gray images at the GPU end, and then carrying out row convolution, reducing algorithm complexity from O(N²) to O(N); 3) under the condition of storage space continuity, carrying out extreme point number accumulation storage while ensuring that only one thread operates on the accumulation address at a time; 4) binding texture registers and setting one CUDA thread for each key point; 5) generating a multidimensional descriptor, and utilizing one thread block to process the multi-dimensional vector of one feature point; and 6) carrying out KD-tree construction at the CPU. In actual tests, the processing resolution can reach or exceed 16378*8192; the method based on GPU technology overcomes the speed bottleneck of real-time image registration on super-resolution images; the registration time is at the millisecond level; and the method can be applied to an aerial survey camera image processing system easily.

Owner:XI'AN INST OF OPTICS & FINE MECHANICS - CHINESE ACAD OF SCI
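Step 2 of the abstract above relies on a separable filter: a 2D smoothing kernel that factors into two 1D kernels can be applied as two 1D passes, one along each image axis, so the per-pixel cost for a kernel of width N drops from N² multiplications to 2N. The sketch below checks this equivalence on the CPU in plain Python. It is an illustration under assumptions (symmetric kernel, zero padding, hypothetical function names), not the patent's GPU implementation.

```python
def convolve_1d(signal, kernel):
    """Same-size 1D filtering with zero padding.

    Assumes an odd-length symmetric kernel, so correlation and
    convolution give the same result.
    """
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + k - radius
            if 0 <= j < n:
                acc += weight * signal[j]
        out.append(acc)
    return out


def separable_filter(image, kernel):
    """Two 1D passes: along each row, then along each column."""
    row_pass = [convolve_1d(row, kernel) for row in image]
    col_pass = [convolve_1d(list(col), kernel) for col in zip(*row_pass)]
    return [list(row) for row in zip(*col_pass)]  # transpose back


def full_2d_filter(image, kernel):
    """Reference: direct 2D filtering with the outer-product kernel."""
    radius = len(kernel) // 2
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for ky, wy in enumerate(kernel):
                for kx, wx in enumerate(kernel):
                    yy, xx = y + ky - radius, x + kx - radius
                    if 0 <= yy < height and 0 <= xx < width:
                        acc += wy * wx * image[yy][xx]
            out[y][x] = acc
    return out


if __name__ == "__main__":
    img = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0], [9.0, 10.0, 11.0, 12.0]]
    smooth = [0.25, 0.5, 0.25]  # small binomial approximation of a Gaussian
    print(separable_filter(img, smooth))
```

Both functions produce the same image up to floating-point rounding; only the amount of work per pixel differs.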

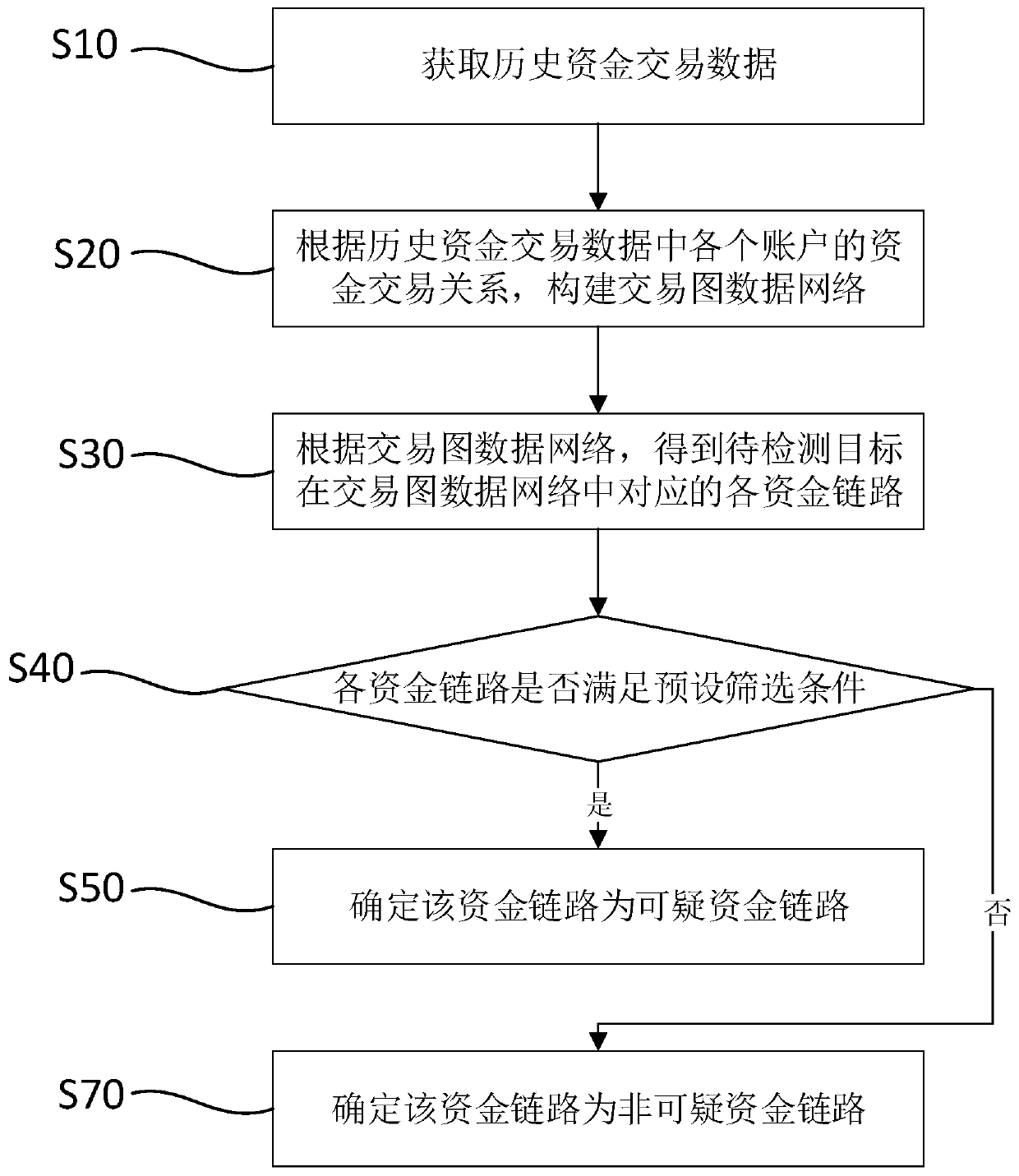

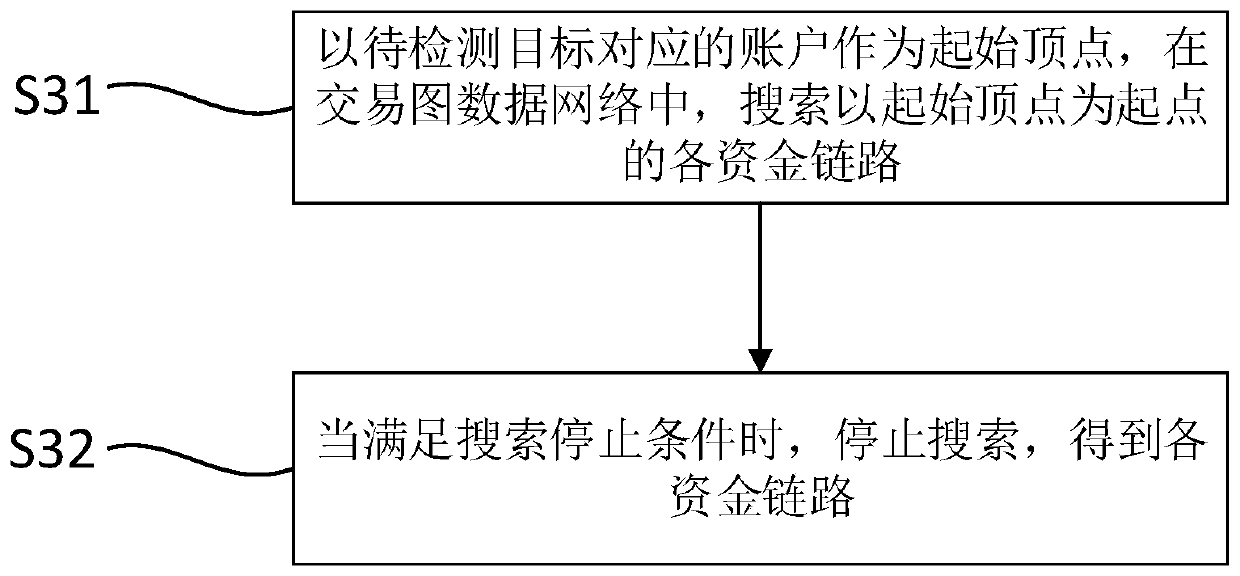

Suspicious fund link detection method and device

Pending | CN111127024A | Accurate and Efficient Tracking | Improve tracking efficiency | Finance | Protocol authorisation | Third party | Payment

The invention relates to the technical field of the Internet, in particular to a suspicious fund link detection method and a device. The suspicious fund link detection method is applied to a third-party payment platform and comprises the following steps: acquiring historical fund transaction data; constructing a transaction graph data network according to the fund transaction relationship of each account in the historical fund transaction data; according to the transaction graph data network, obtaining corresponding fund links of a to-be-detected target in the transaction graph data network; judging whether each fund link meets a preset screening condition; and when the fund link meets a preset screening condition, determining that the fund link is a suspicious fund link. According to the suspicious fund link detection method provided by the invention, the suspicious fund can be automatically tracked and screened, the fund link does not need to be manually tracked, the human input is greatly saved, and the fund tracking efficiency is improved.

Owner:ALIPAY (HANGZHOU) INFORMATION TECH CO LTD
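The steps in the abstract above (build a transaction graph, collect the fund links of a target, apply a screening condition) can be sketched as follows. The abstract does not state the screening condition, so the one used here (a minimum number of hops and a minimum amount on every hop) is purely hypothetical, as are the function names and the sample data.

```python
def build_graph(transactions):
    """transactions: iterable of (payer, payee, amount) tuples."""
    graph = {}
    for payer, payee, amount in transactions:
        graph.setdefault(payer, []).append((payee, amount))
        graph.setdefault(payee, [])
    return graph


def fund_links(graph, start, max_hops):
    """Enumerate simple paths (no repeated account) leaving `start`."""
    links = []

    def walk(node, path, amounts):
        if amounts:
            links.append((path, amounts))
        if len(amounts) == max_hops:
            return
        for nxt, amount in graph[node]:
            if nxt not in path:
                walk(nxt, path + [nxt], amounts + [amount])

    walk(start, [start], [])
    return links


def suspicious_links(links, min_hops, min_amount):
    """Hypothetical screening rule: long chains that keep moving large sums."""
    return [path for path, amounts in links
            if len(amounts) >= min_hops and min(amounts) >= min_amount]


if __name__ == "__main__":
    # Made-up sample transactions for illustration.
    g = build_graph([("a", "b", 1000), ("b", "c", 950), ("a", "d", 10)])
    print(suspicious_links(fund_links(g, "a", max_hops=3), min_hops=2, min_amount=900))
```

Each target account can be traced independently of the others, which is one place where such a search could be run in parallel.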

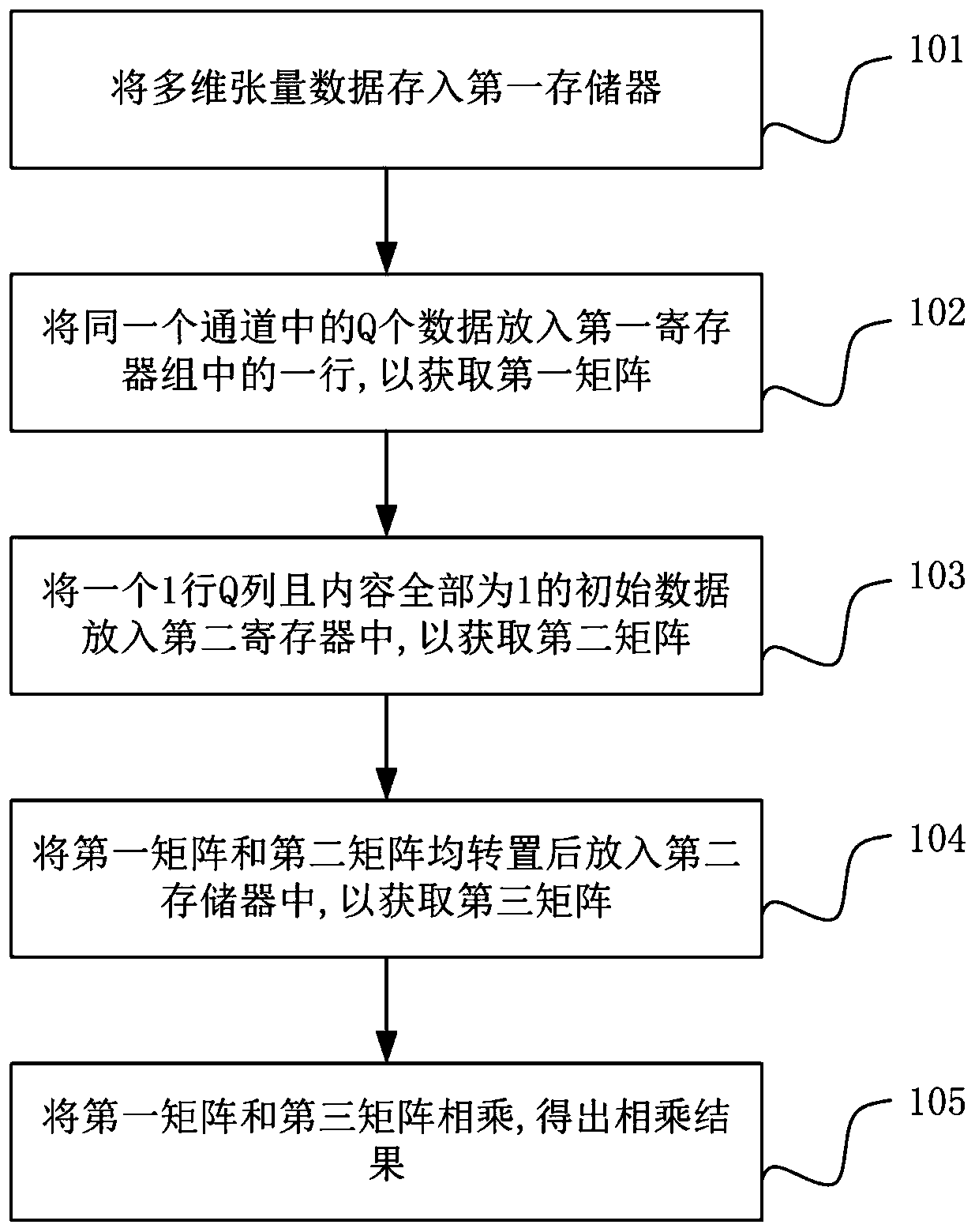

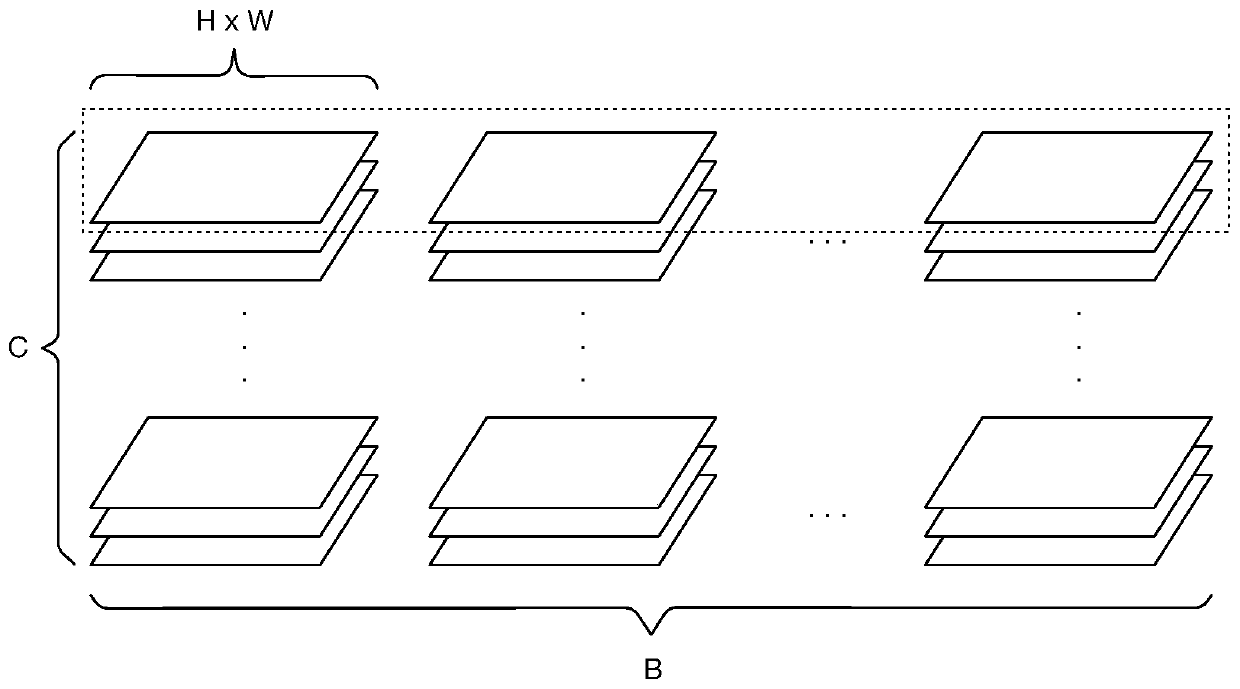

Data processing method and device, chip and computer readable storage medium

Pending | CN111428879A | Fast operation | Shorten the time | Machine learning | Neural architectures | Matrix multiplication | Data processing

The embodiment of the invention discloses a data processing method and device, a chip and a computer readable storage medium, which are used for accelerating the operation of batch normalization layers in deep learning model training. Multi-dimensional tensor data is stored in a first memory according to a preset rule, then taken out in the form of two-dimensional data for operation; a third matrix is constructed through cooperative use of a plurality of register sets and a second memory; matrix multiplication is performed on the first matrix and the third matrix, so that the element sum and the element quadratic sum of each row in the first matrix can be solved at the same time; parallel calculation of element summation and element quadratic sum calculation is realized, so that calculation related to mean values and variances in a batch normalization layer is accelerated, and the problem of long operation time caused by excessive data volume in the operation of the batch normalization layer is solved. Finally, the operation speed of batch normalization is improved, and the time required for training the whole deep learning model is greatly shortened.

Owner:中昊芯英(杭州)科技有限公司
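The abstract above does not say how the third matrix is built. One way to obtain the sum and the sum of squares of every row from a single matrix multiplication is to append the squared elements to each row and multiply by a 0/1 selector matrix; the mean and variance then follow from E[x] and E[x²] - E[x]². The sketch below shows that idea only. The augmented layout, the selector matrix and the function names are assumptions, not the patent's construction.

```python
def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def row_sum_and_sumsq(x):
    """Return [[sum, sum of squares], ...] for each row using one matmul."""
    m = len(x[0])
    # Assumed layout: each row becomes [x_1..x_m, x_1^2..x_m^2] (n x 2m).
    augmented = [row + [v * v for v in row] for row in x]
    # Selector (2m x 2): column 0 adds the raw values, column 1 the squares.
    selector = [[1.0, 0.0]] * m + [[0.0, 1.0]] * m
    return matmul(augmented, selector)


def batch_stats(x):
    """Mean and (biased) variance per row, as used by batch normalization."""
    m = len(x[0])
    stats = []
    for total, total_sq in row_sum_and_sumsq(x):
        mean = total / m
        stats.append((mean, total_sq / m - mean * mean))
    return stats


if __name__ == "__main__":
    print(batch_stats([[1.0, 2.0, 3.0], [4.0, 4.0, 4.0]]))
```

The point of the formulation is that both reductions ride on the same matrix-multiply hardware instead of being computed in two separate passes.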

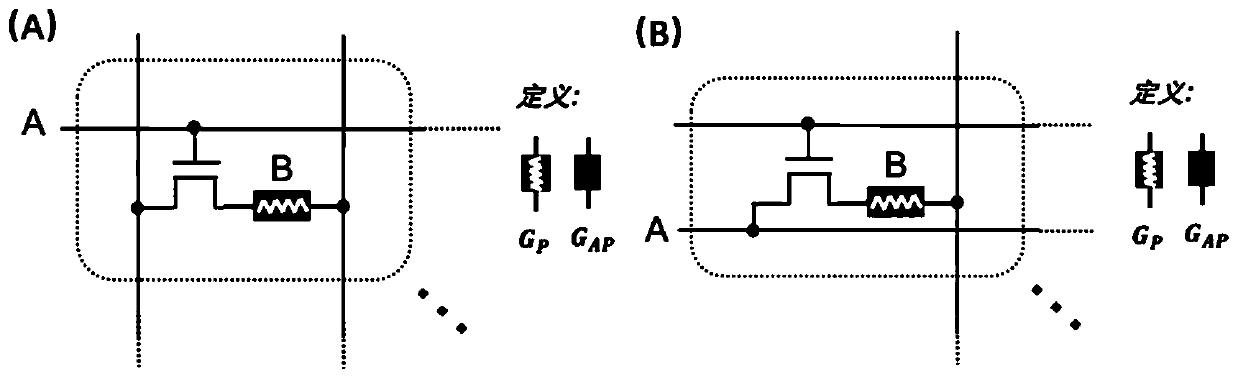

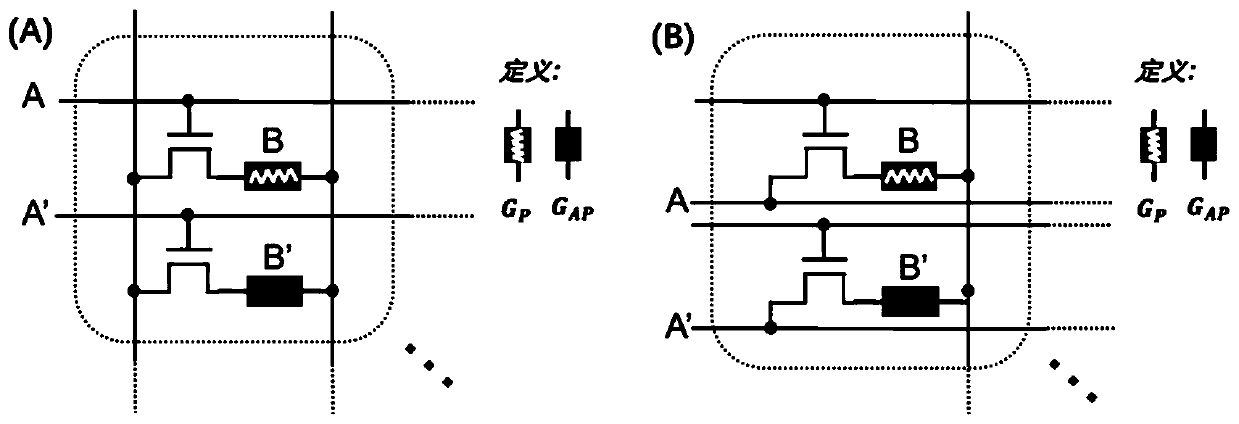

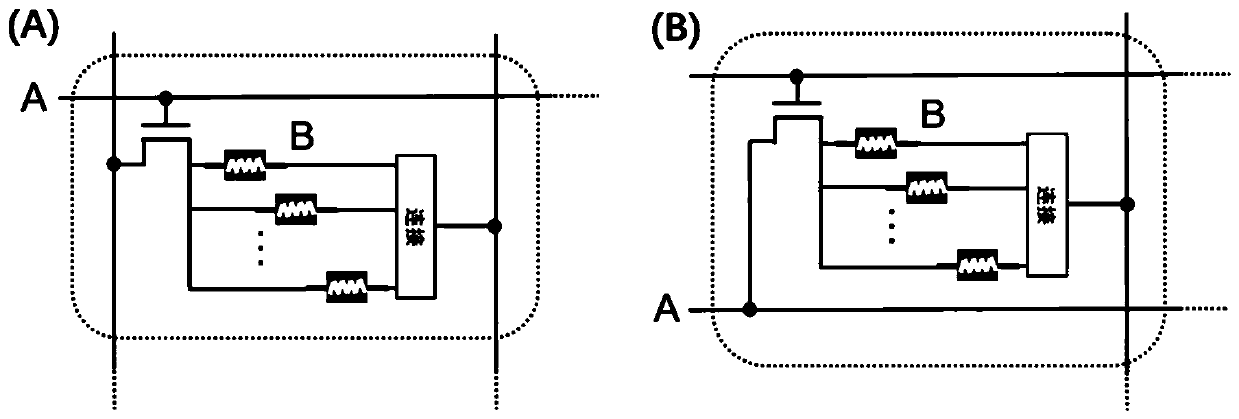

Computing system of resistive memory

Active | CN110390074A | Achieving Massively Parallel Computing | Implement parallel computing | Digital storage | Complex mathematical operations | Computing systems | Distributed computing

The invention discloses a computing system of a resistive memory. In neural networks and scientific computation, a large number of matrix operations need to be carried out, so that a large amount of data needs to be transported and a large number of operations need to be carried out on the data, which consume the limited data bandwidth (bus resources) and computing resources in the computing system. The memory is reconstructed into a calculation module, so that the calculation capability and the data bandwidth are greatly improved. The AND-XOR operation and the analog multiplication operation are realized by utilizing the gate control capability of the transistor and the current modulation capability of the resistance value of the memory. The reconstruction can greatly reduce the calculation cost, thereby enhancing the calculation capability for naturally parallel tasks such as neural networks. Because AND and XOR together form a complete set of Boolean operations, complete Boolean logic can be achieved.

Owner:ZHEJIANG UNIV
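The closing claim of the abstract above, that AND and XOR suffice for complete Boolean logic, can be checked directly. Given a constant 1 input, NOT is `a XOR 1` and OR is `(a XOR b) XOR (a AND b)`. The sketch below derives these gates on single bits and builds a half adder; it models the logic only, not the resistive-memory circuit.

```python
def AND(a, b):
    return a & b


def XOR(a, b):
    return a ^ b


def NOT(a):
    # Requires a constant 1 input alongside the AND/XOR primitives.
    return XOR(a, 1)


def OR(a, b):
    # a OR b = (a XOR b) XOR (a AND b)
    return XOR(XOR(a, b), AND(a, b))


def NAND(a, b):
    return NOT(AND(a, b))


def half_adder(a, b):
    """Return (sum bit, carry bit) using only the two primitives."""
    return XOR(a, b), AND(a, b)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "OR:", OR(a, b), "NAND:", NAND(a, b), "half adder:", half_adder(a, b))
```

Since NAND alone is functionally complete, being able to build NAND from AND, XOR and the constant 1 confirms the claim.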

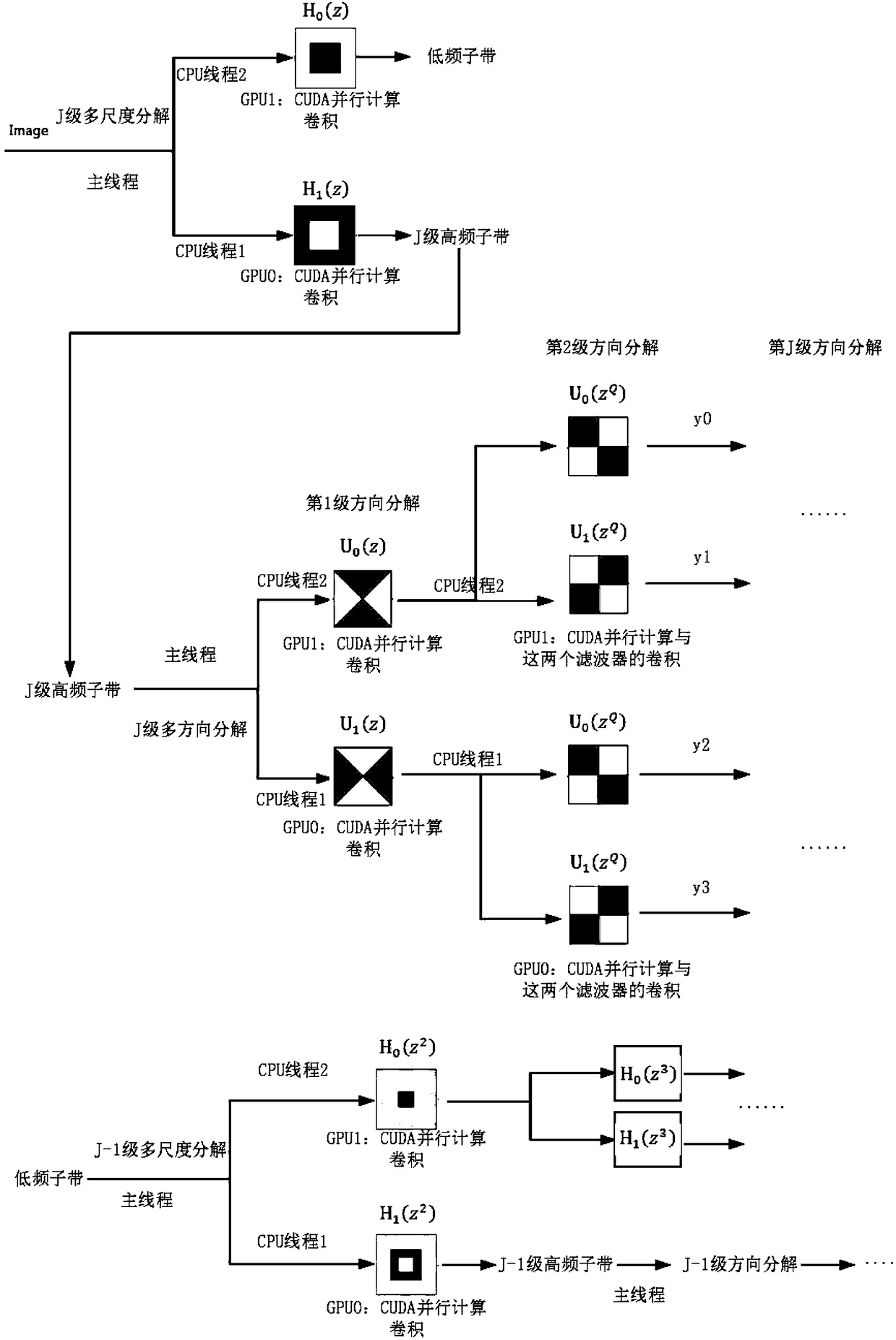

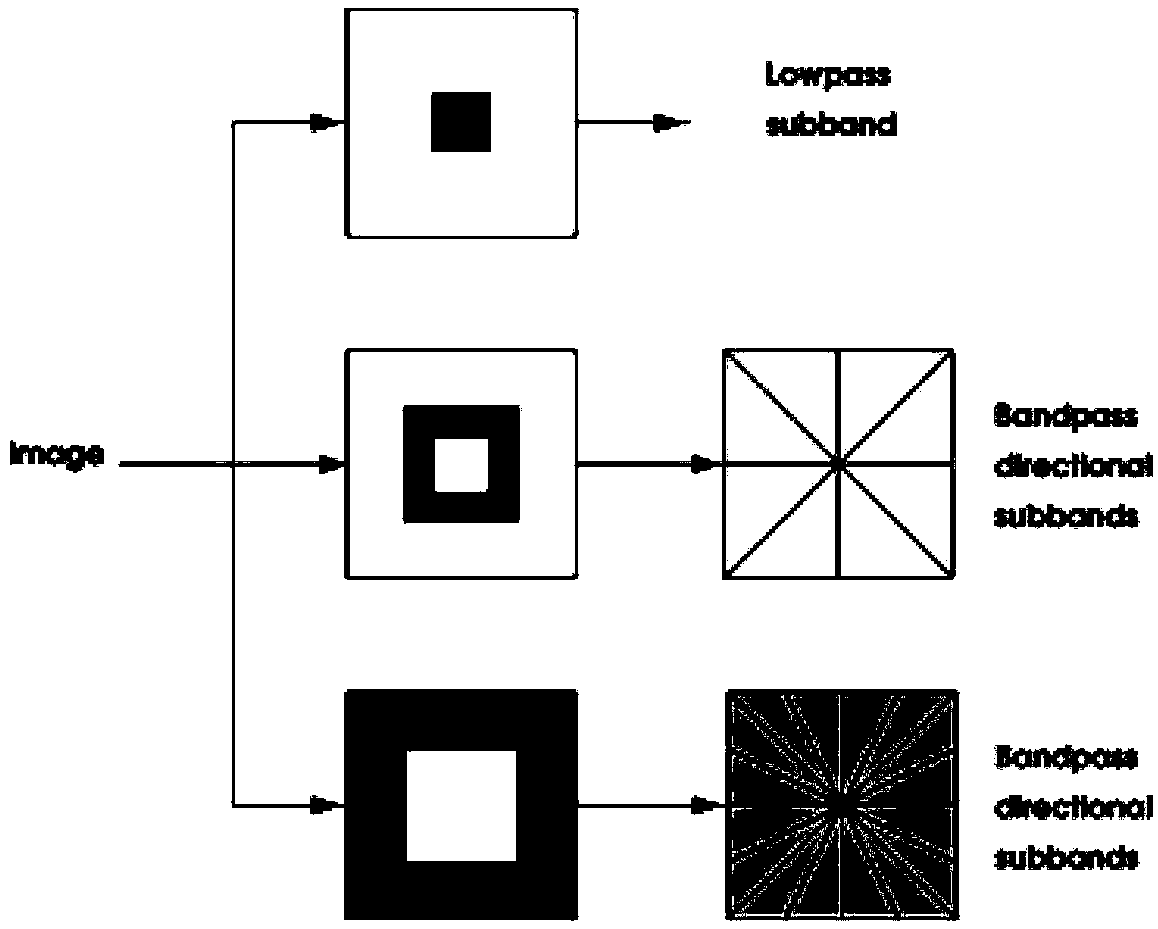

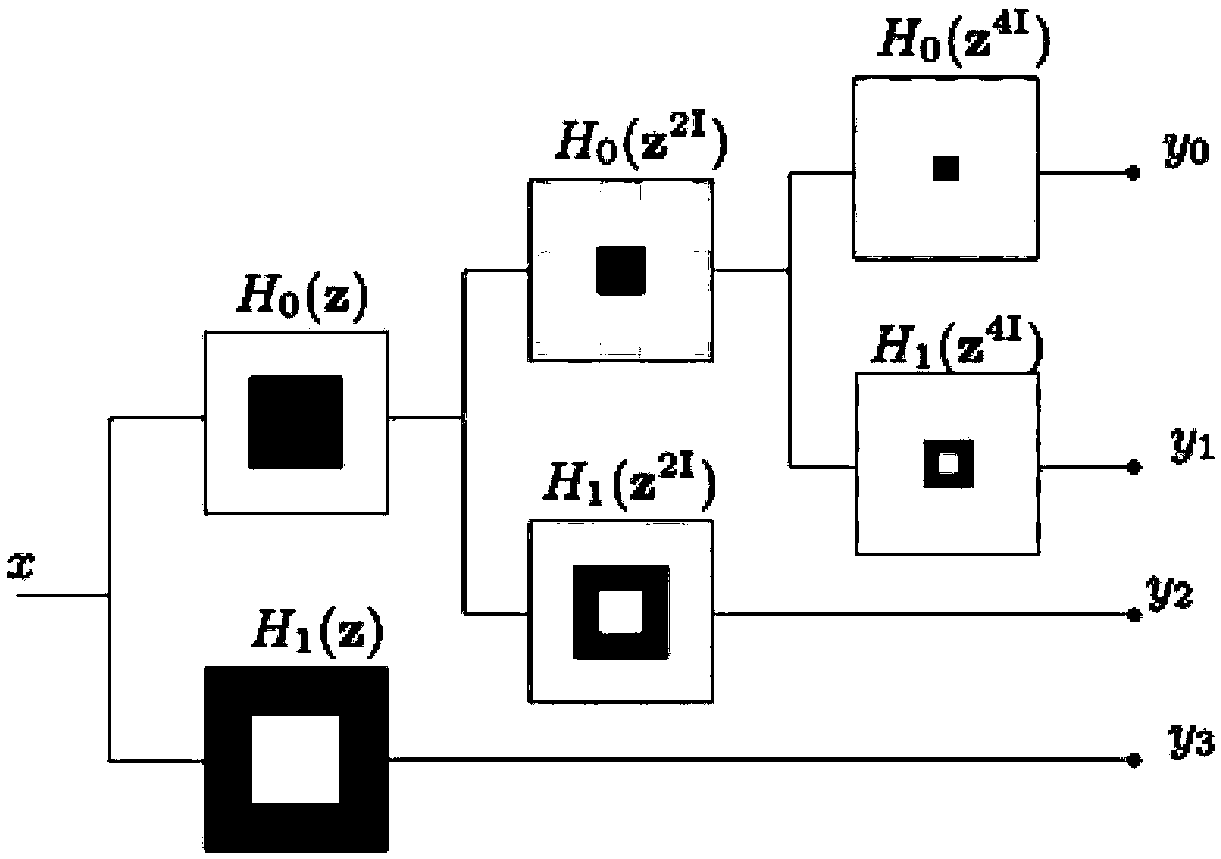

Non-subsampled contour wave transform optimization method based on parallel computing

Active | CN108897616A | Take full advantage of parallel computing | Reduced execution time | Transformation of program code | Resource allocation | Multiscale decomposition | Decomposition

The invention discloses a non-subsampled contour wave transform optimization method based on parallel computing. The method comprises the following steps: (1) computing the number of the enabled GPUs and the number of the started CPU threads required for executing multi-scale decomposition and different-level direction decomposition in the NSCT algorithm according to the configuration situation of the GPU and the CPU and the actual computation amount allocated to each GPU; (2) performing parallelism analysis on the NSCT decomposition and reconstruction process, from which it is found that the image data can be moved to the GPU and that the convolution computation and the storing back of computation results can be processed in parallel; and (3) using OpenMP and CUDA to perform NSCT decomposition and reconstruction in parallel. According to the method, the NSCT computation speed can be remarkably improved, the running time can be reduced and the practicability of the NSCT algorithm can be improved through the processes of parallel execution of data movement, pixel-level parallel computation convolution and the like.

Owner:SICHUAN UNIV

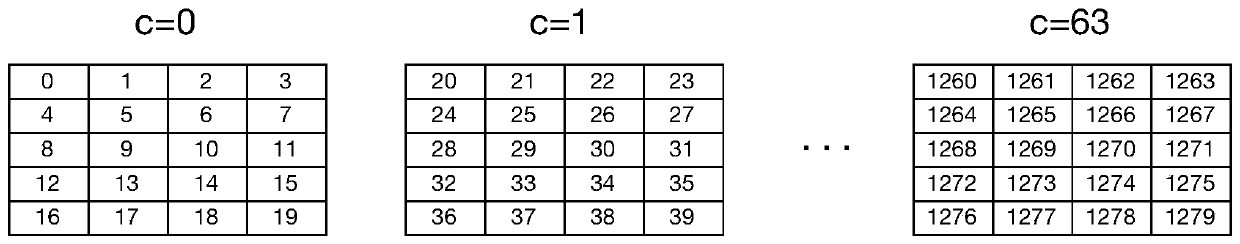

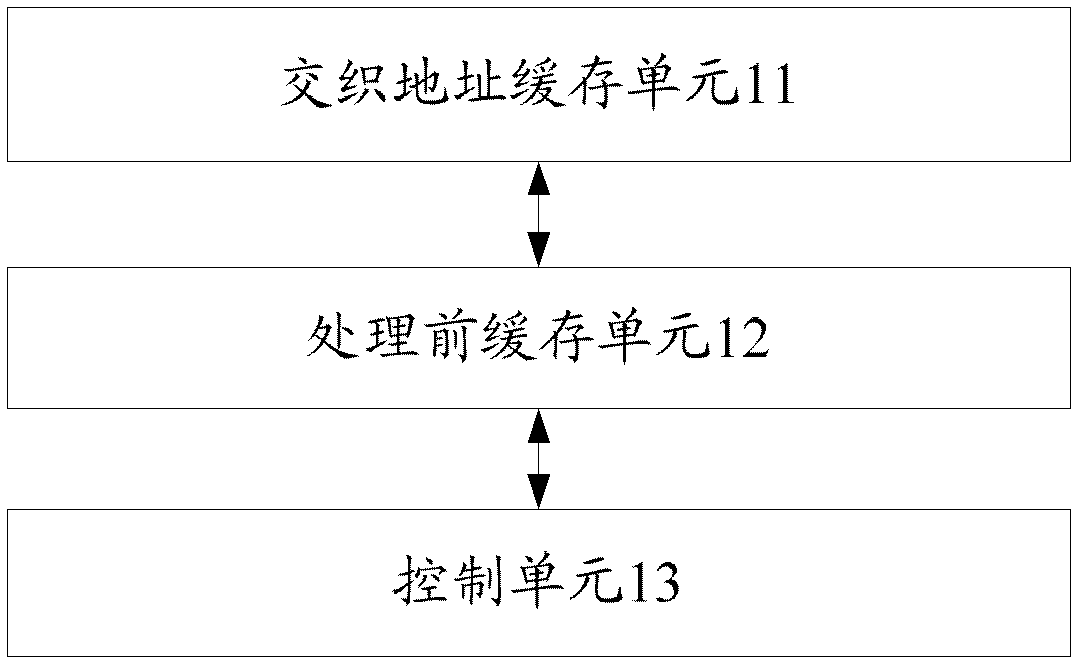

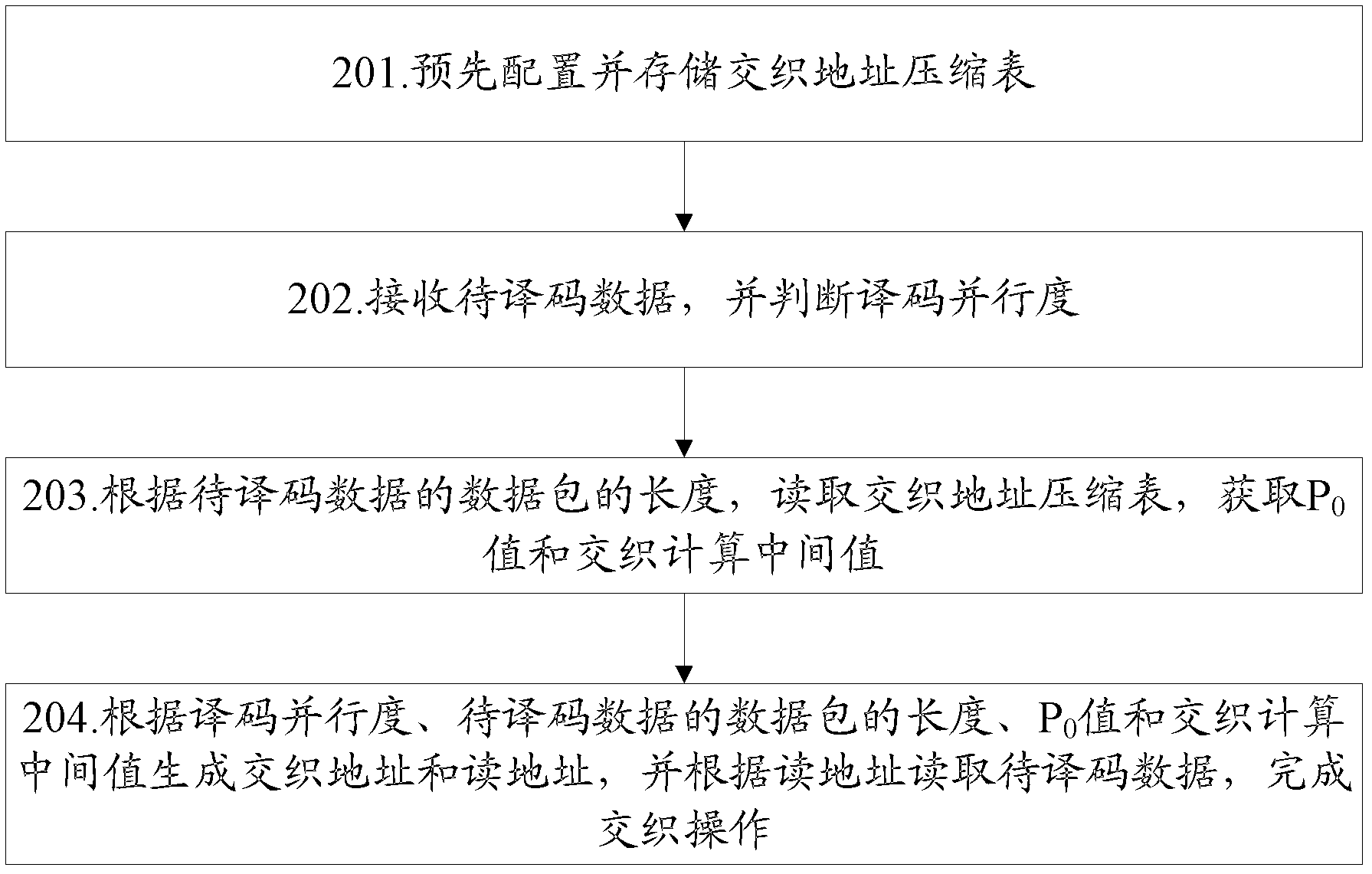

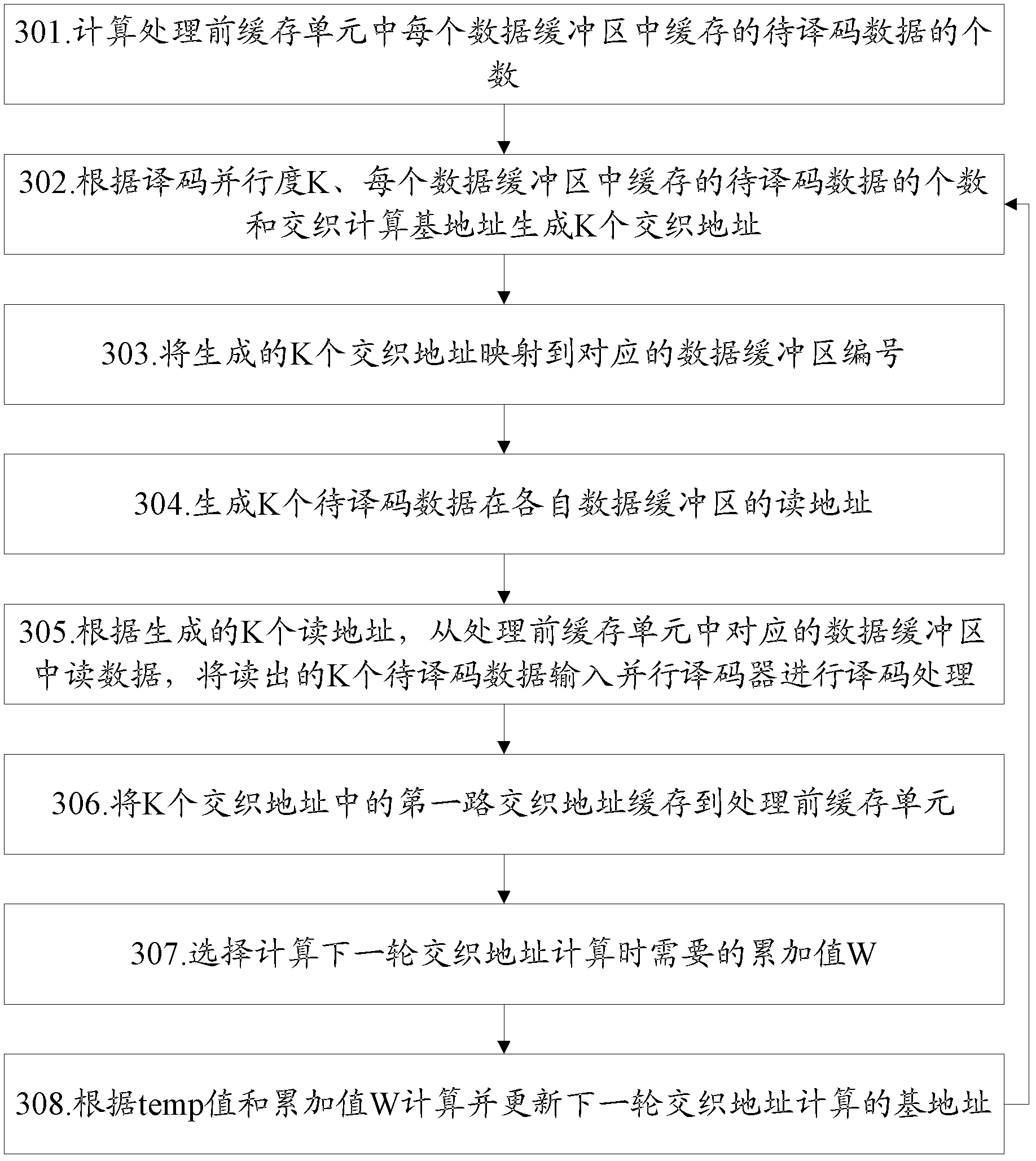

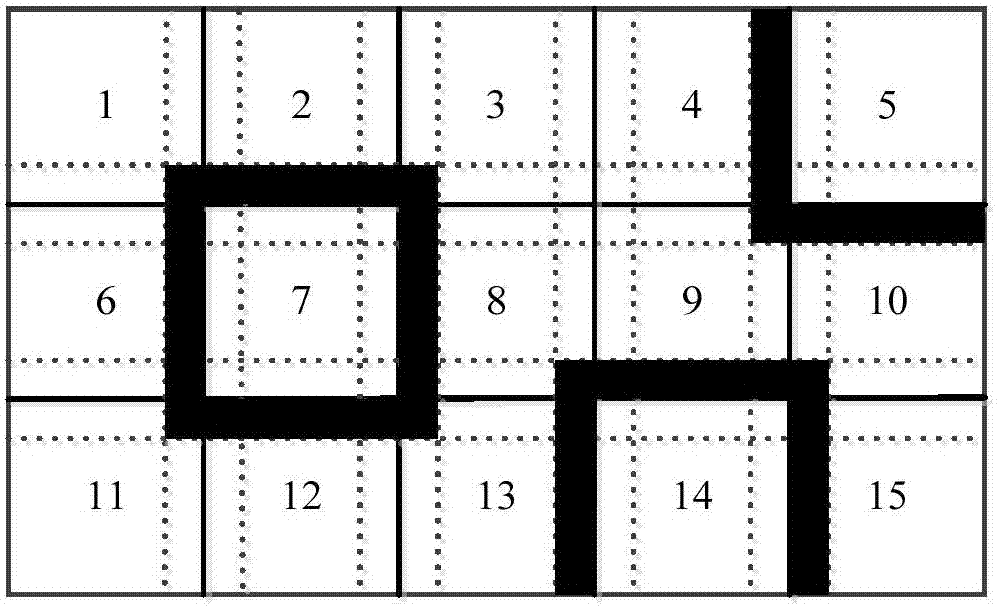

Method and system for parallel computing of interweaved address

Active | CN102324999A | Implement parallel computing | Low cost | Error prevention | Code conversion | Microwave | Network packet

The invention discloses a method and system for parallel computing of an interweaved address. The method comprises the steps of: pre-configuring and storing an interweaved address compress table; receiving data to be decoded and judging decoding parallelism; reading the interweaved address compress table according to the length of a packet of the data to be decoded, so as to acquire a value P0 and an interweaving computing intermediate value; and generating the interweaved address and a reading address according to the decoding parallelism, the length of the packet of the data to be decoded, the value P0 and the interweaving computing intermediate value, and reading the data to be decoded according to the reading address so as to complete interweaving operation. According to the technical scheme in the invention, the parallel computing of the interweaved address inside a decoder in a WiMAX (Worldwide Interoperability for Microwave Access) system can be realized.

Owner:南通东湖国际商务服务有限公司

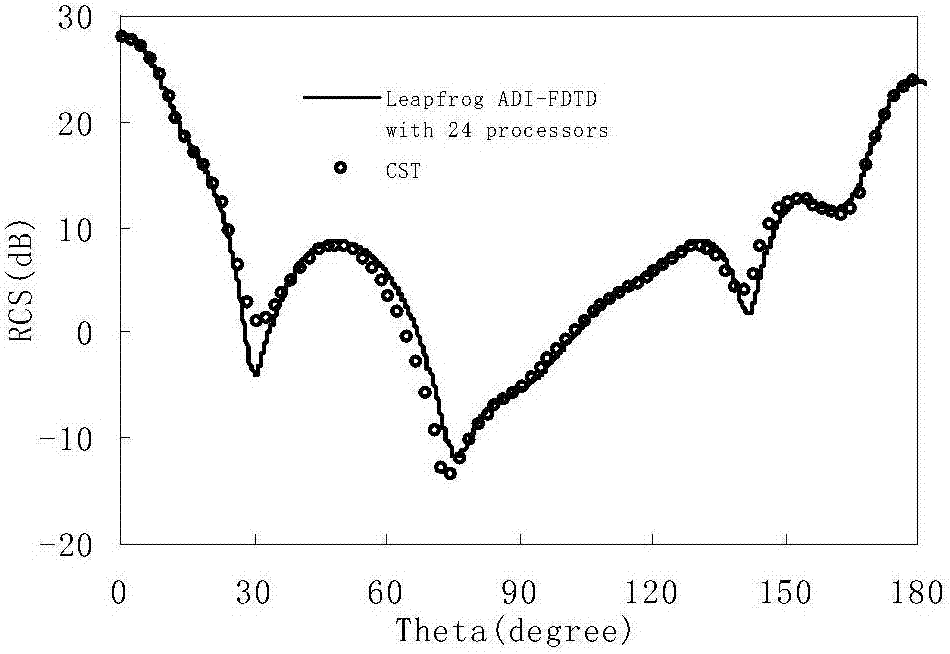

Domain decomposition parallel method effective for unconditional stable time-domain finite difference method

Active | CN107239586A | Achieving Massively Parallel Computing | Implement parallel computing | Interprogram communication | Design optimisation/simulation | Time domain | Decomposition

The present invention discloses a domain decomposition parallel method effective for an unconditionally stable time-domain finite difference method. In the present invention, the causal domain decomposition method is used to achieve a high degree of parallelization of the leapfrog alternating direction implicit scheme time-domain finite difference method, so that highly parallel calculation can be carried out while the unconditional stability is maintained. The method disclosed by the present invention can effectively reduce the simulation time of the time-domain finite difference method, is simple to program, and has strong practical engineering application value.

Owner:NANJING UNIV OF SCI & TECH

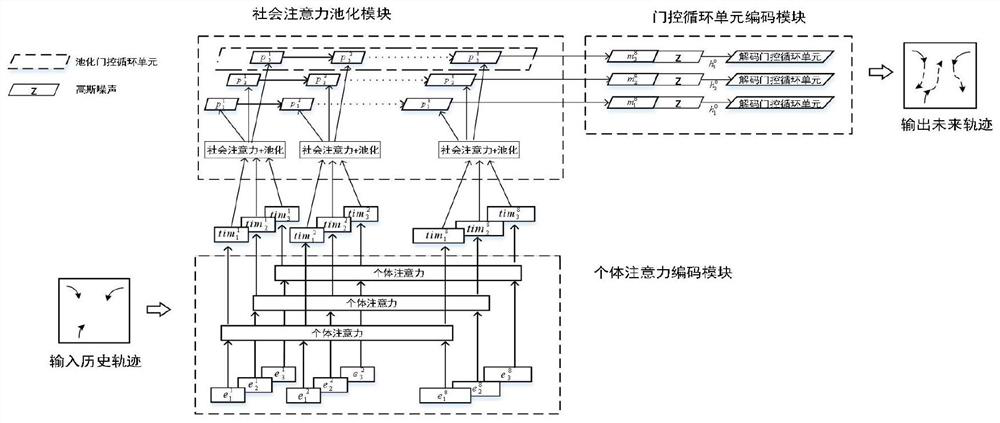

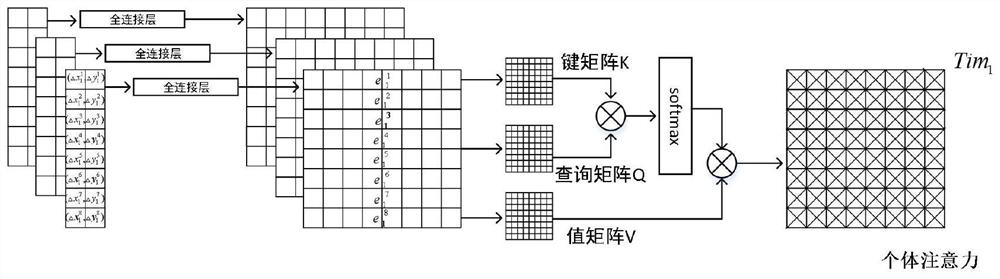

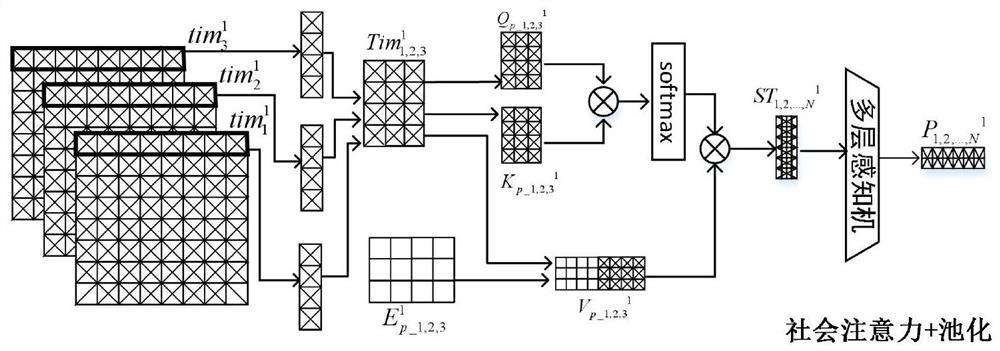

Attention mechanism-based pedestrian trajectory prediction method

Pending | CN113160269A | Implement parallel computing | Shorten forecast time | Image enhancement | Image analysis | Computer graphics (images) | Algorithm

The invention relates to an attention-based pedestrian trajectory prediction method, which is used for more accurately and quickly predicting a future trajectory of a pedestrian. The invention specifically comprises three modules: an individual attention coding module used for calculating the similarity of hidden vectors in a historical trajectory of a pedestrian and outputting an individual attention feature matrix so as to obtain the main influence factors of the pedestrian in a motion process; a social attention pooling module used for receiving the calculation result of the individual attention coding module, namely the individual attention feature matrix, calculating the similarity of hidden vectors in the historical trajectories of all pedestrians in a scene and outputting a comprehensive motion feature matrix so as to obtain the mutual influence relationship among the pedestrians in a motion process; and a gated recurrent unit (GRU) decoding module used for receiving the calculation result of the social attention pooling module, namely the comprehensive motion feature matrix, and calculating and outputting the future trajectory coordinates of pedestrians by utilizing a gated recurrent unit. The method effectively improves the prediction precision and speed.

Owner:BEIJING UNIV OF TECH

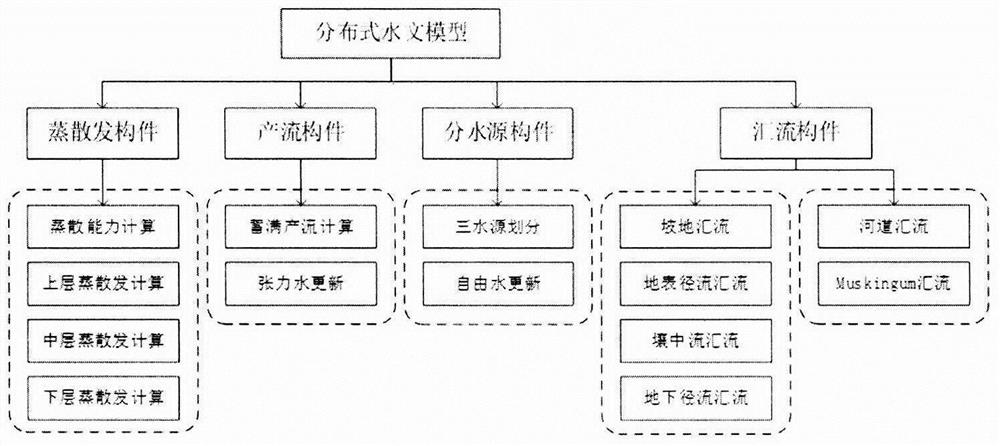

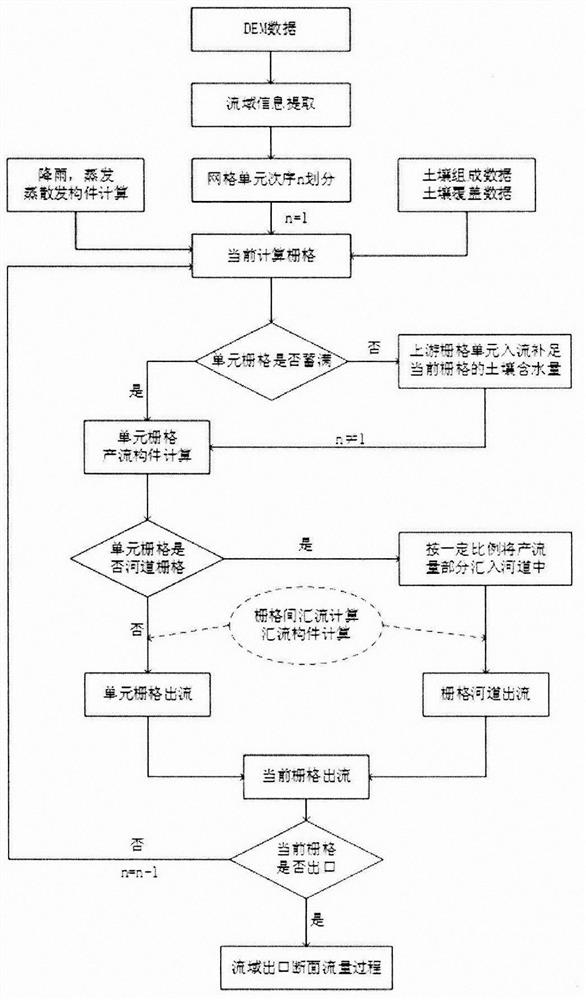

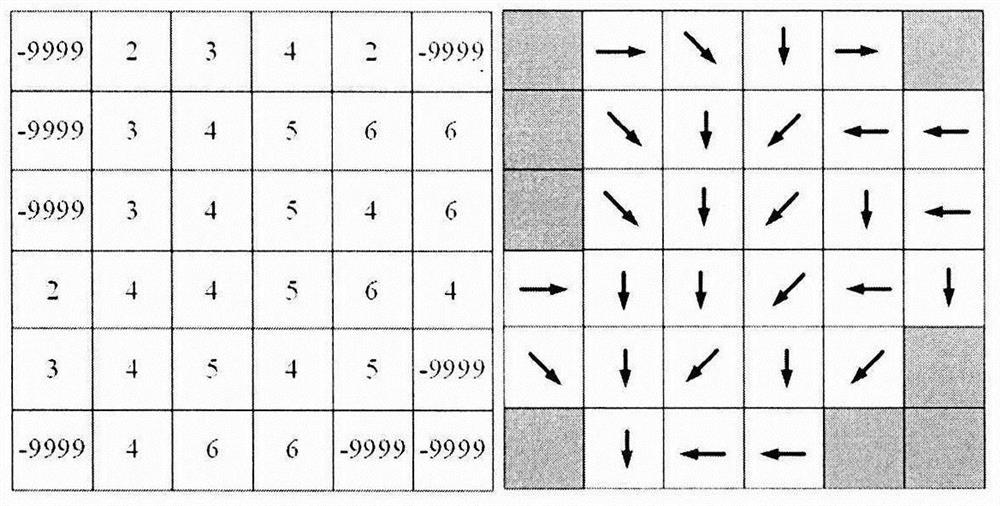

Distributed hydrological model parallel computing method based on flow direction

Pending | CN114462254A | Clearly describe the implementation | Implement parallel computing | Design optimisation/simulation | Special data processing applications | Hydrometry | Concurrent computation

The invention discloses a distributed hydrological model parallel computing method based on a flow direction. The distributed hydrological model parallel computing method comprises the following steps: providing a parameter description method based on a NetCDF (Network Common Data Format); standardized construction of the distributed hydrological model is realized; realizing watershed discretization and grid construction; according to the river relation and the confluence relation, a parallel calculation sequence is extracted, and distributed hydrological model parallel calculation is achieved; according to the method, the traditional serial calculation mode of the distributed hydrological model is changed, and the model calculation efficiency is improved based on the grid flow division (GFD).

Owner:HOHAI UNIV
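The step in the abstract above that extracts a parallel calculation sequence from the river and confluence relations can be read as layering a flow-direction graph: a cell can be computed once all of its upstream cells are done, and cells in the same layer are independent of each other. The sketch below uses a standard topological layering for this. The dictionary representation and the function name are assumptions, and this is not claimed to be the patent's GFD procedure.

```python
def parallel_layers(downstream):
    """Group cells into layers that can each be computed in parallel.

    downstream maps every cell to the cell it drains into, or to None
    for an outlet. Every target must itself be a key of the mapping.
    """
    pending_upstream = {cell: 0 for cell in downstream}
    for target in downstream.values():
        if target is not None:
            pending_upstream[target] += 1

    # Headwater cells have no upstream neighbours and form the first layer.
    layer = sorted(c for c, n in pending_upstream.items() if n == 0)
    layers = []
    while layer:
        layers.append(layer)
        next_layer = []
        for cell in layer:
            target = downstream[cell]
            if target is not None:
                pending_upstream[target] -= 1
                if pending_upstream[target] == 0:
                    next_layer.append(target)
        layer = sorted(next_layer)
    return layers


if __name__ == "__main__":
    # Hypothetical five-cell basin: a and b join at c; c and d join at outlet e.
    basin = {"a": "c", "b": "c", "c": "e", "d": "e", "e": None}
    print(parallel_layers(basin))
```

Within a layer the cells can be handed to separate workers; layers themselves must run in order from headwaters to outlet.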

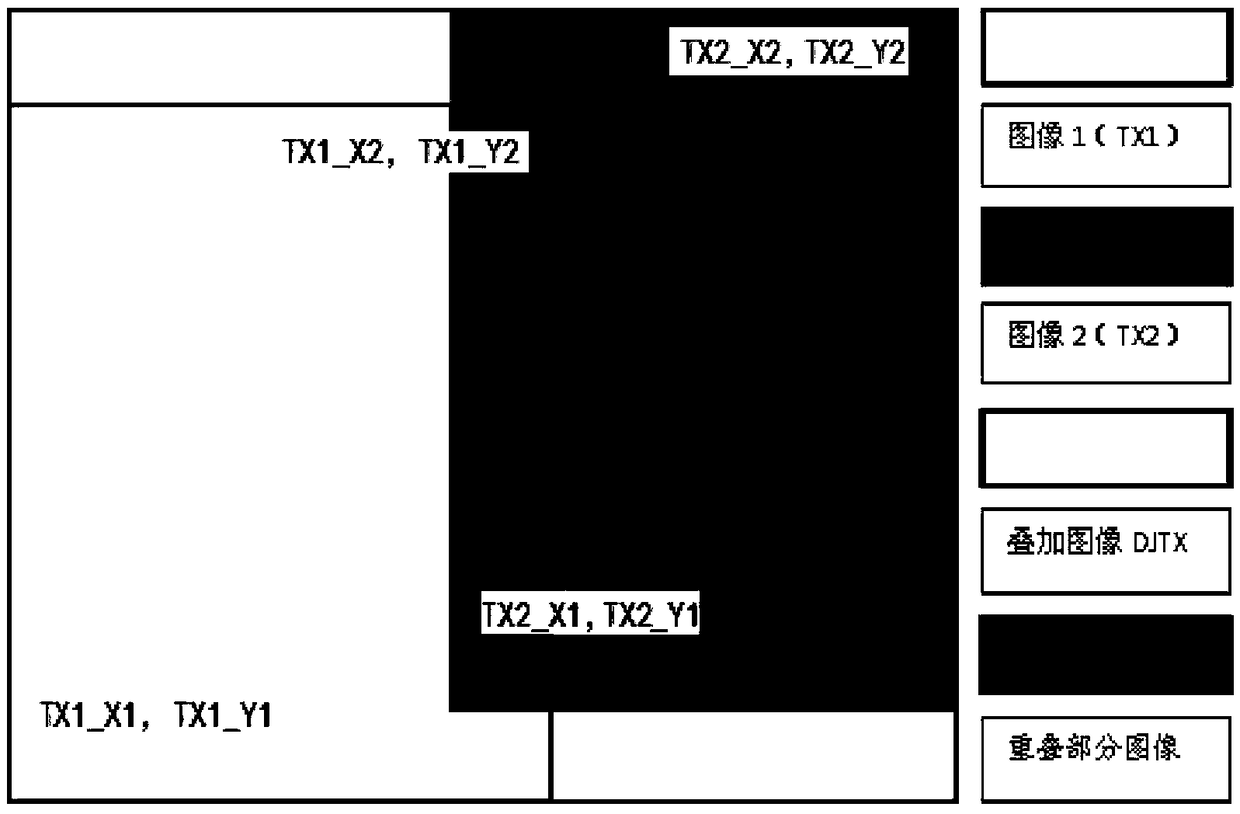

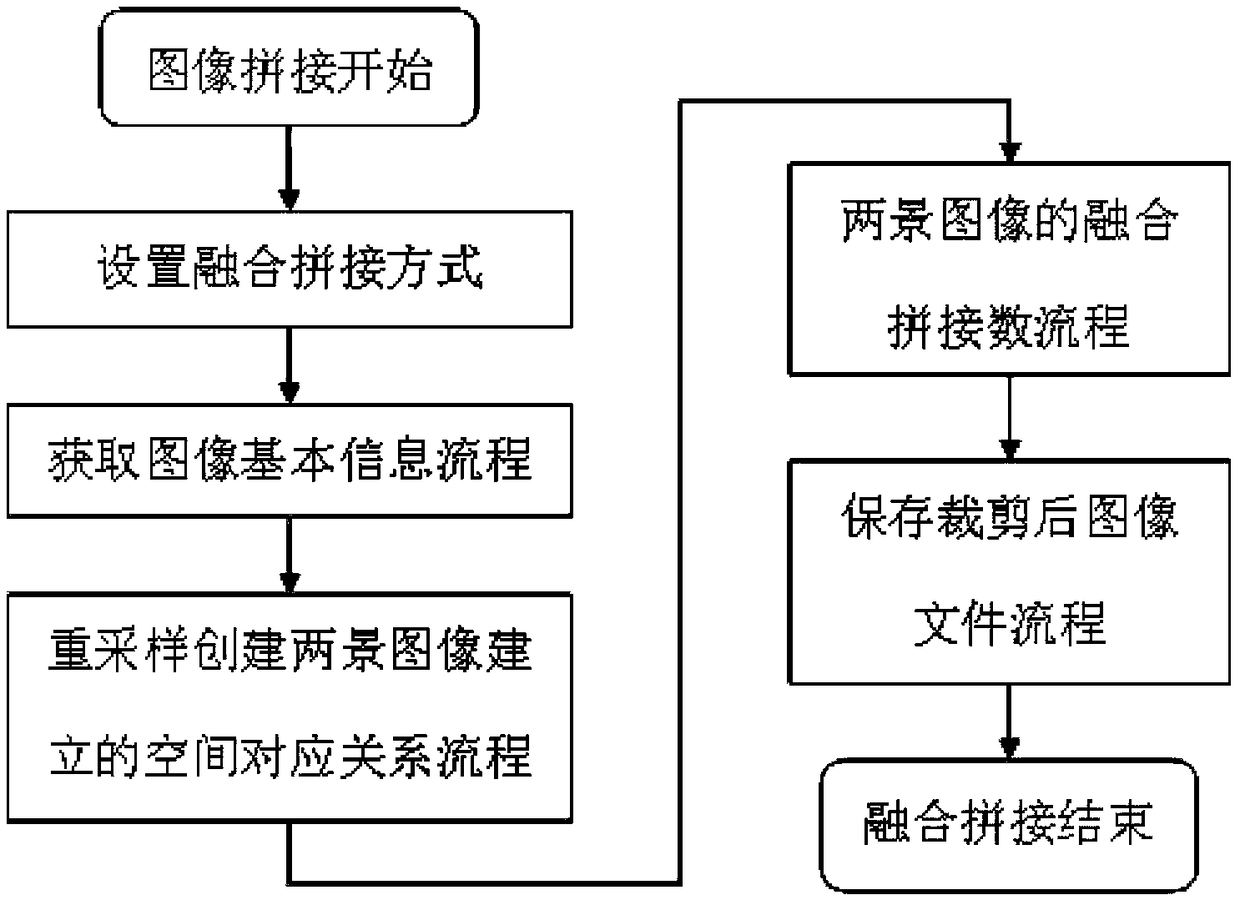

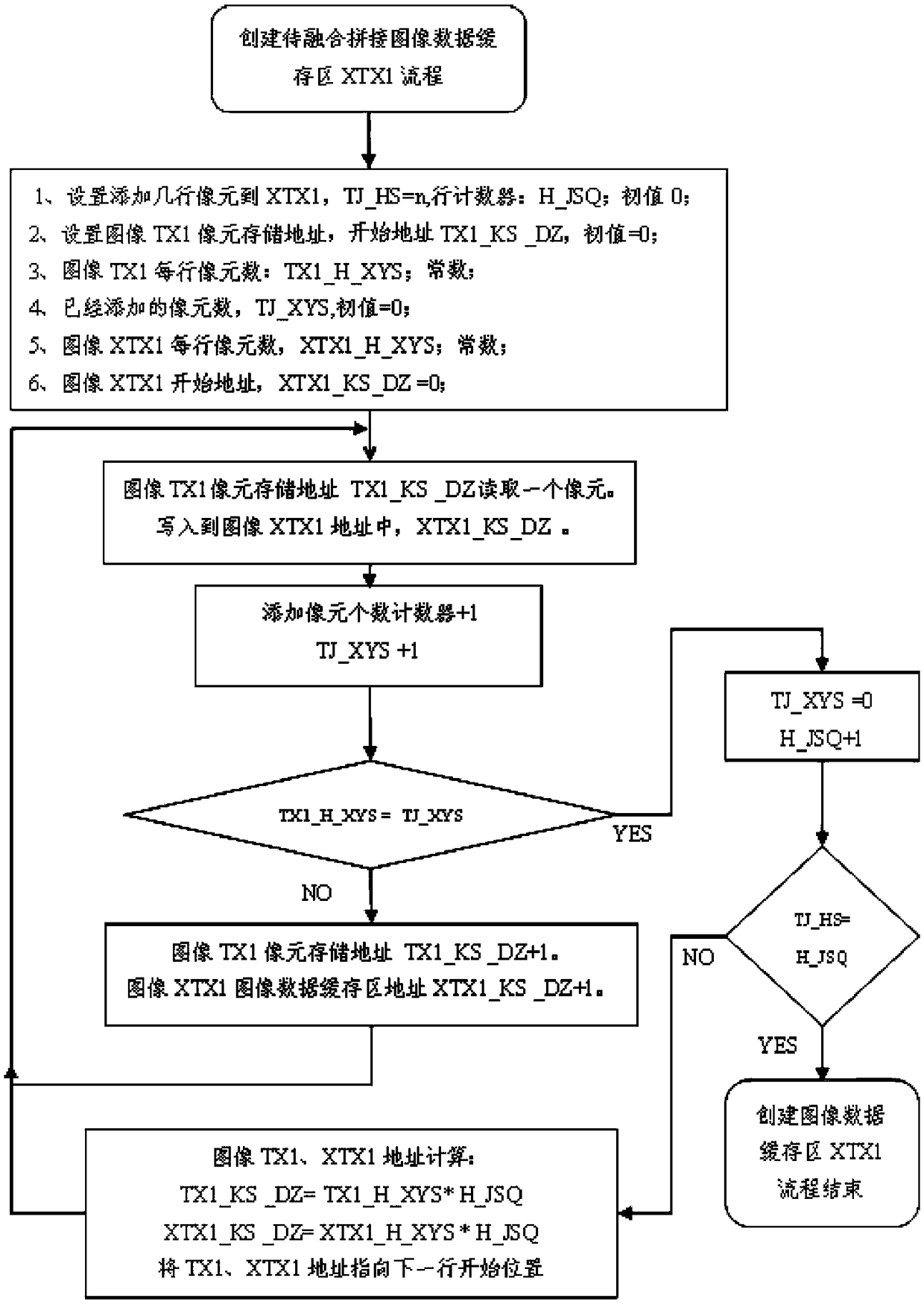

An image fusion and mosaic method based on parallel computing algorithm

Active | CN109461121A | A wide viewing angle | Rich relevant information | Image enhancement | Image analysis | Imaging processing | Fusion splicing

The invention relates to an image fusion mosaic method based on a parallel computing algorithm, belonging to the technical field of image processing. The method comprises the steps of: setting the splicing mode; acquiring basic information of the two images to be fused; creating the spatial correspondence between the two scene images by data resampling; fusing and mosaicking the two images; saving the image file after fusion splicing; and ending fusion splicing. The invention puts forward the technical idea of trading space for time. By establishing a one-to-one correspondence between the two scene images in both space and image data, the invention achieves data processing suitable for parallel calculation, simplifies the algorithm logic, strengthens the program structure, automates the operation, and has high efficiency and high speed.

Owner:RES INST OF FOREST RESOURCE INFORMATION TECHN CHINESE ACADEMY OF FORESTRY +1

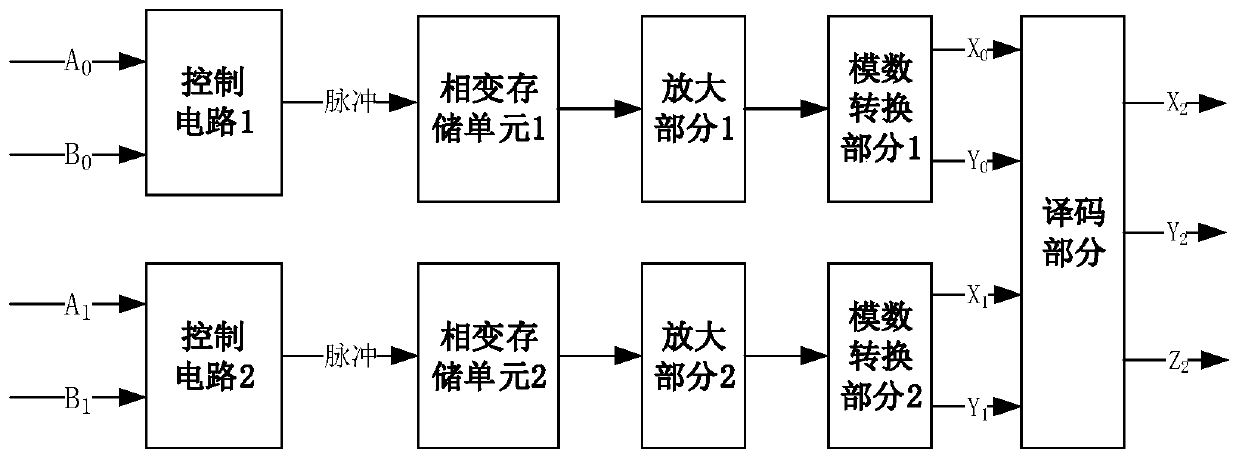

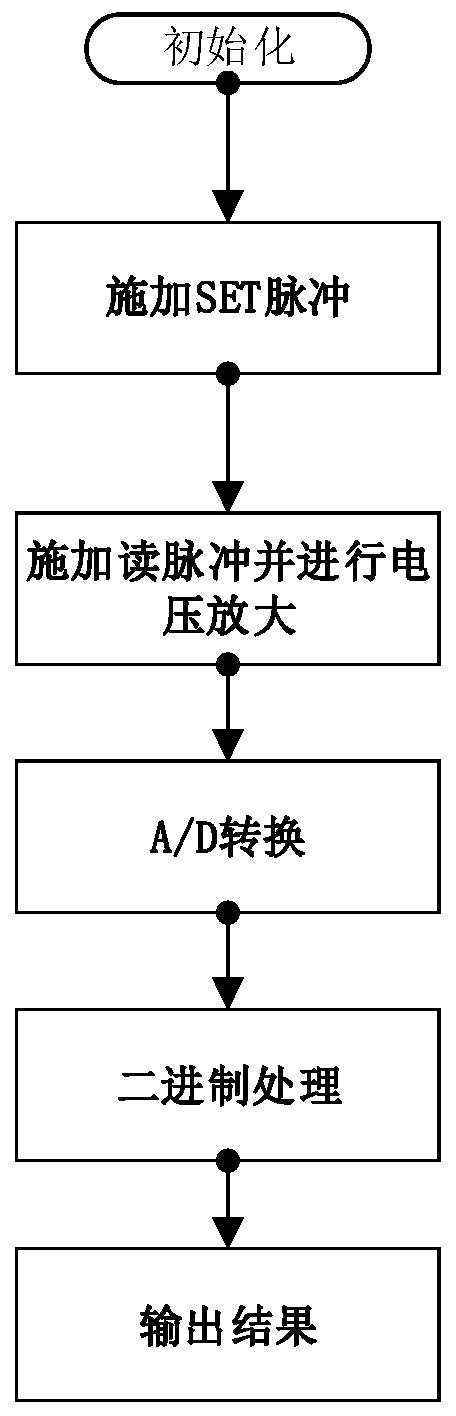

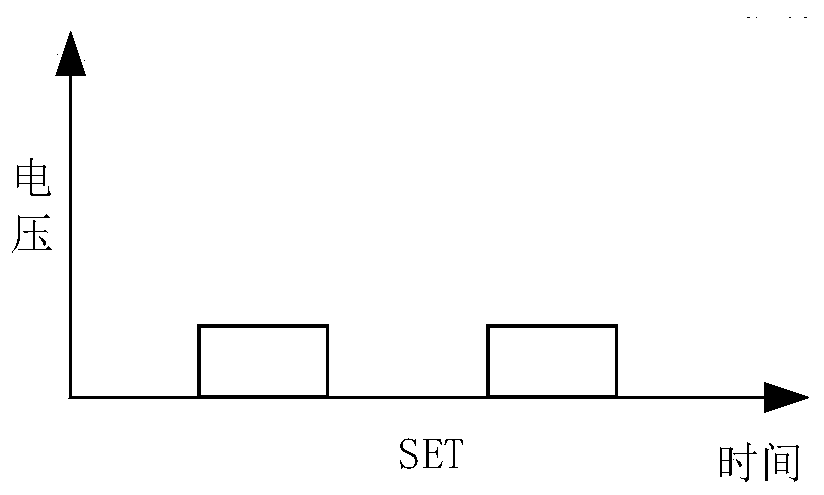

A method and a system for realizing binary parallel addition based on a phase change memory

Inactive | CN109841242A | Realize the merger | Implement parallel computing | Digital storage | Phase-change memory | Engineering

The invention discloses a method and system for realizing binary parallel addition based on a phase change memory, and the method comprises the steps: a first phase change memory receives a first pulse signal, and converts the first pulse signal into a corresponding first resistance value; the first amplification circuit reads the first resistance value and amplifies the first resistance value to obtain a corresponding first voltage value; the first analog-to-digital conversion circuit converts the first voltage value into first two-bit binary data; the second phase change memory receives the second pulse signal and converts the second pulse signal into a corresponding second resistance value; the second amplification circuit reads the second resistance value and amplifies the second resistance value to obtain a corresponding second voltage value; the second analog-to-digital conversion circuit converts the second voltage value into second two-bit binary data; and the decoding circuit is used for carrying out an addition operation on the first two-bit binary data and the second two-bit binary data to obtain three-bit binary data and outputting the three-bit binary data. According to the invention, the two-bit binary parallel addition calculation is realized by applying pulses to the phase change memory unit and combining the processing of a subsequent circuit.

Owner:HUAZHONG UNIV OF SCI & TECH
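The signal chain described in the abstract above can be modeled in software as a rough sketch. The four resistance levels, the read current, the gain and all function names are hypothetical values chosen for illustration; the patent abstract does not give them.

```python
import math

# Assumed four-level cell: pulse level 0..3 maps to a resistance in ohms.
RESISTANCE_LEVELS = [1e6, 1e5, 1e4, 1e3]
READ_CURRENT = 1e-6  # assumed read current in amperes
GAIN = 1.0           # assumed amplifier gain


def program_cell(pulse_level):
    """Phase change memory: convert a pulse (modeled as a level 0..3) into a resistance."""
    return RESISTANCE_LEVELS[pulse_level]


def amplify(resistance):
    """Amplification circuit: read the resistance and produce a voltage."""
    return resistance * READ_CURRENT * GAIN


def adc_2bit(voltage):
    """ADC: pick the nearest nominal level (compared on a log scale), emit two bits."""
    nominal = [amplify(r) for r in RESISTANCE_LEVELS]
    level = min(
        range(len(nominal)),
        key=lambda i: abs(math.log10(voltage) - math.log10(nominal[i])),
    )
    return format(level, "02b")


def decode_add(bits_a, bits_b):
    """Decoding circuit: add two 2-bit operands into a 3-bit result."""
    return format(int(bits_a, 2) + int(bits_b, 2), "03b")


def add_cells(pulse_a, pulse_b):
    """The two operand paths are independent, so hardware can evaluate them in parallel."""
    bits_a = adc_2bit(amplify(program_cell(pulse_a)))
    bits_b = adc_2bit(amplify(program_cell(pulse_b)))
    return decode_add(bits_a, bits_b)


if __name__ == "__main__":
    print(add_cells(3, 2))
```

Three output bits are enough because the largest possible sum of two 2-bit operands is 3 + 3 = 6.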

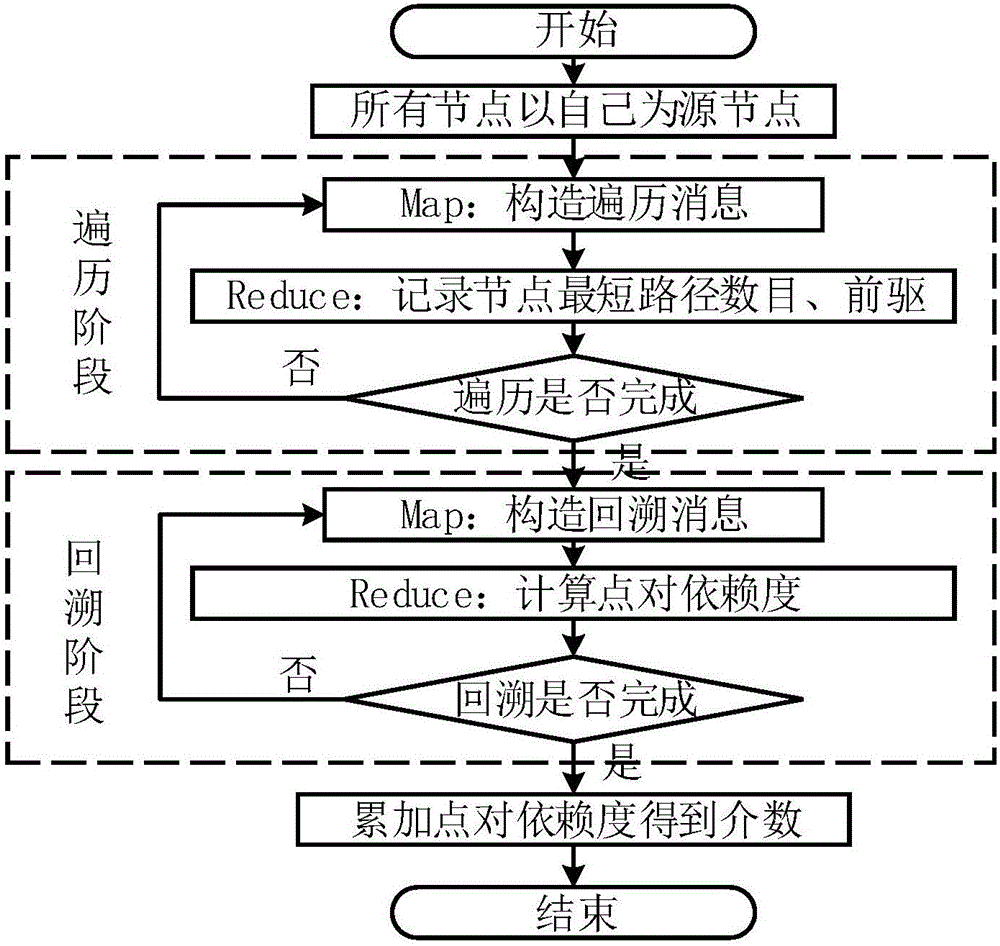

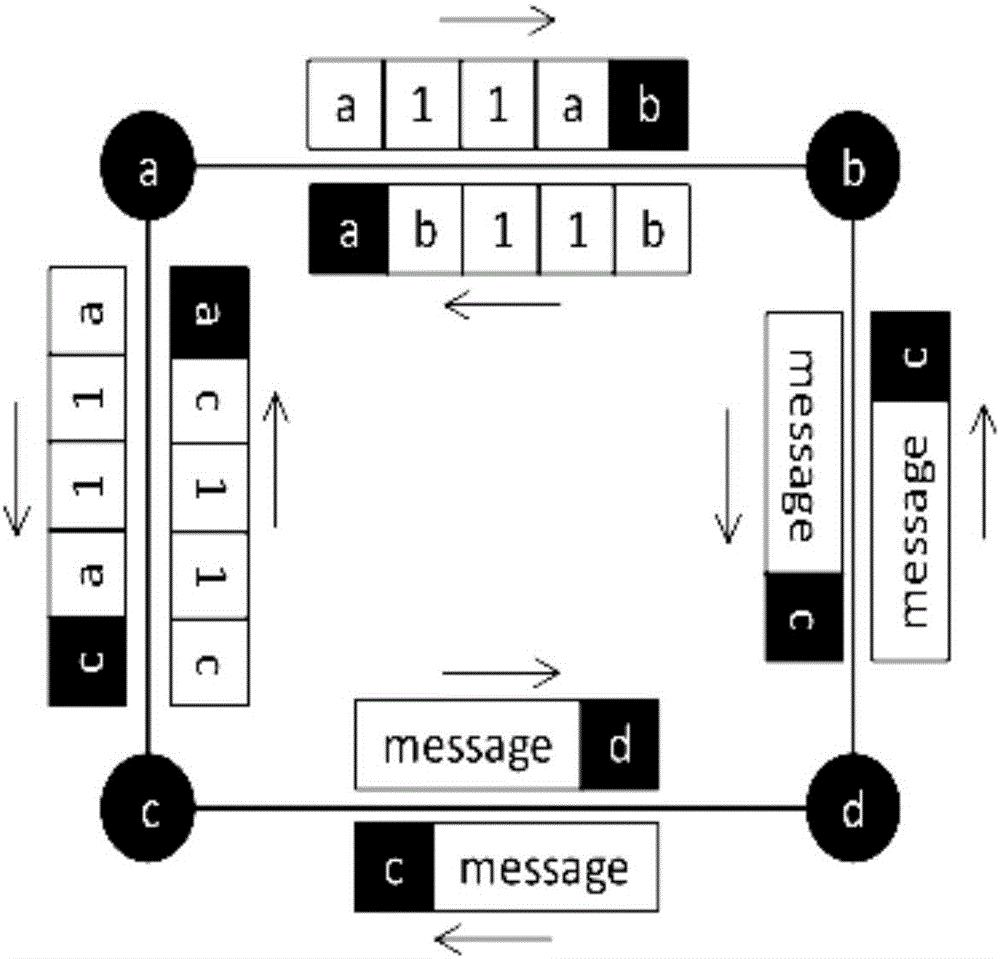

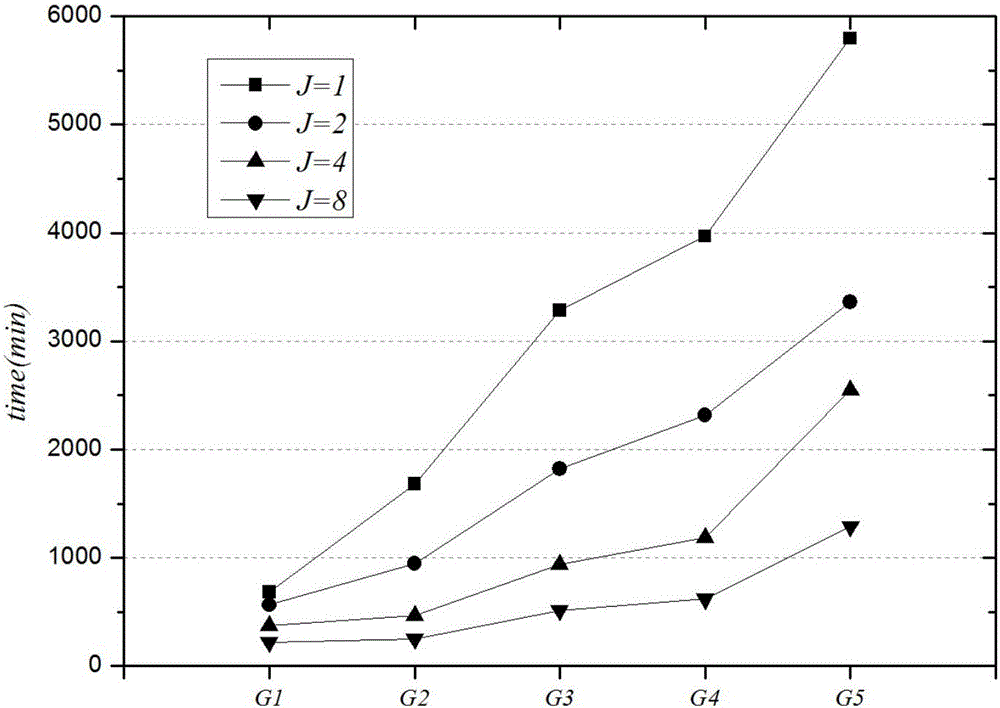

Complex network topology characteristic parameter calculation method and system based on MapReduce

Active | CN106330559A | Implement parallel computing | Improve computing efficiency | Data switching networks | Feature parameter | Message passing

The invention provides a method and system for calculating complex network topology characteristic parameters based on MapReduce. The algorithm is parallelized by message passing, and the method comprises the steps of: S1, generating update messages; S2, transmitting the messages; and S3, updating the internal state information of the nodes. Conventional stand-alone algorithms are relatively inefficient when calculating the topology characteristic parameters of large-scale networks. To address this, the invention provides a method for porting the stand-alone algorithms to the MapReduce computing framework in parallel form, overcomes the problems that arise during the porting, calculates the network topology characteristic parameters in parallel on a Hadoop computing platform, and improves calculation efficiency.

Owner:安徽奥里奥克科技股份有限公司
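The three steps S1 to S3 can be sketched as one map, shuffle and reduce round that repeats until no node changes. The example below computes hop distances from a source node, which is only one possible topology parameter and is chosen here as an assumption; it runs in a single process rather than on Hadoop, and all function names are illustrative.

```python
from collections import defaultdict


def map_phase(graph, dist):
    """S1: every node with a known distance emits distance + 1 to each neighbor."""
    for node, neighbors in graph.items():
        if dist[node] is not None:
            for neighbor in neighbors:
                yield neighbor, dist[node] + 1


def shuffle(messages):
    """S2: deliver messages by grouping them under their destination node."""
    inbox = defaultdict(list)
    for dest, value in messages:
        inbox[dest].append(value)
    return inbox


def reduce_phase(dist, inbox):
    """S3: each node keeps the smallest distance it has seen so far."""
    updated = dict(dist)
    changed = False
    for node, values in inbox.items():
        best = min(values)
        if updated[node] is None or best < updated[node]:
            updated[node] = best
            changed = True
    return updated, changed


def hop_distances(graph, source):
    """Repeat map/shuffle/reduce rounds until the node states stop changing."""
    dist = {node: None for node in graph}
    dist[source] = 0
    changed = True
    while changed:
        dist, changed = reduce_phase(dist, shuffle(map_phase(graph, dist)))
    return dist


if __name__ == "__main__":
    g = {"a": ["b", "c"], "b": ["a", "d"], "c": ["a", "d"],
         "d": ["b", "c", "e"], "e": ["d"]}
    print(hop_distances(g, "a"))
```

Each round only reads the previous round's state, so the map and reduce work for different nodes has no ordering dependency and can be spread across machines.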

AI chip-based data processing method and device

Active | CN108985451B | Improve data processing efficiency | Increase frame rate | Character and pattern recognition | Physical realisation | Computer hardware | Concurrent computation

Owner:吉林省华庆云科技集团有限公司

A brain-inspired computing chip and computing device

Active | CN109901878B | Solve efficiency problems | Address flexibility | Digital data processing details | Neural architectures | Coprocessor | Neuronal models

The invention provides a brain-inspired computing chip and a computing device. The chip includes a many-core system composed of one or more functional cores, and data is transmitted between the functional cores through an on-chip network. Each functional core includes: at least one neuron processor for calculating multiple neuron models; and at least one coprocessor, coupled with the neuron processor, for performing integral operations and/or multiply-add operations, wherein the neuron processor may invoke the coprocessor to perform multiply-add-like operations. The chip is intended to implement various neuromorphic algorithms efficiently, and is aimed in particular at the computing characteristics of rich SNN models: synaptic computation with high computing density and cell-body computation with high flexibility requirements.

Owner:LYNXI TECH CO LTD

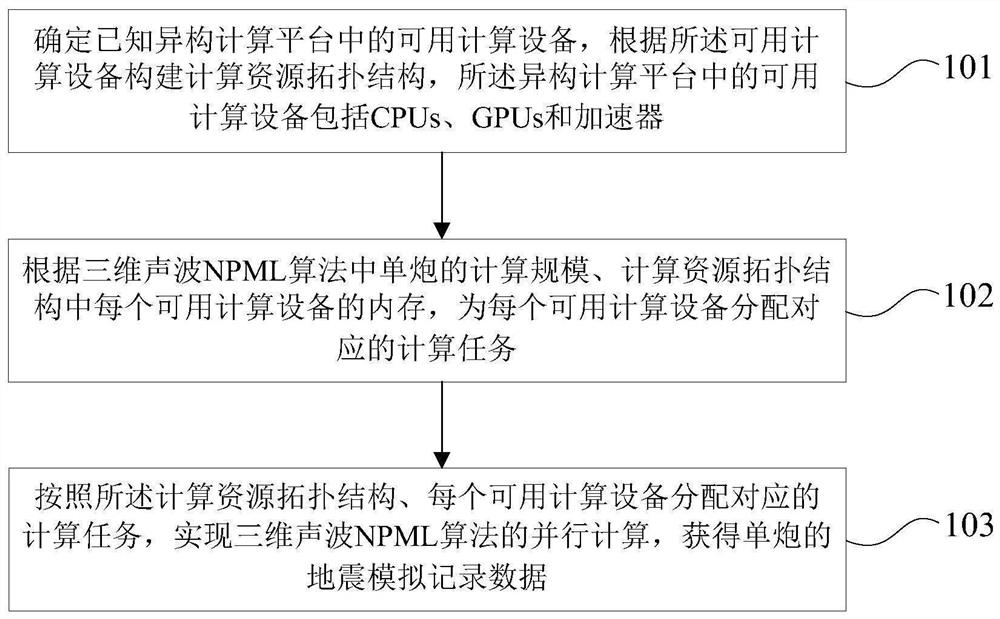

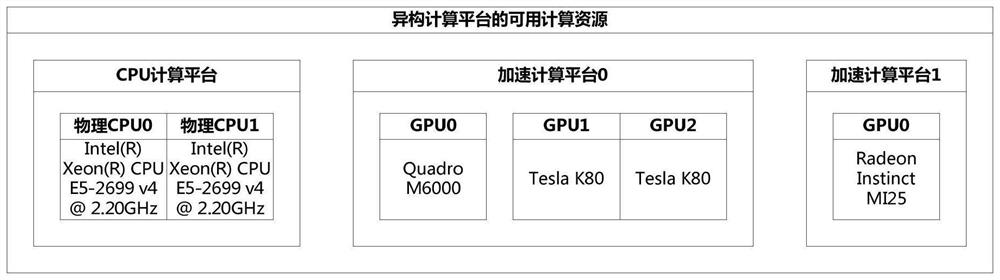

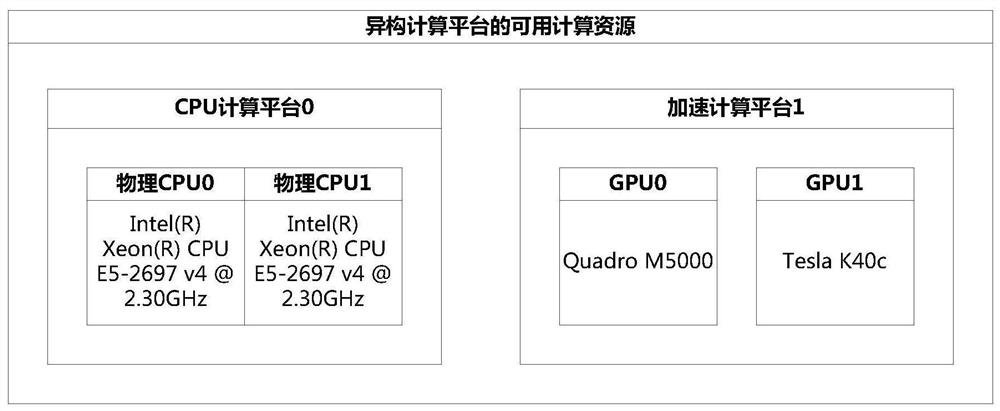

Heterogeneous parallel computing implementation method and device for three-dimensional sound wave NPML algorithm

Pending | CN112099936A | Unleash comprehensive computing performance | Shorten the total simulation calculation time | Resource allocation | Computational science | Concurrent computation

The invention provides a heterogeneous parallel computing implementation method and device for a three-dimensional sound wave NPML algorithm. The method comprises the following steps: determining the available computing devices in a known heterogeneous computing platform and constructing a computing resource topology from them, wherein the available computing devices include CPUs, GPUs and accelerators; allocating a corresponding computing task to each available computing device according to the calculation scale of a single shot in the three-dimensional sound wave NPML algorithm and the memory of each available computing device in the computing resource topology; and, according to the computing resource topology, having each available computing device execute its allocated computing task, so that the three-dimensional sound wave NPML algorithm is computed in parallel to obtain single-shot seismic simulation record data. The scheme shortens the total simulation calculation time of a single shot, improves application timeliness and efficiency per unit time, and makes full use of the computing capability of the heterogeneous computing platform.

Owner:BC P INC CHINA NAT PETROLEUM CORP +1
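The allocation step in the abstract above can be sketched under a strong simplifying assumption: the single-shot grid is cut into equal slabs, and each device receives a number of slabs proportional to its memory, with leftovers handed out by largest remainder. The abstract does not state the actual allocation rule, and the device names and sizes below are hypothetical.

```python
def allocate_slabs(total_slabs, device_memory_gb):
    """Split total_slabs across devices in proportion to memory (largest remainder)."""
    total_mem = sum(device_memory_gb.values())
    counts = {}
    remainders = {}
    for name, mem in device_memory_gb.items():
        counts[name], remainders[name] = divmod(total_slabs * mem, total_mem)
    leftover = total_slabs - sum(counts.values())
    # Hand the leftover slabs to the devices with the largest remainders.
    for name in sorted(remainders, key=lambda n: (-remainders[n], n))[:leftover]:
        counts[name] += 1
    return counts


def slab_ranges(counts):
    """Turn per-device counts into contiguous half-open slab ranges."""
    ranges = {}
    start = 0
    for name, count in counts.items():
        ranges[name] = (start, start + count)
        start += count
    return ranges


if __name__ == "__main__":
    devices = {"cpu0": 32, "gpu0": 16, "accel0": 8}  # hypothetical memory in GB
    counts = allocate_slabs(10, devices)
    print(counts)
    print(slab_ranges(counts))
```

A real implementation would also weigh compute throughput and the halo exchange between neighboring slabs; memory alone is used here only because it is the quantity the abstract names.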

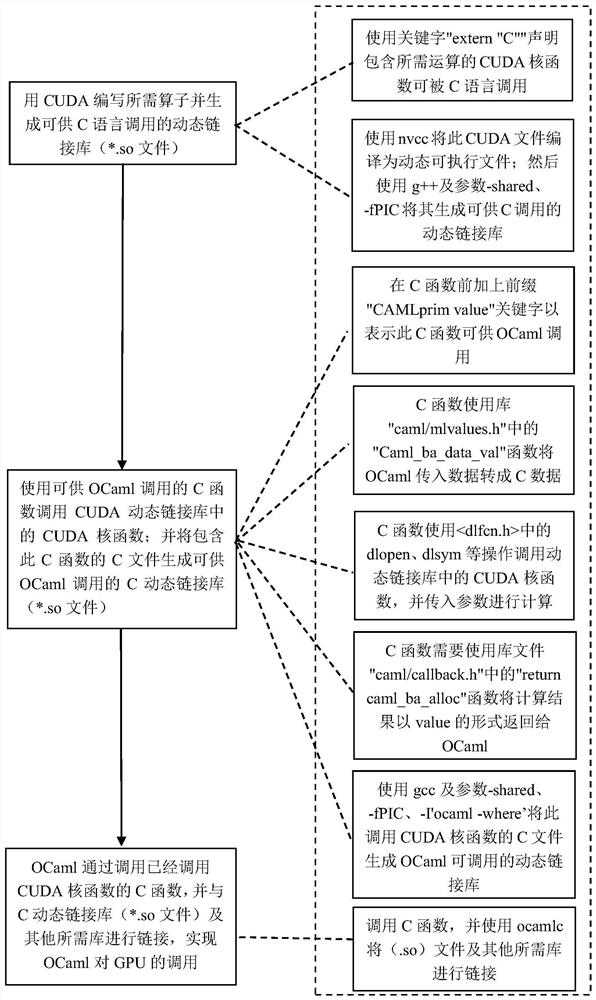

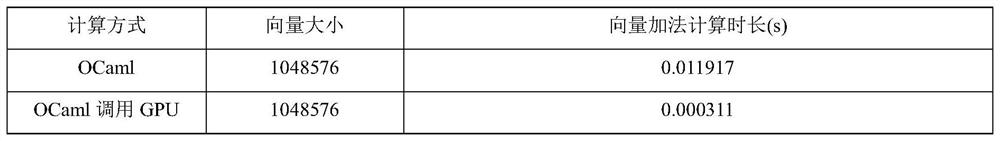

Method for accelerating operator by calling GPU (Graphics Processing Unit) through OCaml functional language

Pending | CN114153433A | Implement parallel computing | Increase computing speed | Resource allocation | Creation/generation of source code | Programming language | Concurrent computation

The invention discloses a method for accelerating operators by calling a GPU (Graphics Processing Unit) from the OCaml functional language. The method comprises the following steps: compiling the required operator, written in CUDA code, into a dynamic link library callable from C; calling the CUDA kernel function in the CUDA dynamic link library from a C function that OCaml can call, and generating a C dynamic link library callable from OCaml; and calling, from OCaml, the C function that calls the CUDA kernel function, and linking it with the C dynamic link library and other required libraries so that OCaml can call the GPU. By creating and calling dynamic link libraries, the method lets OCaml call the GPU to accelerate operators, addresses OCaml's slow operator computation on large volumes of data, improves computational efficiency by orders of magnitude, and enables OCaml to call the GPU for parallel computation.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

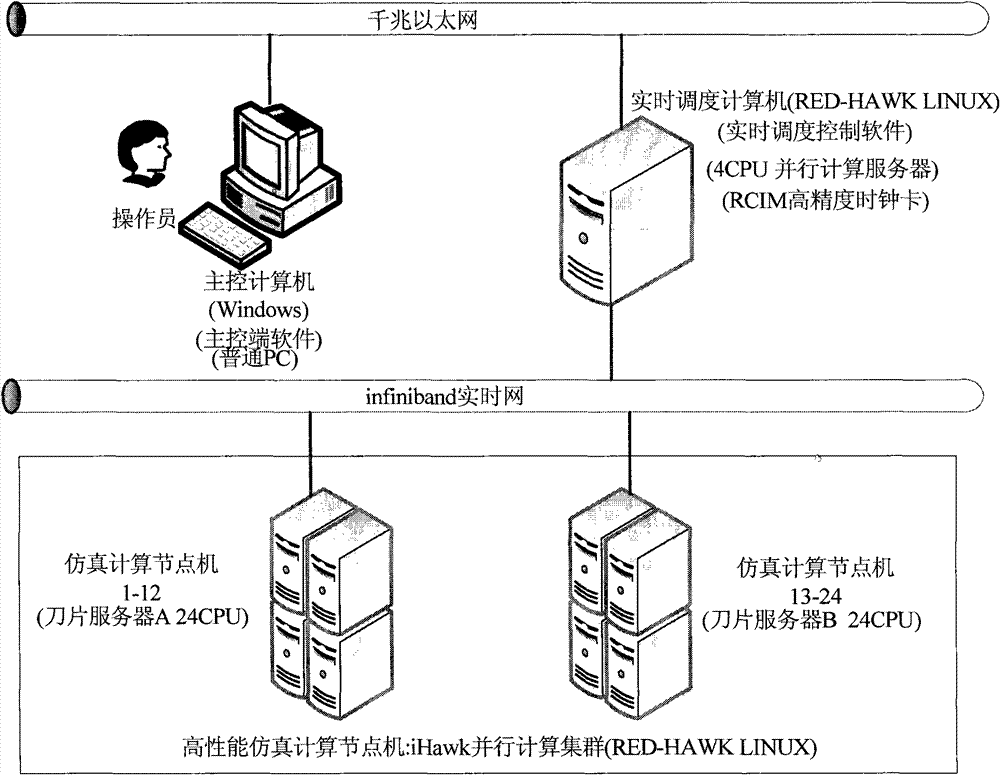

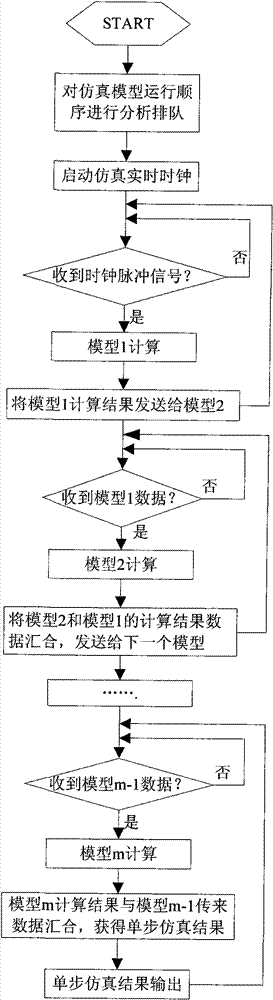

Parallel computation management-based autonomous navigation simulation and scheduling management system

Active | CN101719078B | Guaranteed real-time | Guaranteed parallelism | Multiprogramming arrangements | Software simulation/interpretation/emulation | Operational system | Software system

The invention discloses a parallel computation management-based autonomous navigation simulation and scheduling management system, which consists of a hardware system and a software system. The hardware system consists of a master control computer, a real-time scheduling computer, a simulation computing node, a real-time communication network and a clock card; the real-time communication network consists of a Gigabit Ethernet network and an InfiniBand high-performance real-time network. The software system consists of a supporting operating system, master control end software and real-time scheduling control software. The system ensures the real-time behavior of the simulation by adopting the InfiniBand high-performance real-time network and the RedHawk real-time operating system, and makes parallel computation possible at the hardware level by adopting a computing server and a blade server. It realizes parallel computation among the simulation system models with a data-driven, asynchronous, pipelined, parallel real-time scheduling algorithm. Combined with the hardware, this greatly speeds up the computation of the simulation system and ensures the parallelism and real-time behavior of the complex simulation system.

Owner:BEIJING INST OF SPACECRAFT SYST ENG

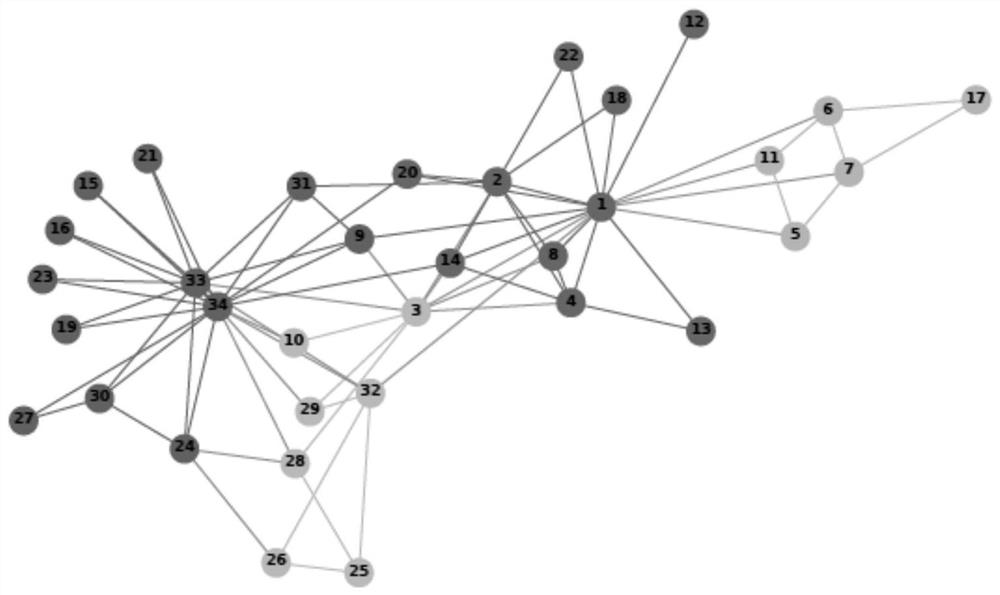

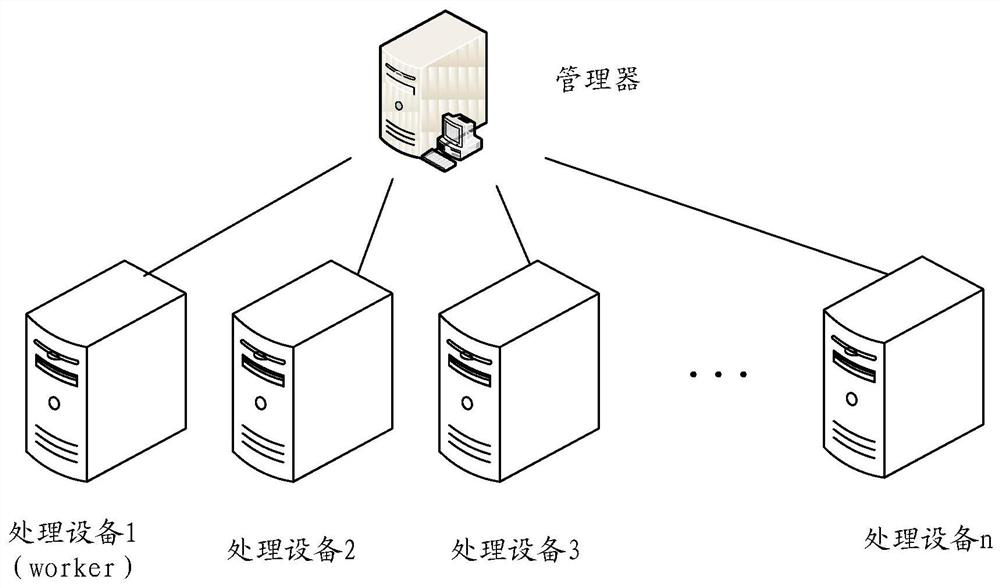

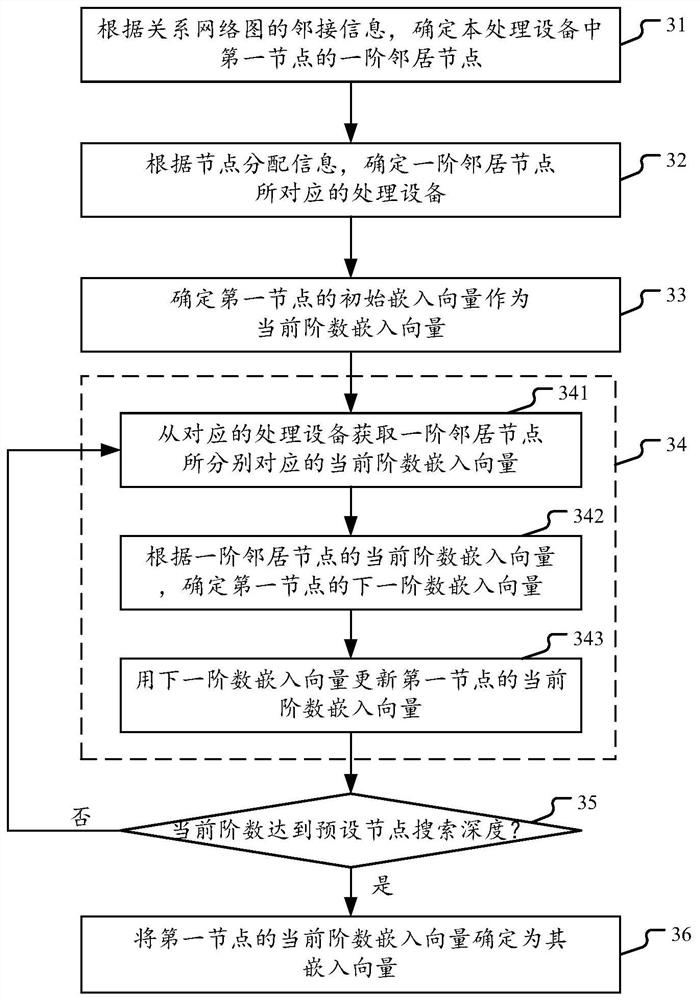

Method and Device for Distributed Graph Embedding

Active | CN109194707B | Reduce memory pressure | Implement parallel computing | Transmission | Distributed computing | Multidimensional space

The embodiment of the invention provides a method and a device for embedding a relation network graph in a multi-dimensional space through a distributed architecture. The distributed architecture comprises a plurality of processing devices, and one set of nodes of the relation network graph is assigned to each processing device. The method is executed by any one of the processing devices and comprises the steps of: determining the first-order adjacent nodes of a first node for which the processing device is responsible, and determining the processing devices corresponding to those first-order adjacent nodes; taking an initial embedding vector of the first node as its current-order embedding vector; using the current-order embedding vectors of the first-order adjacent nodes to calculate the next-order embedding vector of the first node, and using the next-order embedding vector to update the current-order embedding vector; and repeating the updating step until the current order reaches a preset search depth, then taking the current-order embedding vector at that point as the embedding vector in the multi-dimensional space. The distributed graph embedding method and device can thereby effectively embed the relation network graph into the multi-dimensional space.

Owner:ADVANCED NEW TECH CO LTD
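The order-by-order update in the abstract above can be sketched in a single process, with a dictionary standing in for the assignment of nodes to processing devices. The aggregation rule is an assumption: the abstract does not say how neighbor vectors are combined, so this sketch averages each node's own vector with those of its first-order neighbors. All names are illustrative.

```python
def embed(graph, initial, partition, depth):
    """Run `depth` rounds of neighbor aggregation over a partitioned node set."""
    current = dict(initial)
    for _ in range(depth):
        next_vectors = {}
        # Each worker only updates its own nodes; workers could run in parallel
        # because every update reads the previous round's vectors only.
        for worker, nodes in partition.items():
            for node in nodes:
                vectors = [current[node]] + [current[nb] for nb in graph[node]]
                # Assumed aggregation: component-wise mean.
                next_vectors[node] = [sum(c) / len(vectors) for c in zip(*vectors)]
        current = next_vectors
    return current


if __name__ == "__main__":
    graph = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
    initial = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    partition = {"worker0": ["a", "b"], "worker1": ["c"]}  # hypothetical split
    print(embed(graph, initial, partition, depth=1))
```

In a real deployment the read of `current[nb]` for a neighbor owned by another worker would be a network request to that worker, which is why the method first determines which processing device holds each first-order neighbor.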

